- Beats

- FileBeat

- Metricbeat

- Kibana

- Logstash

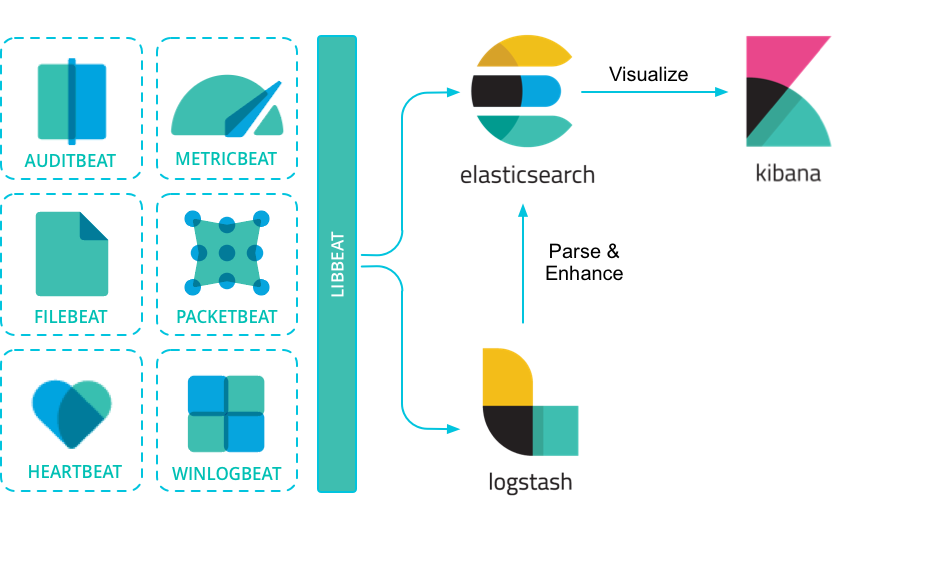

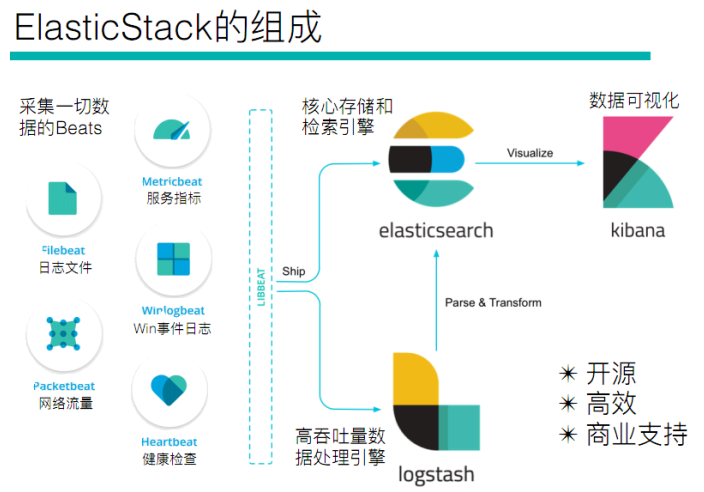

Elastic Stack

Introduction

Formerly known as ELK, the stack consists of Elasticsearch, Logstash, and Kibana, plus the more recently added Beats.

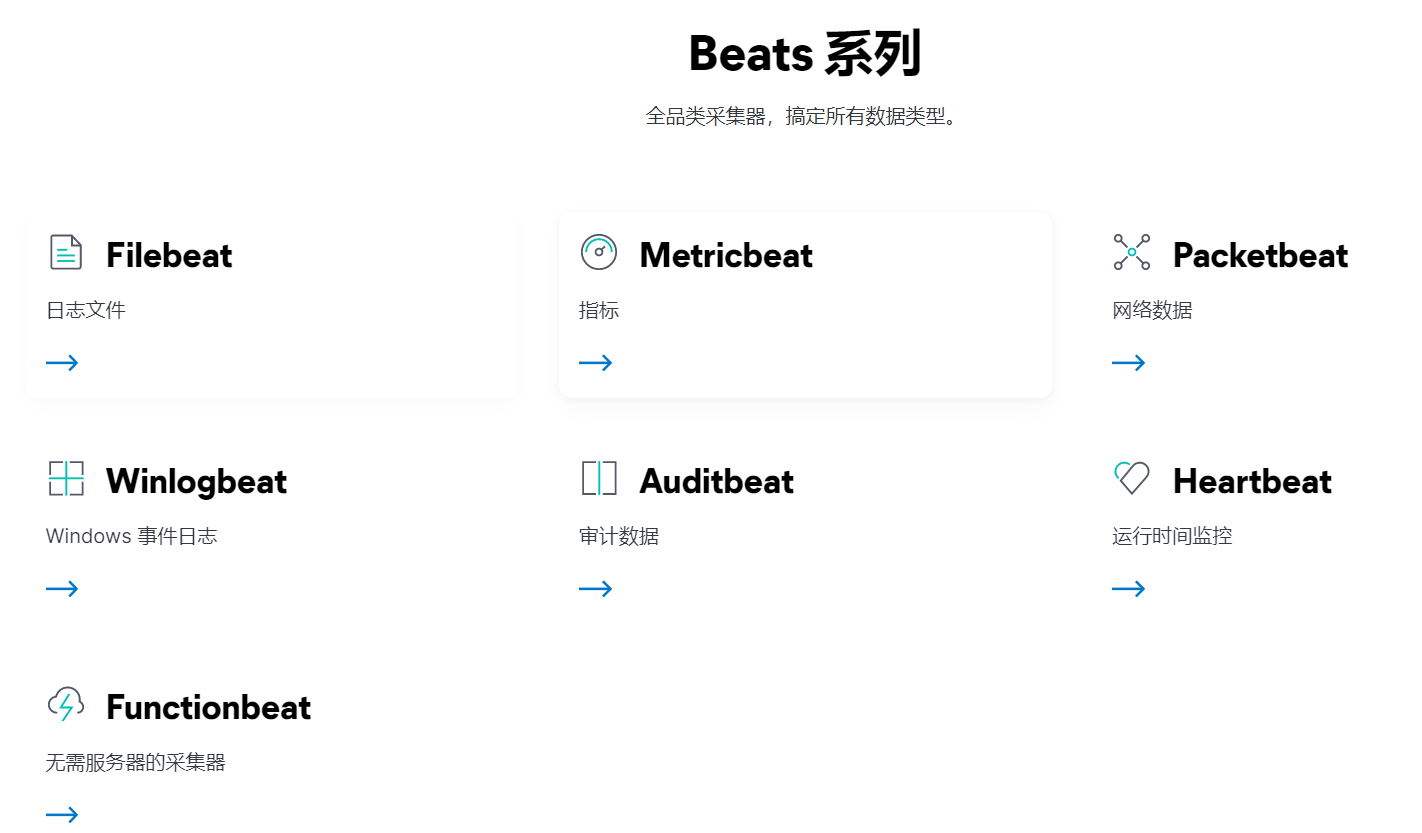

Beats

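Beats are agents that collect system monitoring data. The name is a collective term for the data shippers that run as clients on the monitored servers. They can send data directly to Elasticsearch, or to Elasticsearch through Logstash, for subsequent analysis.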

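Beats components

- Packetbeat: a network packet analyzer that monitors and collects network traffic. It sniffs the traffic between servers, parses application-layer protocols, and correlates the messages into transactions. It supports ICMP (v4 and v6), DNS, HTTP, MySQL, PostgreSQL, Redis, MongoDB, Memcache, and other protocols.

- Filebeat: monitors and collects server log files; it has replaced logstash-forwarder.

- Metricbeat: periodically fetches metrics from external systems; it can monitor and collect from Apache, HAProxy, MongoDB, MySQL, Nginx, PostgreSQL, Redis, System, ZooKeeper, and other services.

- Winlogbeat: monitors and collects Windows event logs.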

Logstash

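Java-based (it runs on the JVM); a tool for collecting, parsing, and storing logs.

Because its collection features partly overlap with Beats, it is now used mainly for data processing.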

ElasticSearch

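A Java-based, open-source distributed search engine. Its features include distribution, zero configuration, automatic discovery, automatic index sharding, index replicas, a RESTful API, multiple data sources, and automatic search load balancing.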

Kibana

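Built on Node.js; a free, open-source tool. Kibana provides a friendly web interface for analyzing the logs handled by Logstash and Elasticsearch, and can aggregate, analyze, and search important log data.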

Beats: log collection

Beats: Data Shippers for Elasticsearch | Elastic

FileBeat

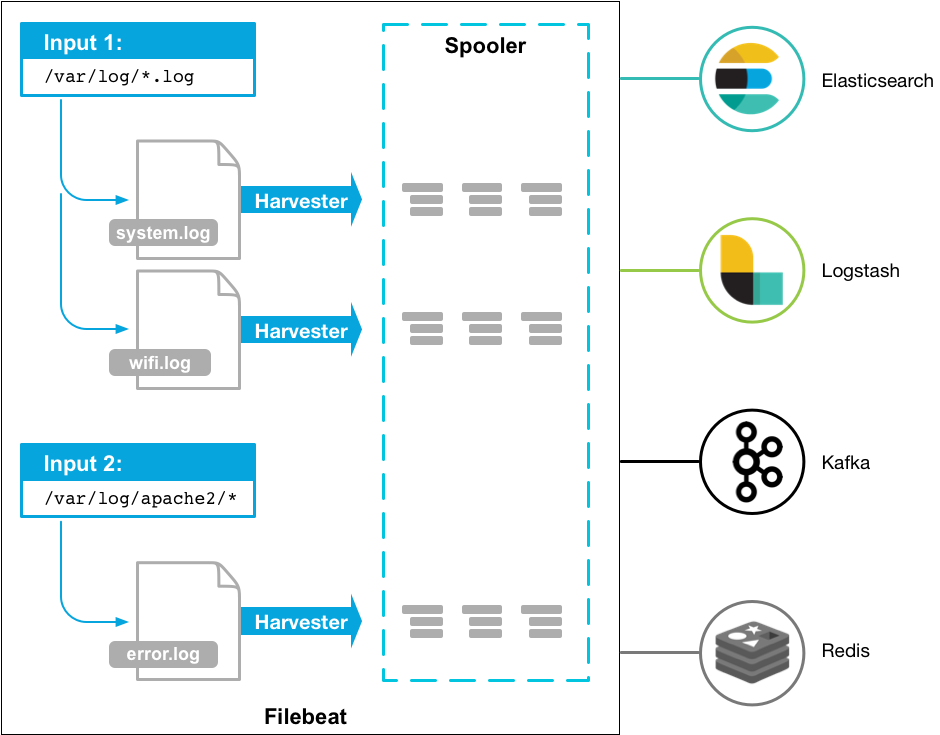

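Architecture

Filebeat consists of two main components: prospectors and harvesters.

harvester

A harvester reads the content of a single file.

If the file is deleted or renamed while it is being read, Filebeat continues to read it.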

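Prospector

A prospector manages the harvesters and finds all the sources to read from. If the input type is log, the prospector finds every file that matches the configured paths and starts one harvester per file.

Filebeat supports two prospector types: log and stdin.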
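The division of labour between the two components can be sketched in a few lines of Python. This is only an illustration of the idea, not how Filebeat is implemented: the function names `prospect` and `harvest`, the event keys, and the demo file names are all assumptions made for this example.

```python
import glob
import os
import tempfile


def harvest(path):
    """Harvester sketch: read a single file line by line and yield events."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            yield {"source": path, "message": line.rstrip("\n")}


def prospect(patterns):
    """Prospector sketch: expand the path patterns, one harvester per file."""
    events = []
    for pattern in patterns:
        for path in sorted(glob.glob(pattern)):
            events.extend(harvest(path))
    return events


# Demo with two throwaway log files (hypothetical names).
log_dir = tempfile.mkdtemp()
for name, body in (("a.log", "first\nsecond\n"), ("b.log", "third\n")):
    with open(os.path.join(log_dir, name), "w", encoding="utf-8") as handle:
        handle.write(body)

events = prospect([os.path.join(log_dir, "*.log")])
messages = [event["message"] for event in events]
```

Here the prospector only decides which files to read, while each harvester call handles exactly one file.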

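How Filebeat keeps track of file state

- Filebeat keeps the state of each file and frequently flushes that state to a registry file on disk.

- The state records the last offset a harvester was reading from and ensures that all log lines are sent.

- If the output (Elasticsearch or Logstash) is unreachable, Filebeat keeps track of the last line sent and continues reading the file once the output is available again.

- While Filebeat is running, each prospector also keeps the file state in memory. When Filebeat restarts, the data in the registry file /data/registry is used to rebuild the file state.

- The file state is recorded in /data/registry.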
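The offset bookkeeping described above can be illustrated with a minimal Python sketch. It assumes a made-up registry format (a JSON object mapping each source path to a byte offset); the real Filebeat registry stores more information, and the helper names here are hypothetical.

```python
import json
import os
import tempfile


def load_offset(registry_path, source):
    """Return the last saved offset for `source`, or 0 if none is recorded."""
    if not os.path.exists(registry_path):
        return 0
    with open(registry_path, encoding="utf-8") as handle:
        return json.load(handle).get(source, 0)


def save_offset(registry_path, source, offset):
    """Persist the offset so that a restart can resume from it."""
    state = {}
    if os.path.exists(registry_path):
        with open(registry_path, encoding="utf-8") as handle:
            state = json.load(handle)
    state[source] = offset
    with open(registry_path, "w", encoding="utf-8") as handle:
        json.dump(state, handle)


def read_new_lines(registry_path, source):
    """Read only the bytes appended since the last recorded offset."""
    with open(source, "rb") as handle:
        handle.seek(load_offset(registry_path, source))
        data = handle.read()
        new_offset = handle.tell()
    save_offset(registry_path, source, new_offset)
    return data.decode("utf-8").splitlines()


work_dir = tempfile.mkdtemp()
log_path = os.path.join(work_dir, "app.log")
registry_path = os.path.join(work_dir, "registry.json")

with open(log_path, "w", encoding="utf-8") as handle:
    handle.write("line 1\nline 2\n")
first_pass = read_new_lines(registry_path, log_path)

with open(log_path, "a", encoding="utf-8") as handle:
    handle.write("line 3\n")
# Nothing is kept in memory between calls, so this behaves like a restart.
second_pass = read_new_lines(registry_path, log_path)
```

Because the offset lives on disk, the second call picks up only the line appended after the first call.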

Deployment and running

`mkdir -p /haoke/beats`

Start command

`./filebeat -e -c haoke.yml`

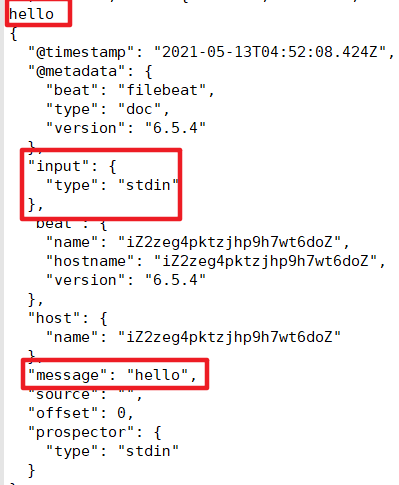

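Standard input

Create the configuration file haoke.yml

- @metadata: metadata

- message: the text that was entered

- prospector: the standard-input prospector

- input: console standard input

- beat: the Beat version and host information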

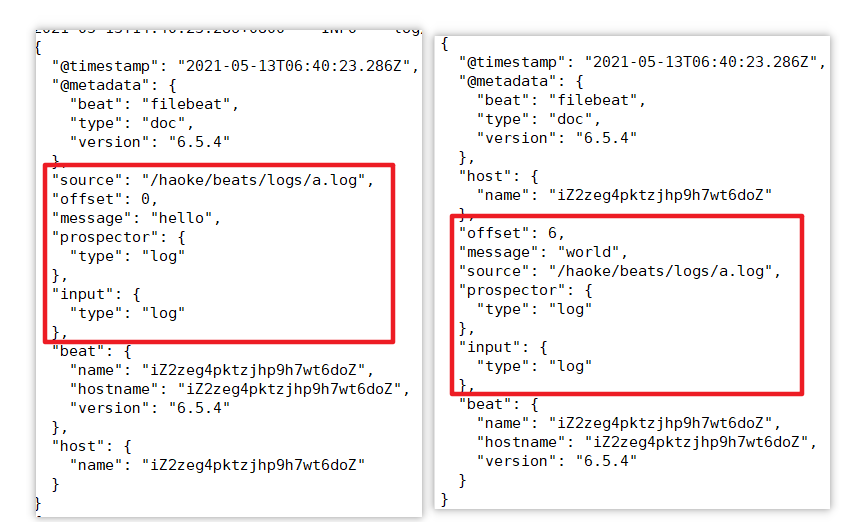

Reading files

`cd filebeat-6.5.4-linux-x86_64`

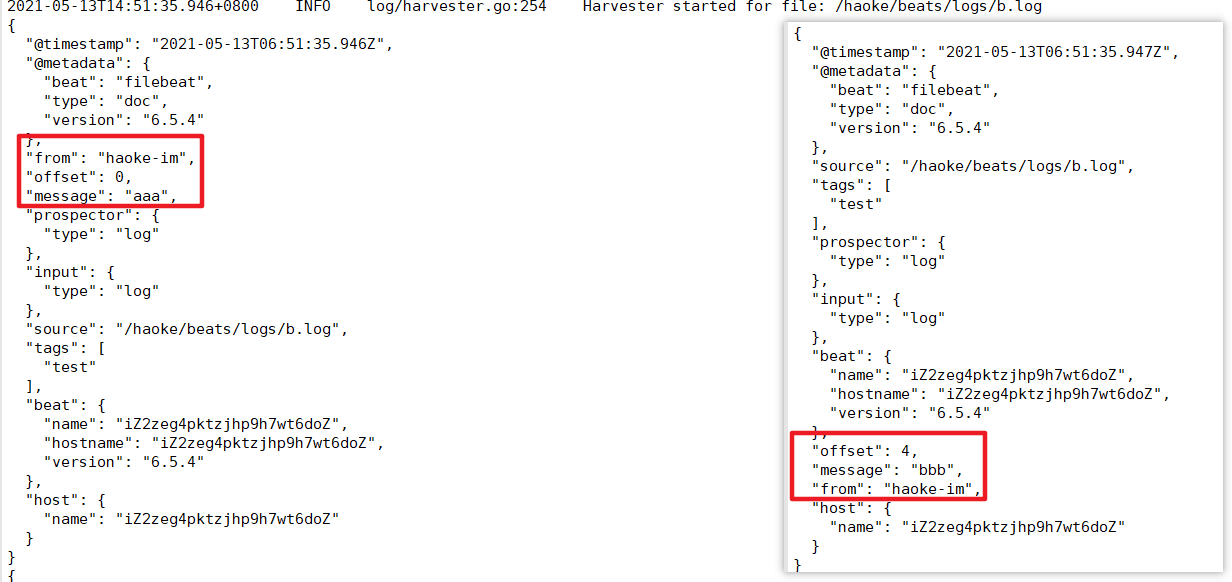

As shown, as soon as an update to the log file is detected, the new content is read immediately and written to the console.

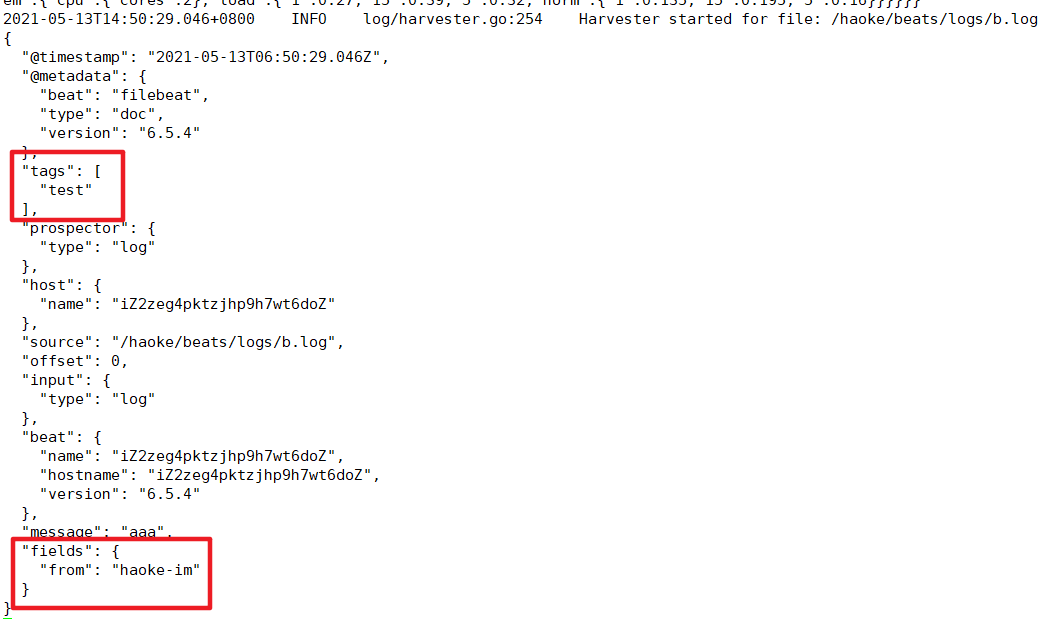

Custom fields

Configure the file-reading input in haoke-log.yml

fields_under_root: false

fields_under_root: true

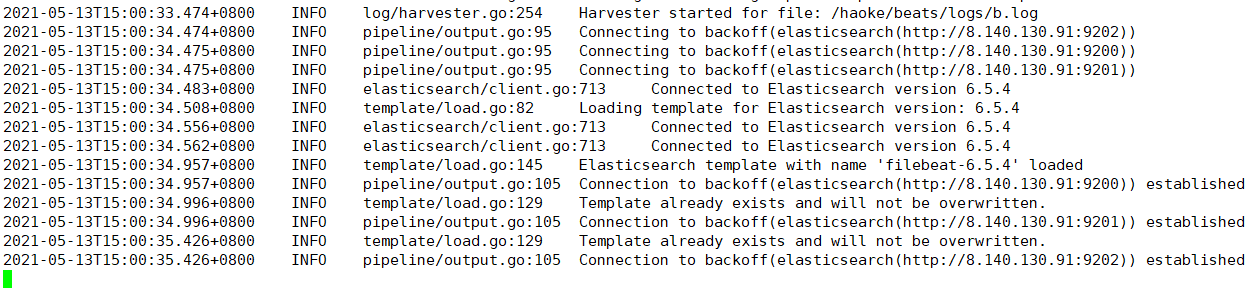

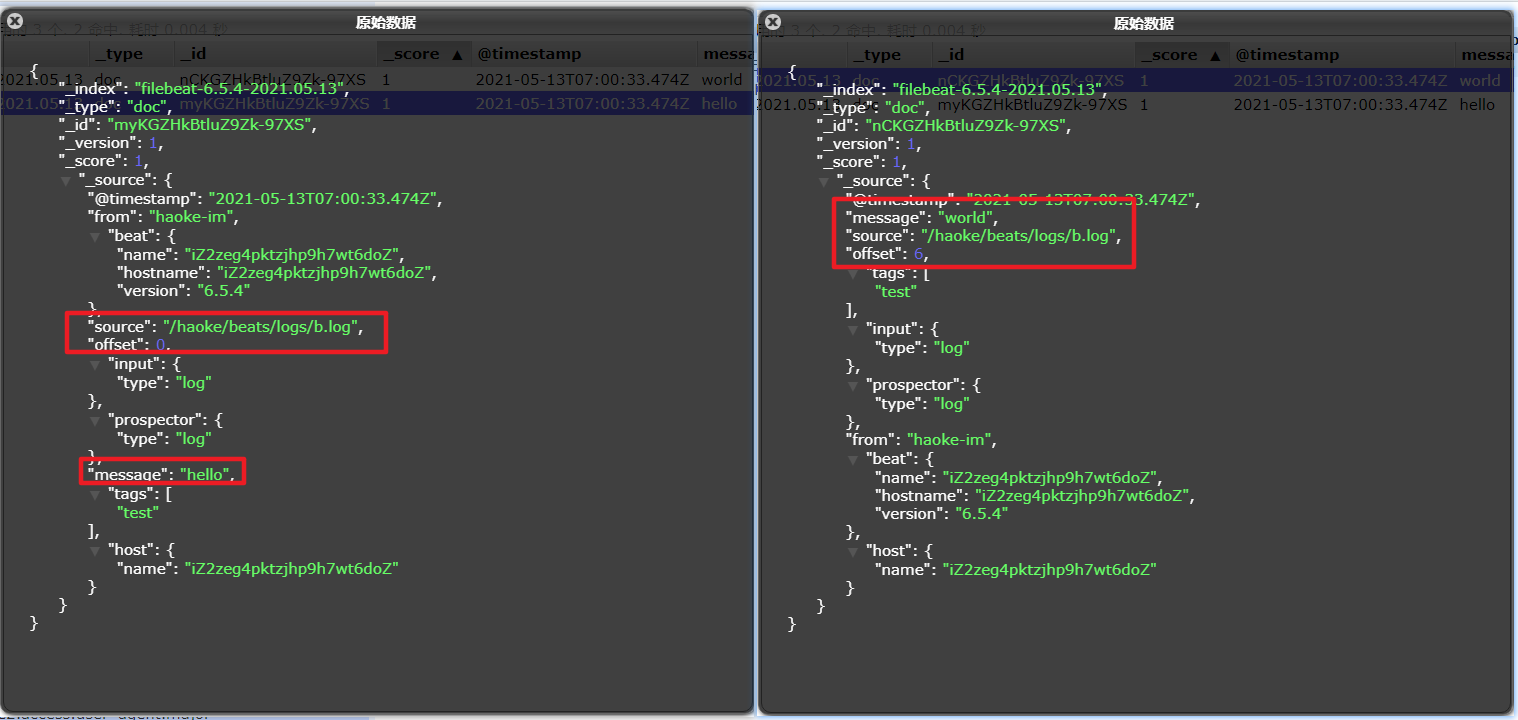

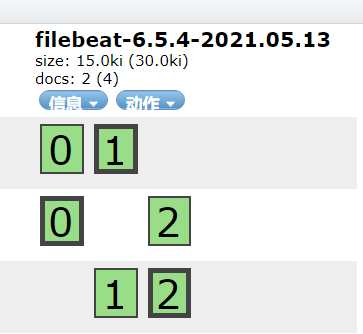
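The effect of `fields_under_root` can be modelled with a small Python sketch. The function `build_event` and the custom field `from: haoke-im` are hypothetical, chosen only to show where custom fields end up in the event.

```python
def build_event(message, custom_fields, fields_under_root):
    """Attach custom fields at the top level or under a `fields` key."""
    event = {"message": message}
    if fields_under_root:
        # Custom fields become top-level keys of the event.
        event.update(custom_fields)
    else:
        # Custom fields are grouped under a `fields` sub-dictionary.
        event["fields"] = dict(custom_fields)
    return event


custom = {"from": "haoke-im"}  # hypothetical custom field
nested = build_event("hello", custom, fields_under_root=False)
flat = build_event("hello", custom, fields_under_root=True)
```

With `false`, the custom field is reachable as `fields.from`; with `true`, it sits next to `message` at the root of the event.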

Output to Elasticsearch

Configure the file-reading input in haoke-log.yml

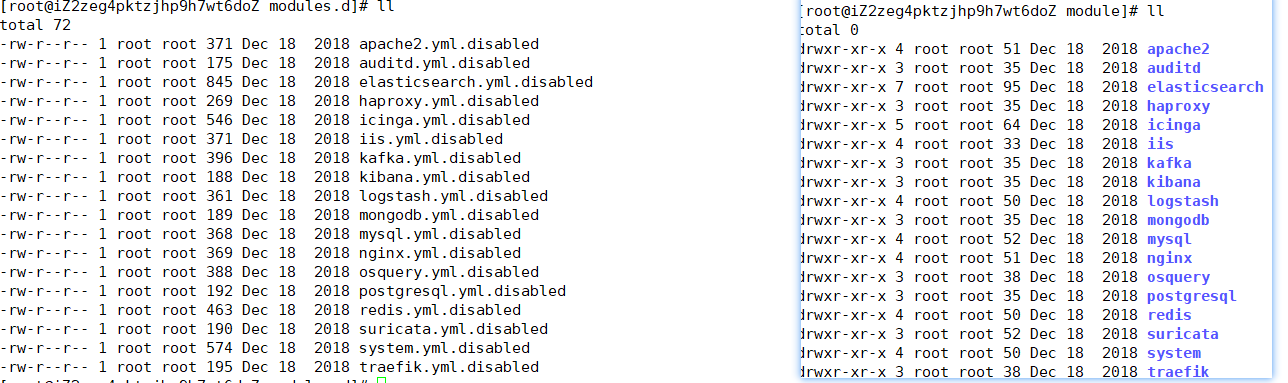

Module

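So far, reading and processing log data has relied on hand-written configuration. Filebeat also ships with a large number of modules that simplify this configuration.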

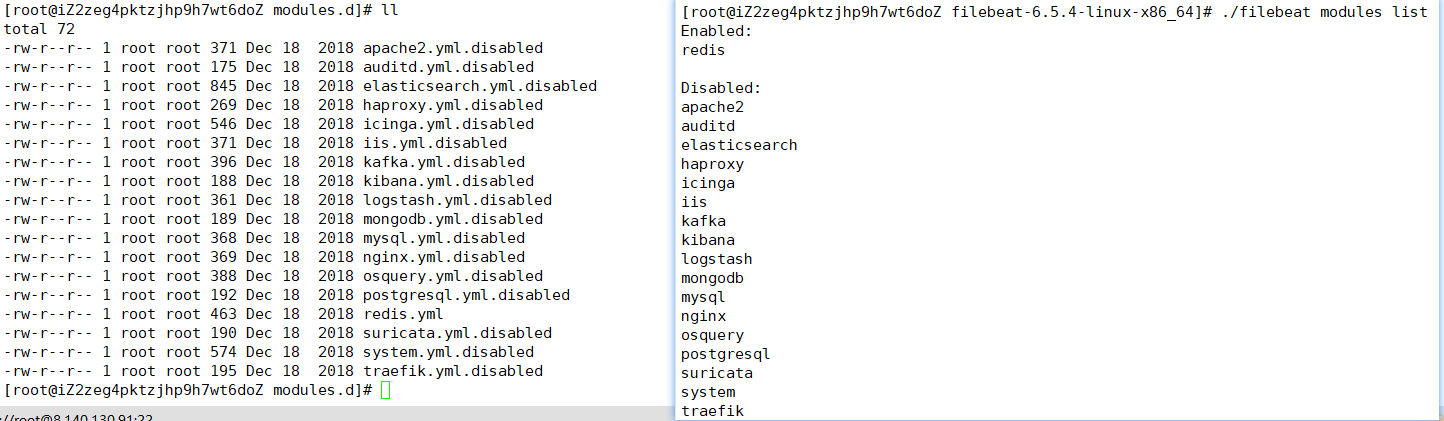

`./filebeat modules list`

Enabling a module

`./filebeat modules enable redis  # enable`

As shown, enabling a module simply removes the disabled suffix from the corresponding yml file under filebeat-6.5.4-linux-x86_64/modules.d/.

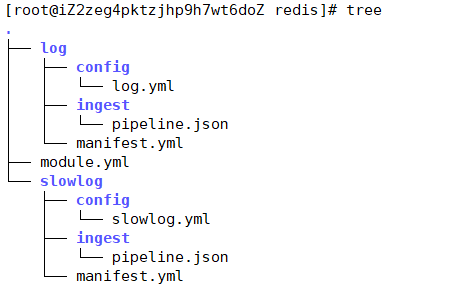
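The rename behind enabling a module can be mimicked in Python on a temporary directory. This is a sketch under the assumption that disabled modules are stored as `<name>.yml.disabled`; the helper names are hypothetical, and the real CLI does the rename for you.

```python
import os
import tempfile


def enable_module(modules_dir, name):
    """Rename `<name>.yml.disabled` to `<name>.yml`; return True if renamed."""
    disabled = os.path.join(modules_dir, name + ".yml.disabled")
    enabled = os.path.join(modules_dir, name + ".yml")
    if os.path.exists(disabled):
        os.rename(disabled, enabled)
        return True
    return False


def list_modules(modules_dir):
    """Split the directory contents into enabled and disabled module names."""
    enabled, disabled = [], []
    for entry in sorted(os.listdir(modules_dir)):
        if entry.endswith(".yml.disabled"):
            disabled.append(entry[: -len(".yml.disabled")])
        elif entry.endswith(".yml"):
            enabled.append(entry[: -len(".yml")])
    return enabled, disabled


# Stand-in for modules.d/ with two disabled modules.
modules_dir = tempfile.mkdtemp()
for filename in ("redis.yml.disabled", "nginx.yml.disabled"):
    open(os.path.join(modules_dir, filename), "w").close()

renamed = enable_module(modules_dir, "redis")
enabled, disabled = list_modules(modules_dir)
```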

redis module

- log: the server log

- slowlog: the slow-query log

redis module configuration

`- module: redis`

Modify the Redis Docker container

By default Redis does not write its log to a file (it logs to standard output), so logging must be configured explicitly.

`docker create --name redis-node01 --net host -v /data/redis-data/node01:/data redis --cluster-enabled yes --cluster-announce-ip 8.140.130.91 --cluster-announce-bus-port 16379 --cluster-config-file nodes-node-01.conf --port 6379 --loglevel debug --logfile nodes-node-01.log`

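loglevel has four levels: debug, verbose, notice, and warning

- debug: a large amount of information, useful for development and testing;

- verbose: roughly equivalent to info in log4j; plenty of information, but less noisy than debug;

- notice: general information;

- warning: only very important or critical messages are logged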

Configure Filebeat

`cd /haoke/beats/filebeat-6.5.4-linux-x86_64/modules.d/`

`vim haoke-redis.yml`

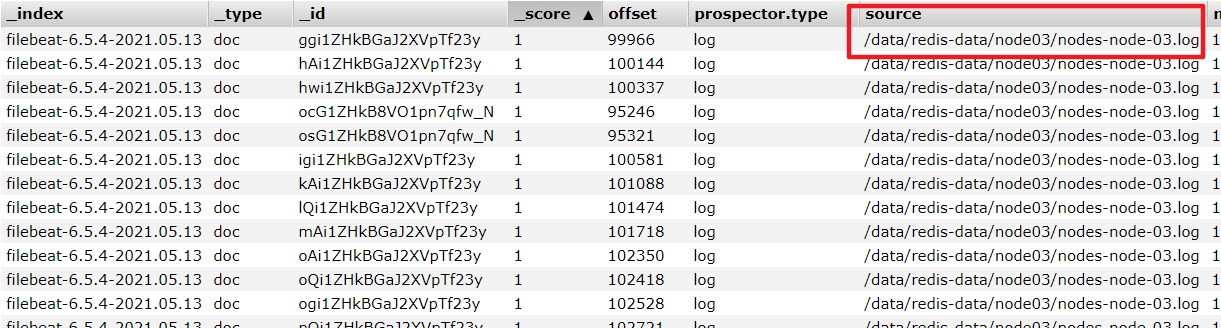

Start and test

`./filebeat -e -c haoke-redis.yml --modules redis`

Using other modules

Modules | Filebeat Reference [7.12] | Elastic

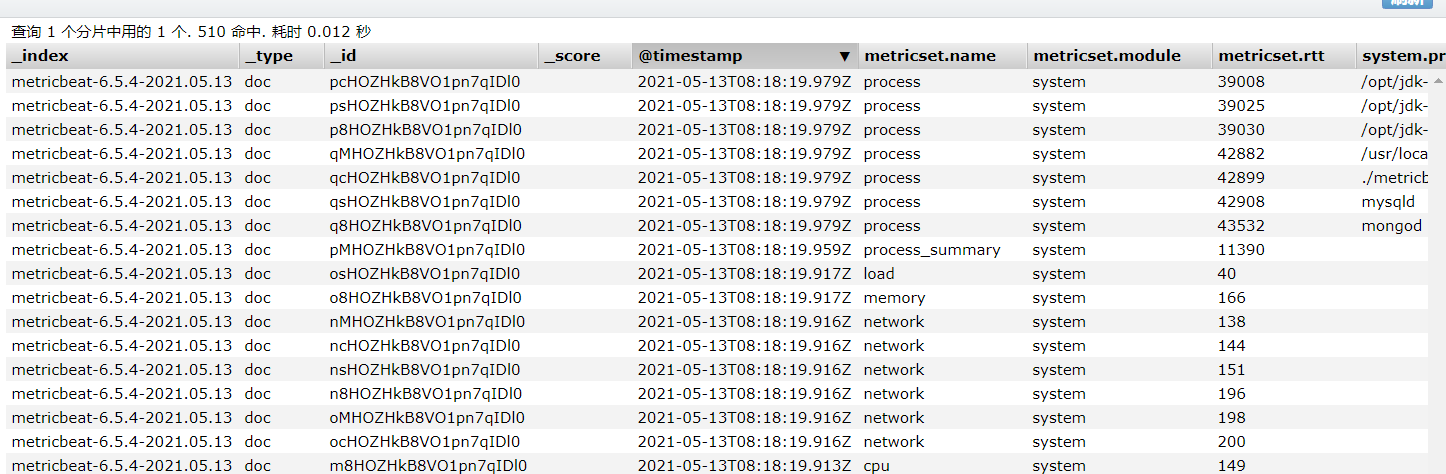

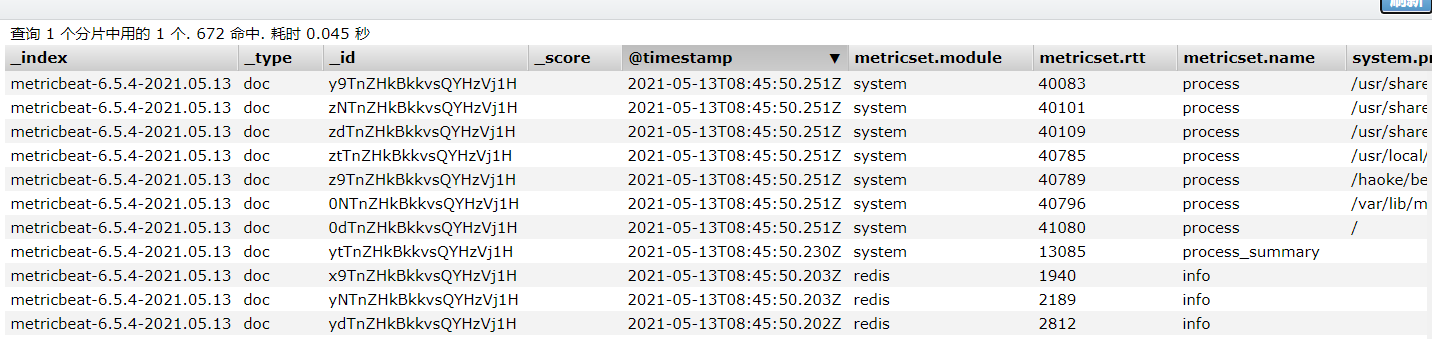

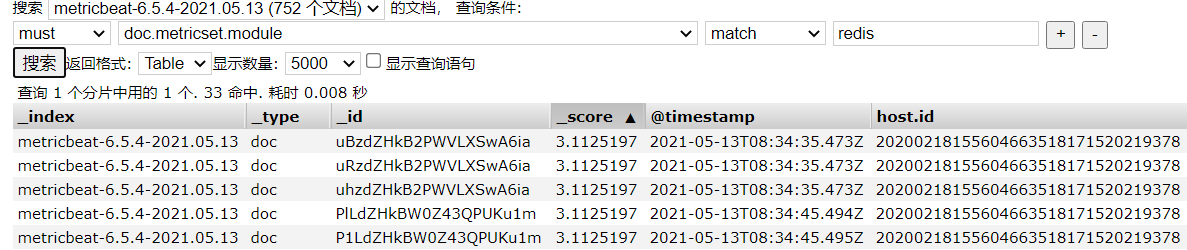

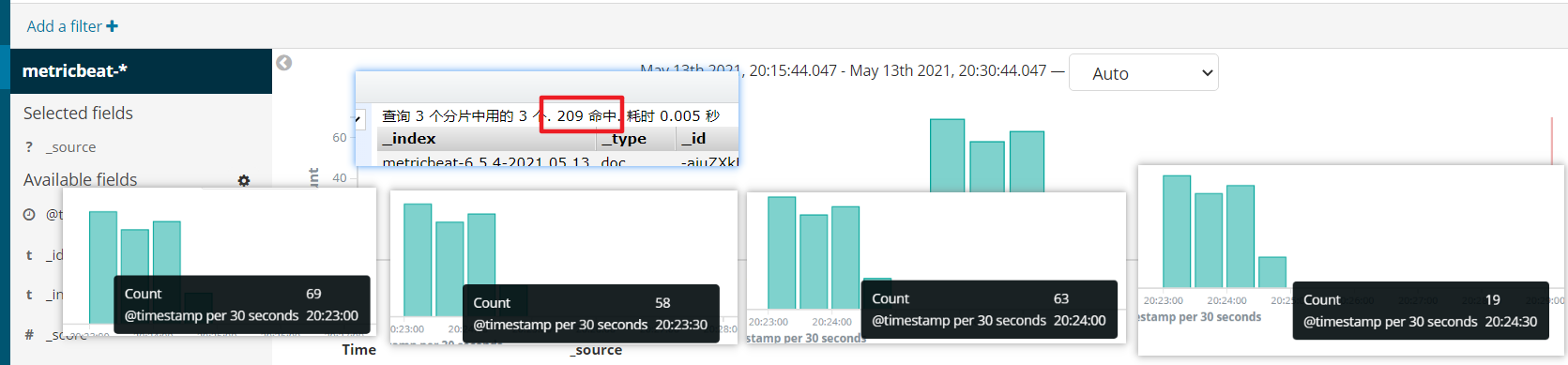

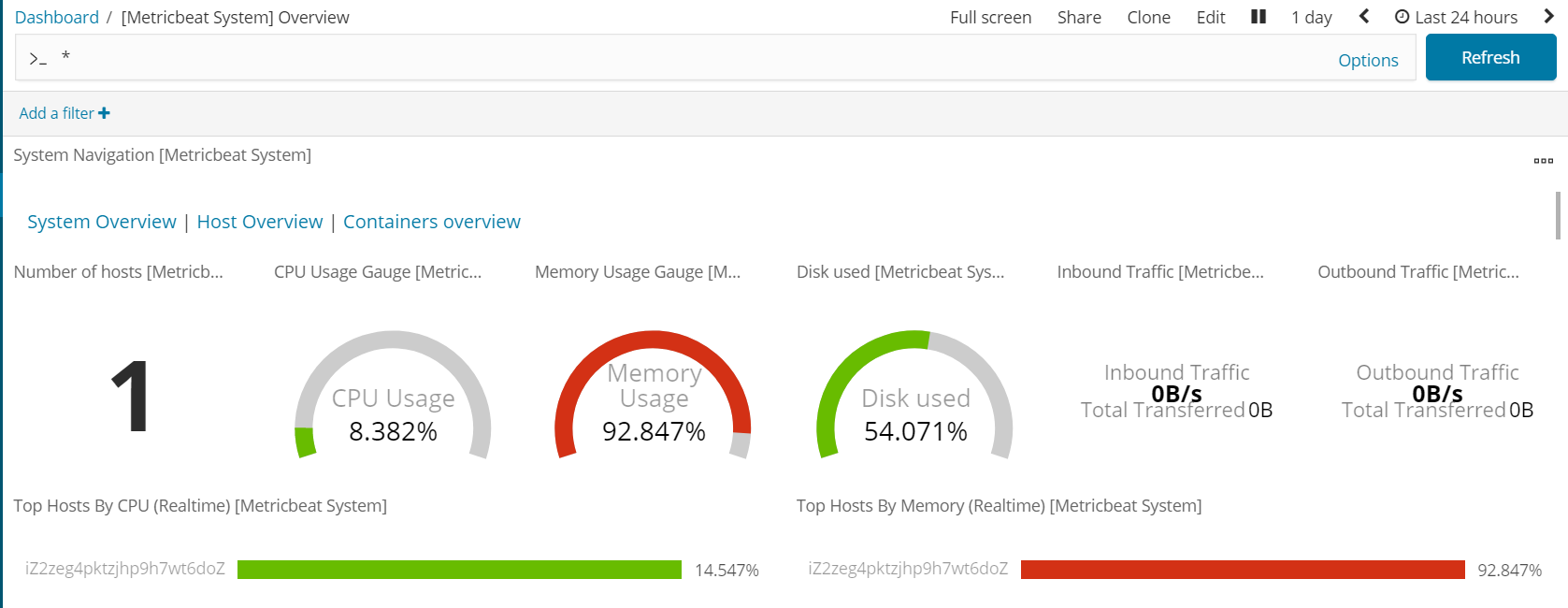

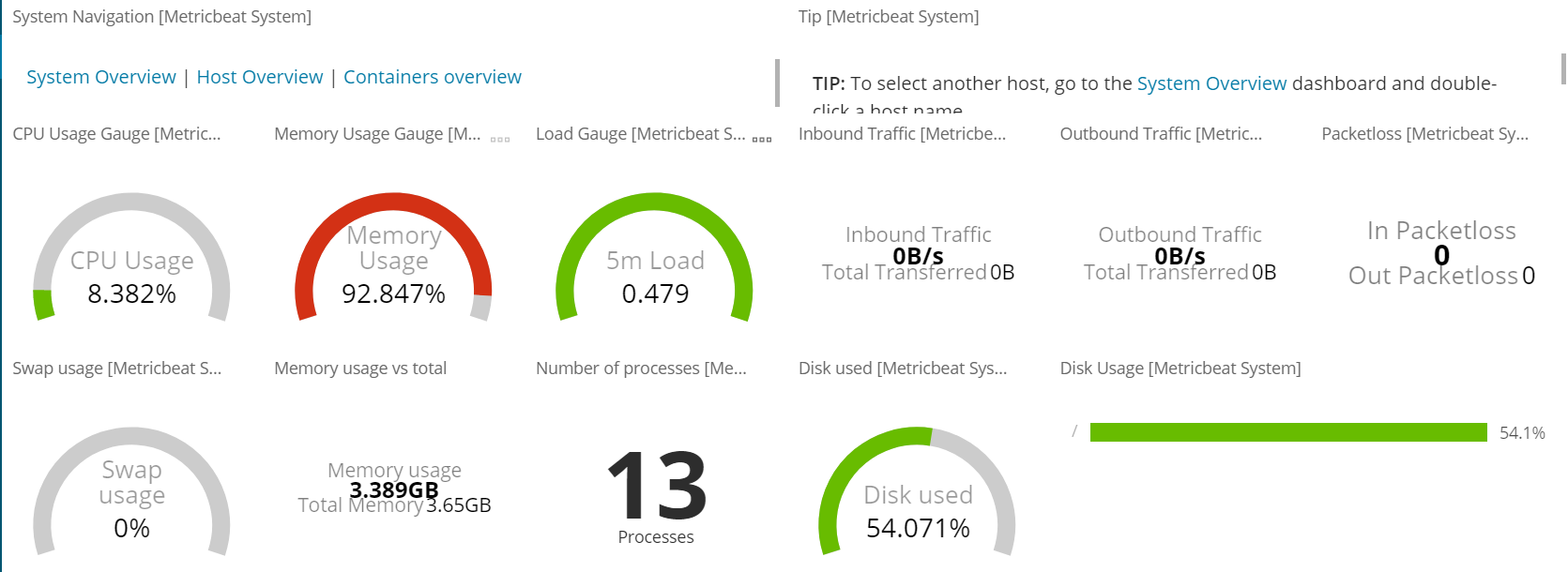

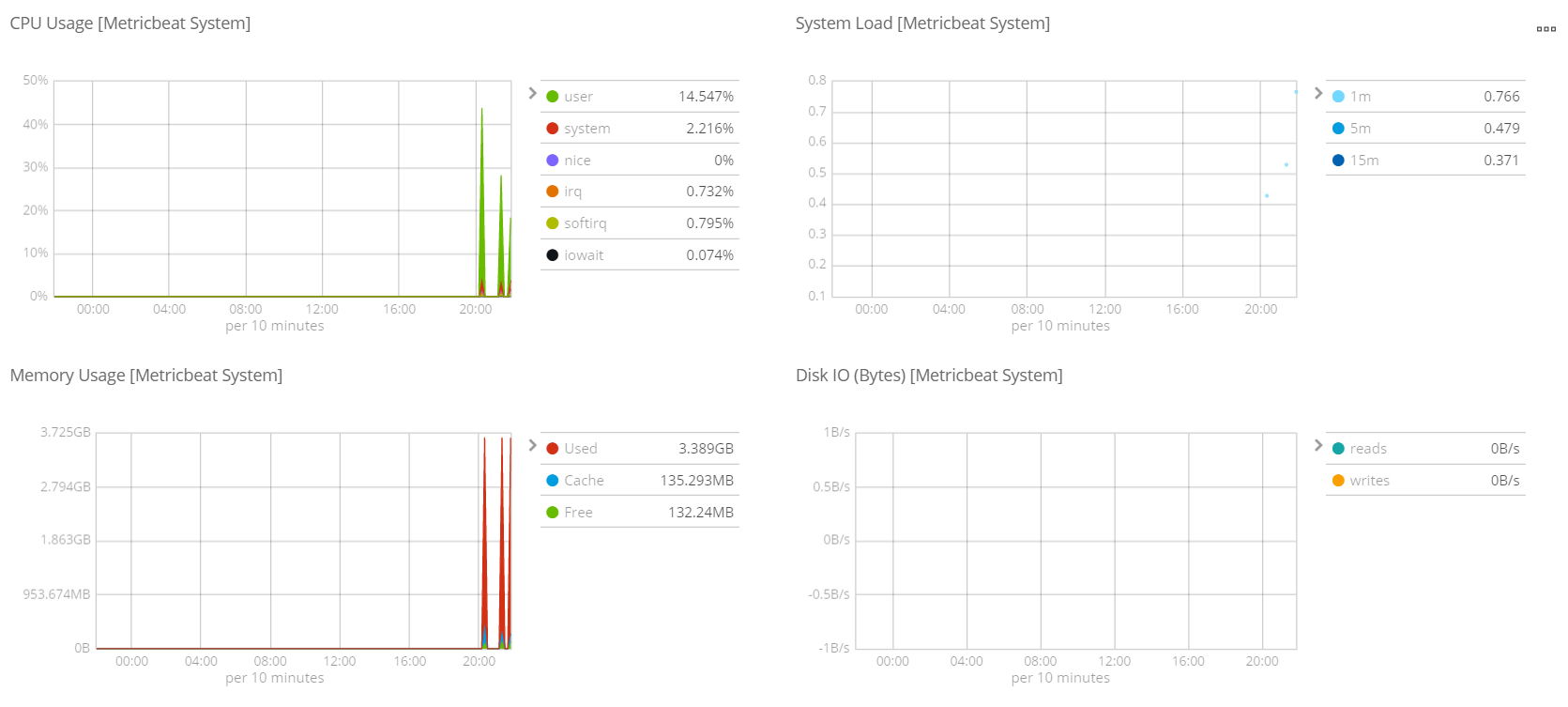

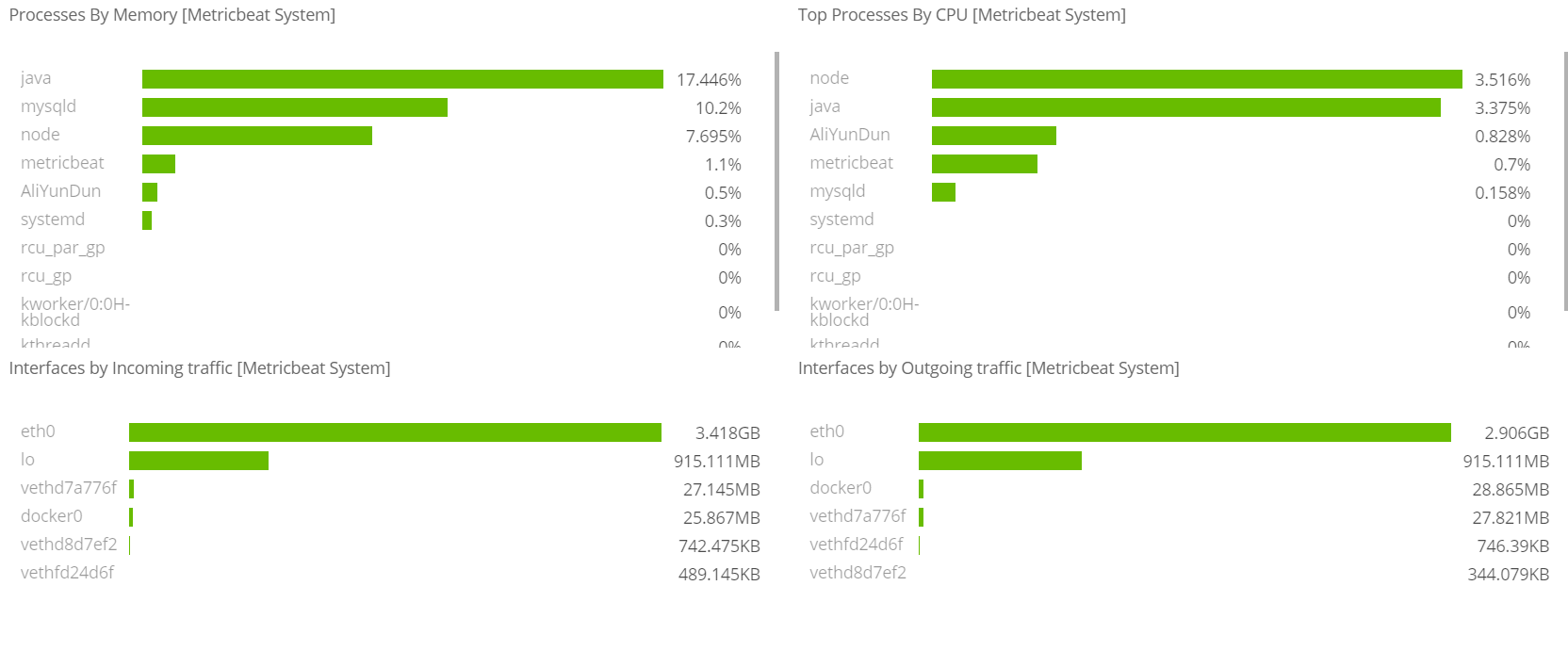

Metricbeat

- Periodically collects metrics from the operating system or from application services

- Stores them in Elasticsearch for real-time analysis

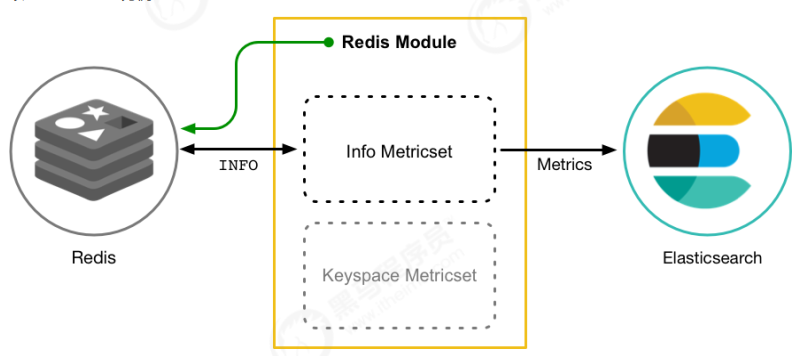

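Metricbeat components

Module

The object being collected from, for example MySQL, Redis, or the operating system

Metricset

A set of metrics to collect, for example cpu, memory, network

Taking the Redis module as an example: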

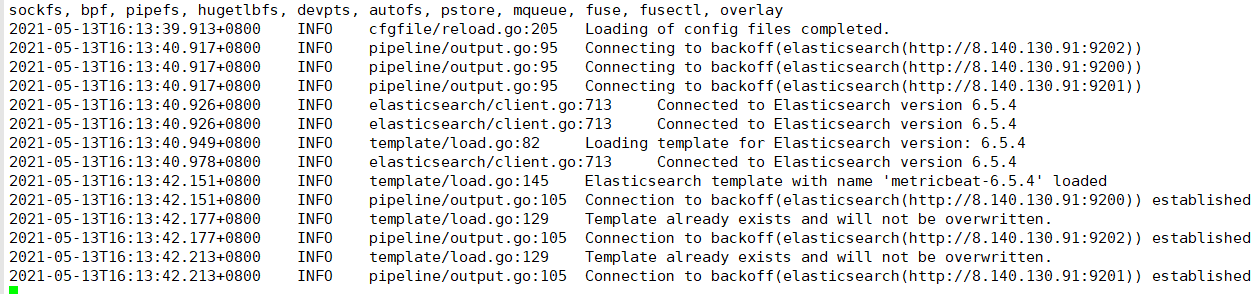

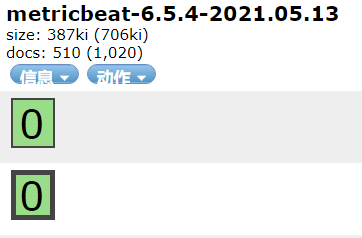
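The relationship between a module and its metricsets can be pictured as a nested mapping: a module groups several metricsets, and each metricset produces one event per collection cycle. The sketch below is a simplified model, not Metricbeat's implementation; the two metricset functions and the event layout are assumptions for illustration.

```python
import os
import time


def cpu_metricset():
    """Hypothetical metricset: number of logical CPUs."""
    return {"cores": os.cpu_count()}


def uptime_metricset():
    """Hypothetical metricset: seconds on a monotonic clock."""
    return {"monotonic_seconds": time.monotonic()}


# One module ("system") that groups two metricsets.
MODULES = {"system": {"cpu": cpu_metricset, "uptime": uptime_metricset}}


def collect(modules, enabled):
    """Run every enabled metricset once and emit one event per metricset."""
    events = []
    for module_name, metricset_names in enabled.items():
        for metricset_name in metricset_names:
            fetch = modules[module_name][metricset_name]
            events.append({
                "metricset": {"module": module_name, "name": metricset_name},
                module_name: {metricset_name: fetch()},
            })
    return events


events = collect(MODULES, {"system": ["cpu", "uptime"]})
```

A real deployment would repeat `collect` on a fixed period and ship the events to Elasticsearch instead of keeping them in a list.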

Deployment and collecting system metrics

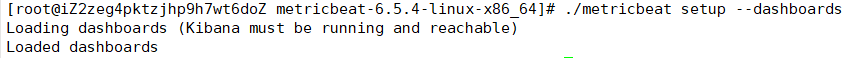

`tar -xvf metricbeat-6.5.4-linux-x86_64.tar.gz`

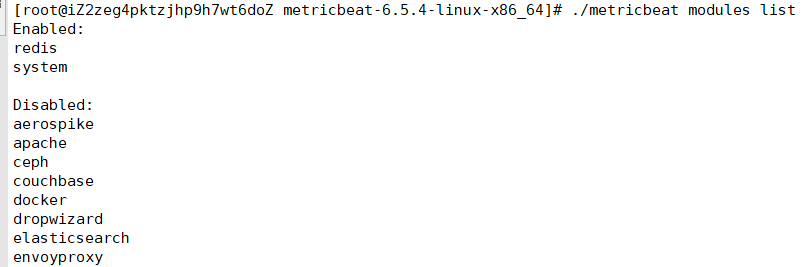

Module

System module configuration

`cd /haoke/beats/metricbeat-6.5.4-linux-x86_64/modules.d/`

Redis module

Enable the redis module

`{`

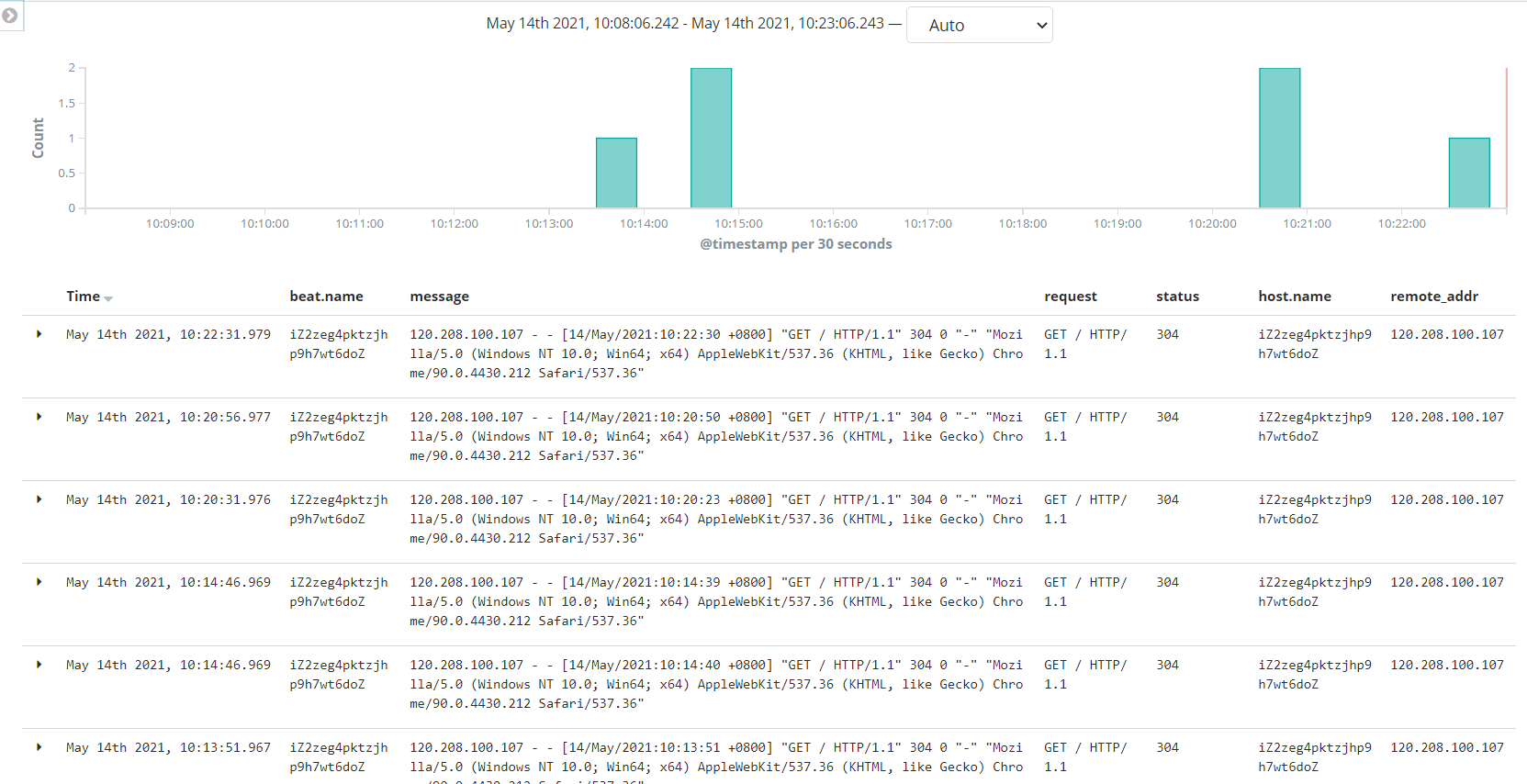

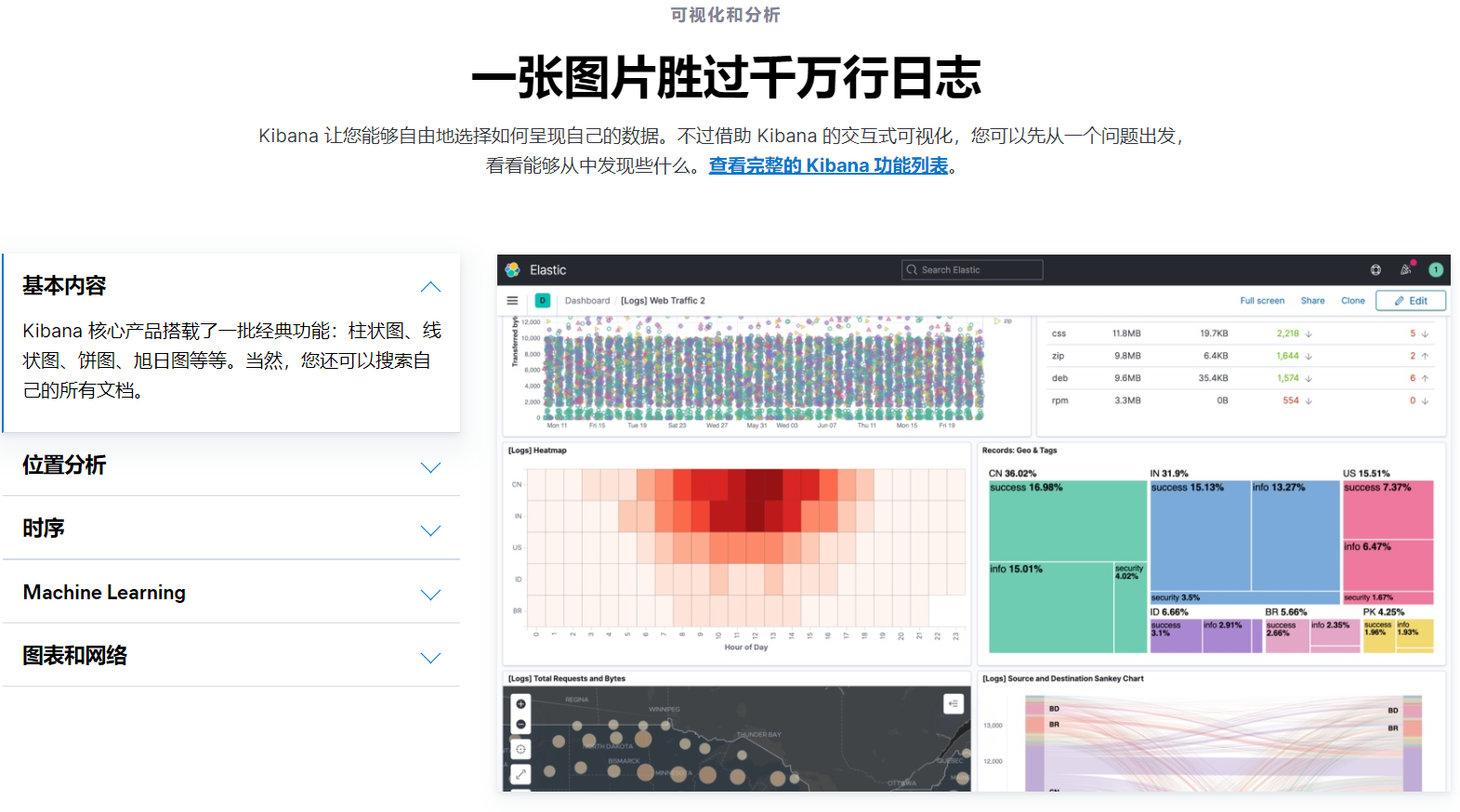

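Kibana: visualization

Deploying Kibana with Docker

`docker pull kibana:6.5.4`

Metricbeat dashboards

Modify the metricbeat.yml configuration

Filebeat dashboards

# Modify the filebeat.yml configuration file

The logs are too large, so this is not tested here.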

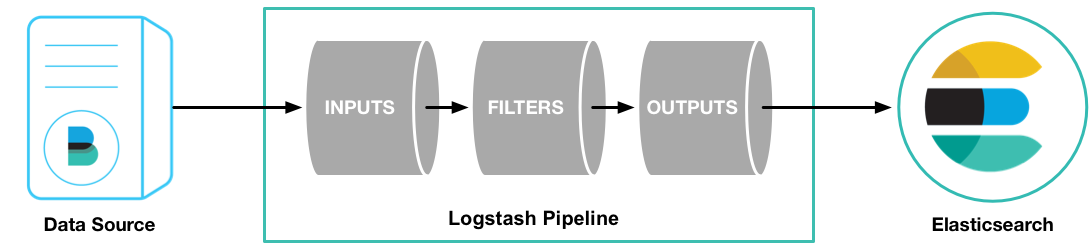

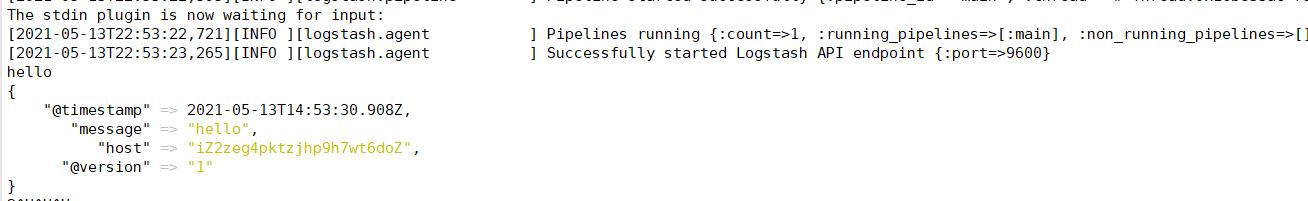

Logstash

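Deployment and installation

Check the JDK environment; version 1.8+ is required

Receiving logs from Filebeat

Integrate Filebeat with Logstash to read the nginx logs.

graph LR; Nginx-->|generates| B["log file"]; A["Filebeat"]-->|reads| B; A-->|sends to| Logstash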

Nginx generates the log file

Install Nginx

`apt install nginx -y`

Filebeat sends logs to Logstash

Configure Logstash: Logstash processes the logs

`cd /haoke/logstash`

Configure Filebeat: read the nginx logs

`cd /haoke`

Test

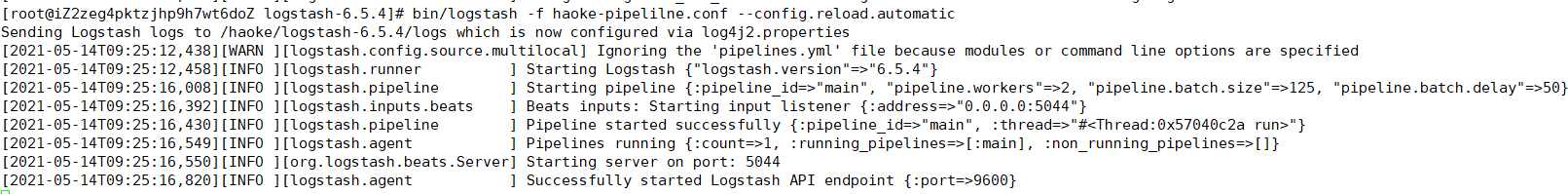

Start for real. --config.reload.automatic hot-reloads the configuration file, so no restart is needed after editing it

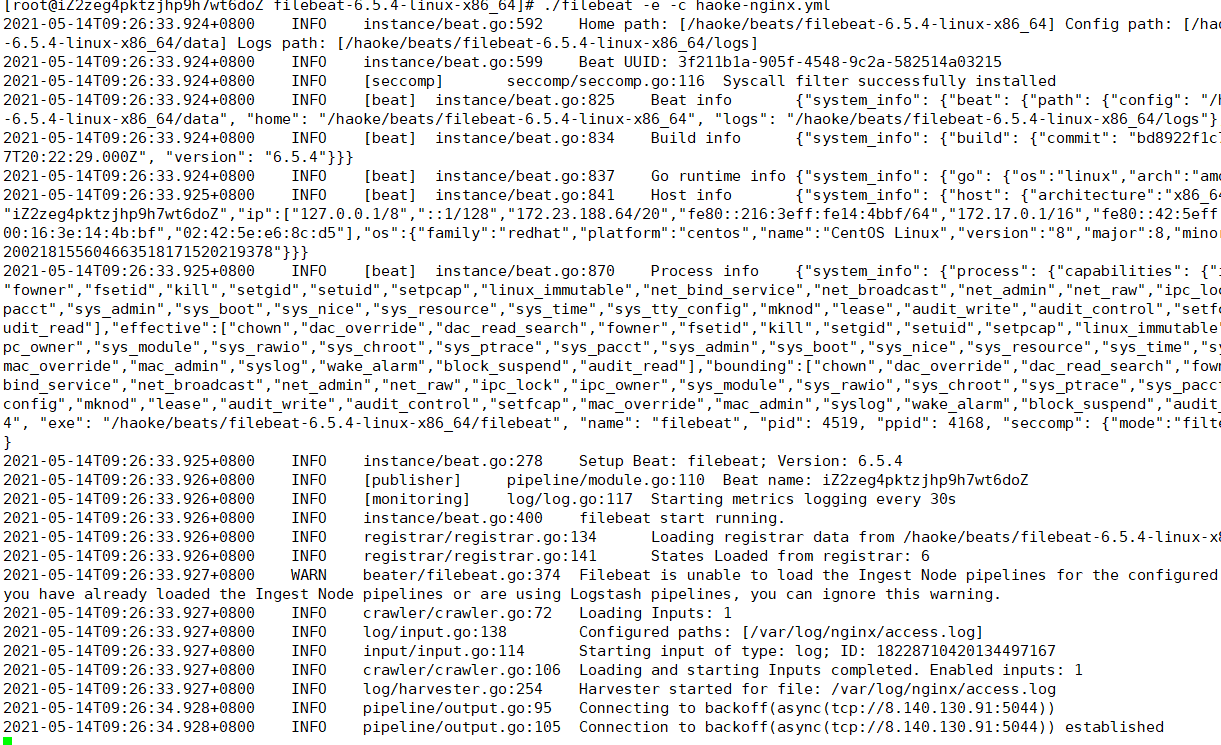

Start Filebeat to collect the nginx log data

Refresh the page

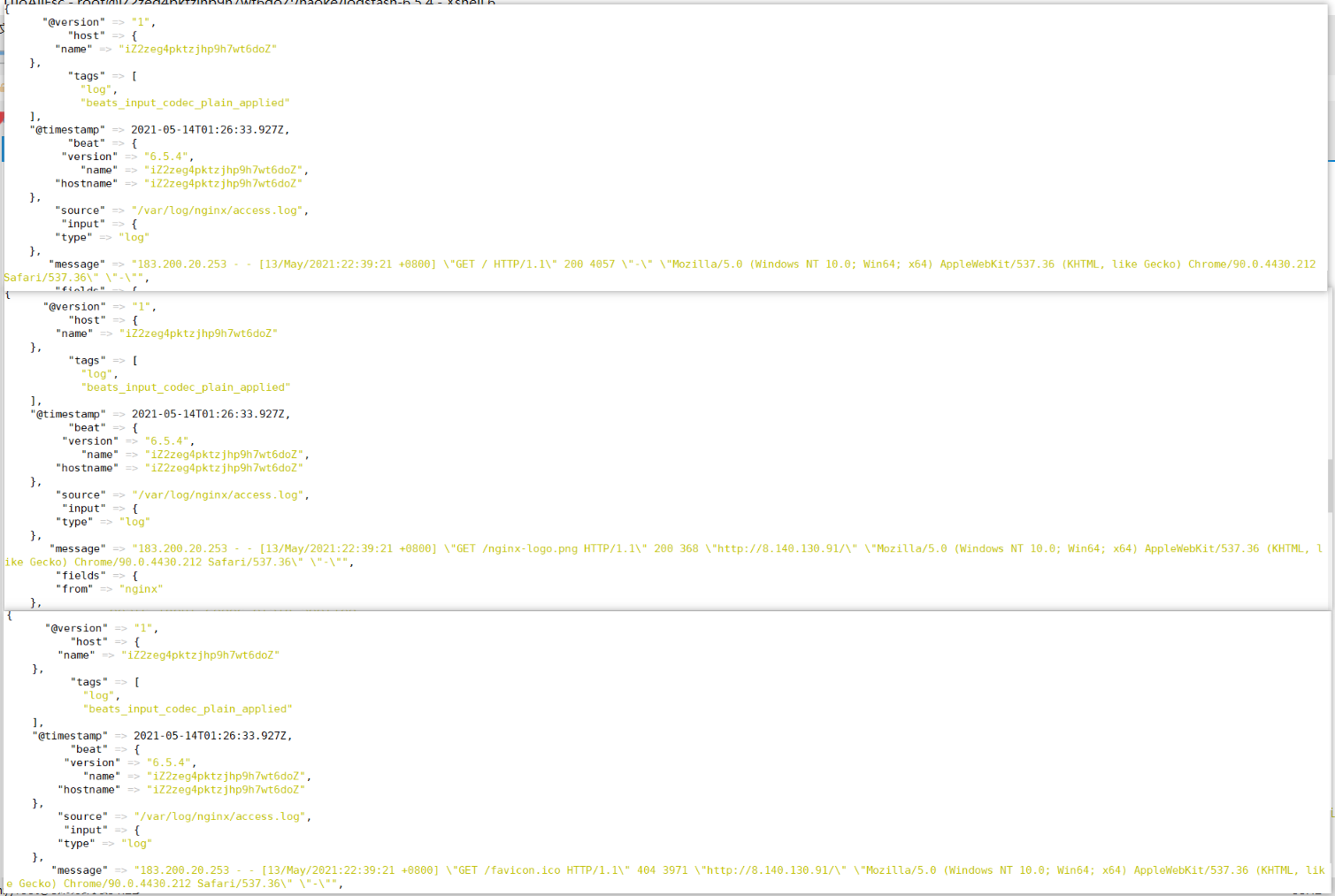

Check the output

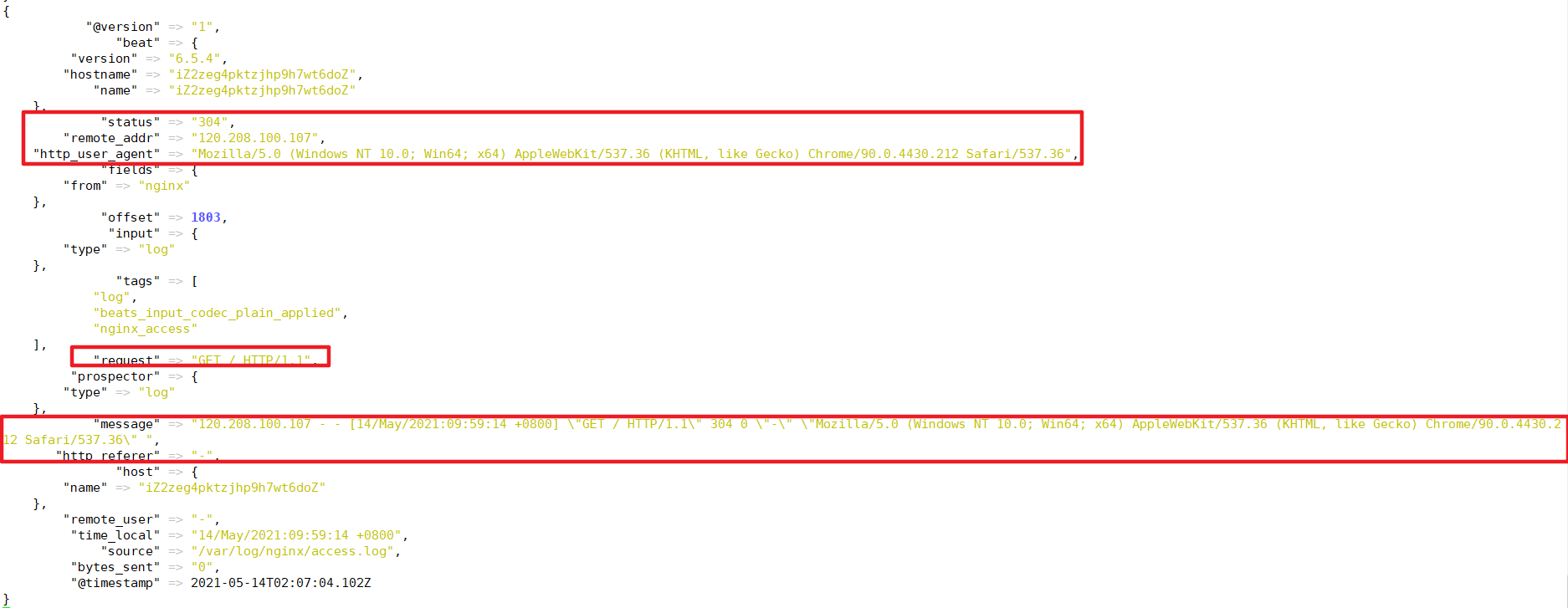

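Configuring the filter

The unprocessed messages are in a format that is hard to work with.

Customize the nginx log format

`vim /etc/nginx/nginx.conf`

The following problem appears: Redirecting to /bin/systemctl start nginx.service

Fix: install iptables

`yum install iptables-services`

Write the nginx-patterns file

`cd /haoke/logstash-6.5.4`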
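A patterns file for grok boils down to named regular expressions that split a raw log line into fields. The same idea can be tried out in Python with named groups. The custom log format used in these notes is not shown, so the sketch below assumes nginx's default `combined` format instead, and the sample line is made up.

```python
import re

# Named groups play the role of the field names in a grok pattern.
COMBINED = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_access_line(line):
    """Return the named fields of one access-log line, or None if no match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Convert the numeric fields so they can be aggregated later.
    fields["status"] = int(fields["status"])
    fields["body_bytes_sent"] = int(fields["body_bytes_sent"])
    return fields


# Made-up sample line in the default "combined" format.
sample = ('192.168.1.10 - - [10/Oct/2020:13:55:36 +0800] '
          '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.58.0"')
parsed = parse_access_line(sample)
```

Testing a pattern against a few real lines this way is a cheap check before putting the equivalent expression into the Logstash filter.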

Modify haoke-pipeline.conf

`input {`

Test

`bin/logstash -f haoke-pipeline.conf --config.reload.automatic`

Logstash sends data to Elasticsearch

`input{`

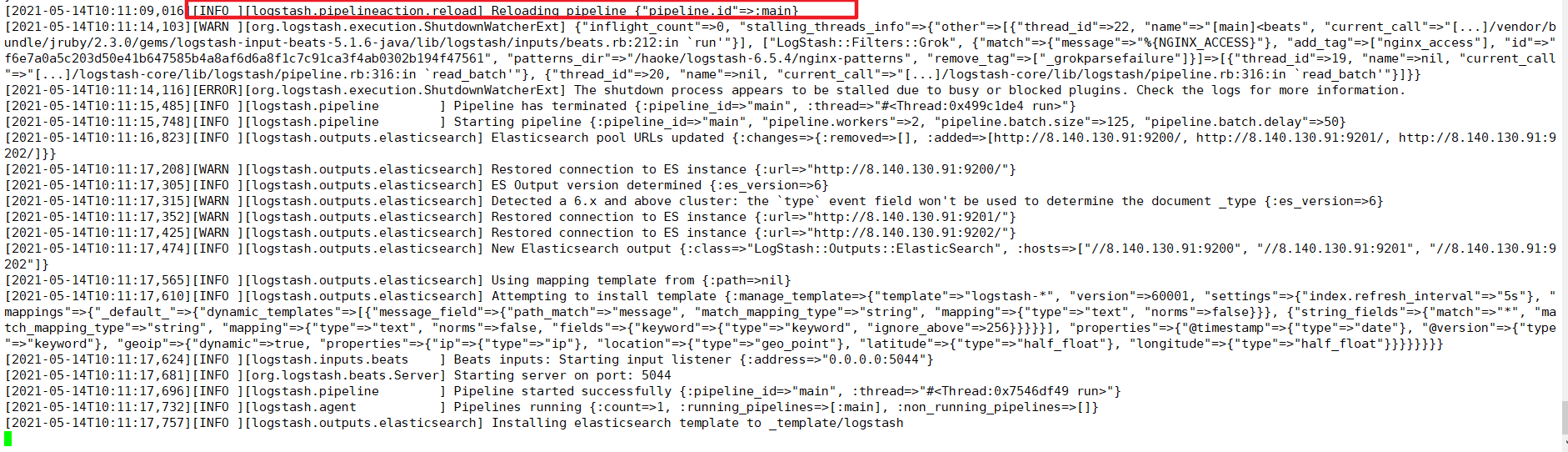

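Because Logstash was started with hot reloading, it reloads automatically after the configuration file is modified.

Start Filebeat to collect the nginx log data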