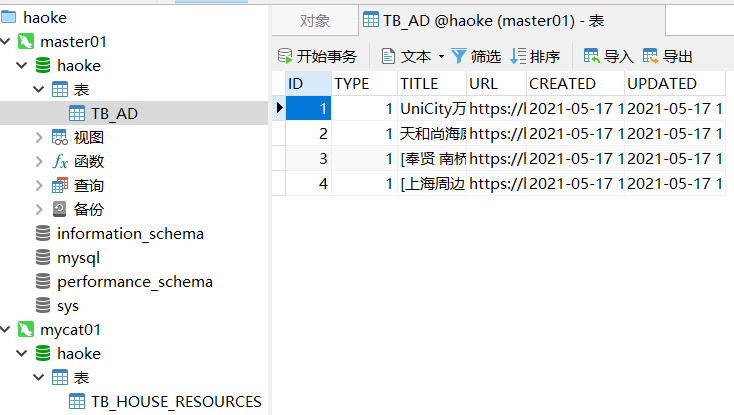

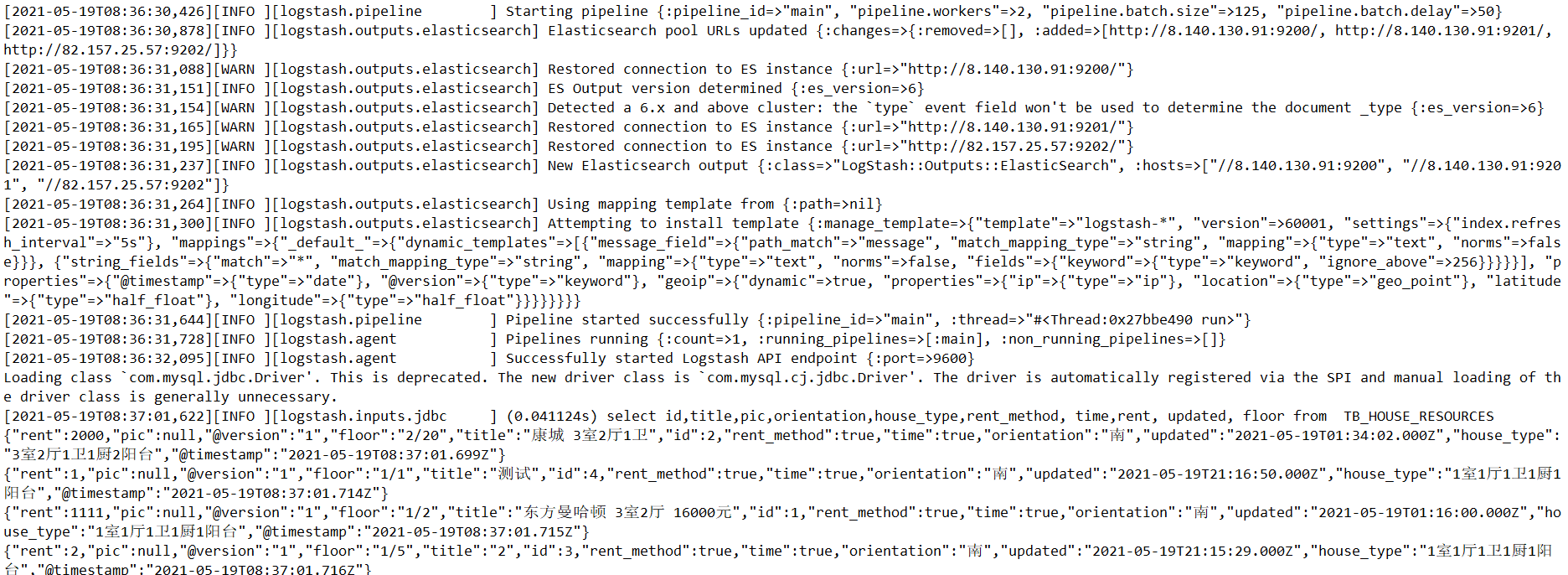

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('1', '东方曼哈顿 3室2厅 16000元', '1005', '2', '1', '1', '1111', '1', '1', '1室1厅1卫1厨1阳台', '2', '2', '1/2', '南', '1', '1,2,3,8,9', NULL, '这个经纪人很懒,没写核心卖点', '张三', '11111111111', '1', '11', '2018-11-16 01:16:00', '2018-11-16 01:16:00');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('2', '康城 3室2厅1卫', '1002', '1', '2', '3', '2000', '1', '2', '3室2厅1卫1厨2阳台', '100', '80', '2/20', '南', '1', '1,2,3,7,6', NULL, '拎包入住', '张三', '18888888888', '5', '1.5', '2018-11-16 01:34:02', '2018-11-16 01:34:02');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('3', '2', '1002', '2', '2', '2', '2', '1', '1', '1室1厅1卫1厨1阳台', '22', '11', '1/5', '南', '1', '1,2,3', NULL, '11', '22', '33', '1', '3', '2018-11-16 21:15:29', '2018-11-16 21:15:29');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('4', '11', '1002', '1', '1', '1', '1', '1', '1', '1室1厅1卫1厨1阳台', '11', '1', '1/1', '南', '1', '1,2,3', NULL, '11', '1', '1', '1', '1', '2018-11-16 21:16:50', '2018-11-16 21:16:50');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('5', '最新修改房源5', '1002', '1', '1', '1', '3000', '1', '1', '1室1厅1卫1厨1阳台', '80', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/12/04/15439353467987363.jpg,http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/12/04/15439354795233043.jpg', '11', '1', '1', '1', '1', '2018-11-16 21:17:02', '2018-12-04 23:05:19');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('6', '房源标题', '1002', '1', '1', '11', '1', '1', '1', '1室1厅1卫1厨1阳台', '11', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/16/15423743004743329.jpg,http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/16/15423743049233737.jpg', '11', '2', '2', '1', '1', '2018-11-16 21:18:41', '2018-11-16 21:18:41');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('7', '房源标题', '1002', '1', '1', '11', '1', '1', '1', '1室1厅1卫1厨1阳台', '11', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/16/15423743004743329.jpg,http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/16/15423743049233737.jpg', '11', '2', '2', '1', '1', '2018-11-16 21:18:41', '2018-11-16 21:18:41');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('8', '3333', '1002', '1', '1', '1', '1', '1', '1', '1室1厅1卫1厨1阳台', '1', '1', '1/1', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/17/15423896060254118.jpg,http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/17/15423896084306516.jpg', '1', '1', '1', '1', '1', '2018-11-17 01:33:35', '2018-12-06 10:22:20');

INSERT INTO `TB_HOUSE_RESOURCES` (`id`, `title`, `estate_id`, `building_num`, `building_unit`, `building_floor_num`, `rent`, `rent_method`, `payment_method`, `house_type`, `covered_area`, `use_area`, `floor`, `orientation`, `decoration`, `facilities`, `pic`, `house_desc`, `contact`, `mobile`, `time`, `property_cost`, `created`, `updated`)
VALUES ('9', '康城 精品房源2', '1002', '1', '2', '3', '1000', '1', '1', '1室1厅1卫1厨1阳台', '50', '40', '3/20', '南', '1', '1,2,3', 'http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/30/15435106627858721.jpg,http://itcast-haoke.oss-cn-qingdao.aliyuncs.com/images/2018/11/30/15435107119124432.jpg', '精品房源', '李四', '18888888888', '1', '1', '2018-11-21 18:31:35', '2018-11-30 00:58:46');