[TOC]

价值函数近似思路 价值函数将已知的 状态/动作 价值泛化到其他未知 状态/动作 价值上 ,用以表示连续的价值函数

基于价值函数近似的TD-learning,其目标函数

求损失的最小值

采用随机梯度下降法,求解损失最小值

而真实的价值函数 $V_{\pi}(s)$ 是未知的,所以在此算法中,需要将其代替

这样的故事线在数学上是不严谨的:

首先,最优化算法与目标函数并不对应;

其次,将 $r_{t+1}+\gamma\hat{V}(s_{t+1},w_t)$ 最为TD target 是否依然收敛

数学上可严谨证明:替换后收敛得到的 $w^*$ 是最小化 $J_{PBE}$ 的解

真实的状态价值误差

基于贝尔曼方程的状态价值误差

用 $\hat{\mathbf{V}}_{\pi}(S,w)$ 去近似 $\mathbf{V}_{\pi}(S)$ ,理论上 $\hat{\mathbf{V}}_{\pi}(S,w)$ 也会满足贝尔曼方程,即价值近似函数最优 $J(w)=0$ 时,价值近似函数的贝尔曼方程成立

基于贝尔曼方程的状态价值投影误差

用 $\hat{V}$ 去近似价值函数,由于函数结构的限制, $T_{\pi}\left(\hat{\mathbf{V}}_{\pi}(S’,w)\right)$ 与 $\hat{\mathbf{V}}_{\pi}(S,w)$ 可能永远都不能相等

其中 $\mathbf{M}$ 为投影矩阵,将 $T_{\pi}\left(\hat{\mathbf{V}}_{\pi}(S’,w)\right)$ 投影到以 $w$ 为参数的 $\hat{V}_{\pi}(w)$ 组成的空间,此时误差可能为0

所以上述求解算法实质上是 基于贝尔曼方程的状态价值投影误差 的随机梯度下降法

Sarsa+价值函数近似 Q-learning+价值函数近似 原始DQN 将价值函数变为神经网络

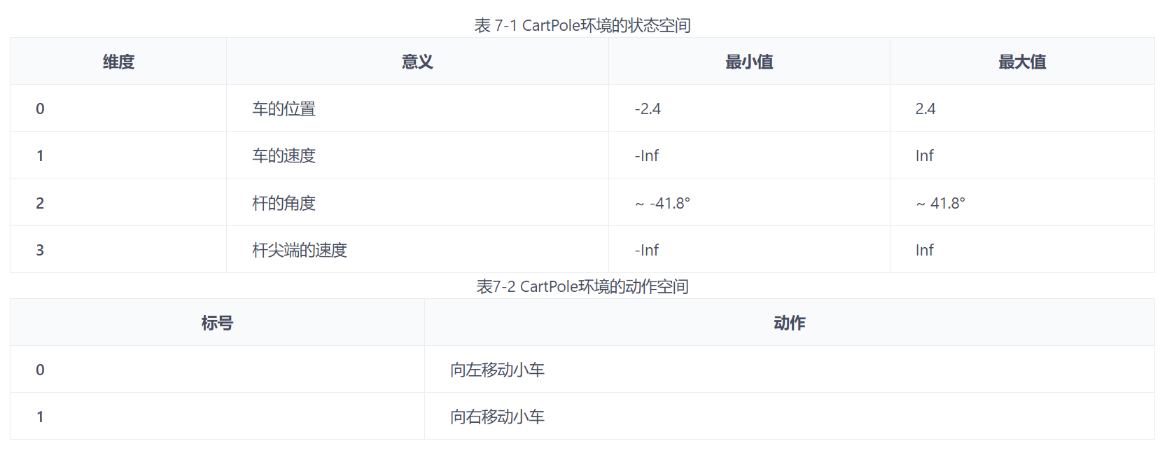

车-杆环境 状态值就是连续的,动作值是离散的

智能体的任务是通过左右移动保持车上的杆竖直,若杆的倾斜度数过大,或者车子离初始位置左右的偏离程度过大,或者坚持时间到达 200 帧,则游戏结束

在游戏中每坚持一帧,智能体能获得分数为 1 的奖励

状态空间与动作空间:

实现 1 2 3 4 5 6 7 8 9 10 import randomimport gymimport numpy as npimport collectionsfrom tqdm import tqdmimport torchimport torch.nn.functional as Fimport matplotlib.pyplot as pltimport rl_utilsfrom typing import Union

经验回放池 加入经验、采样经验

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 class ReplayBuffer : ''' 经验回放池 ''' def __init__ (self, capacity:int ): self.buffer = collections.deque(maxlen=capacity) def add (self, state:np.ndarray, action:int , reward:float , next_state:np.ndarray, done:bool ): self.buffer.append((state, action, reward, next_state, done)) def sample (self, batch_size:int ): transitions = random.sample(self.buffer, batch_size) state, action, reward, next_state, done = zip (*transitions) return np.array(state), action, reward, np.array(next_state), done def size (self ) -> int : return len (self.buffer)

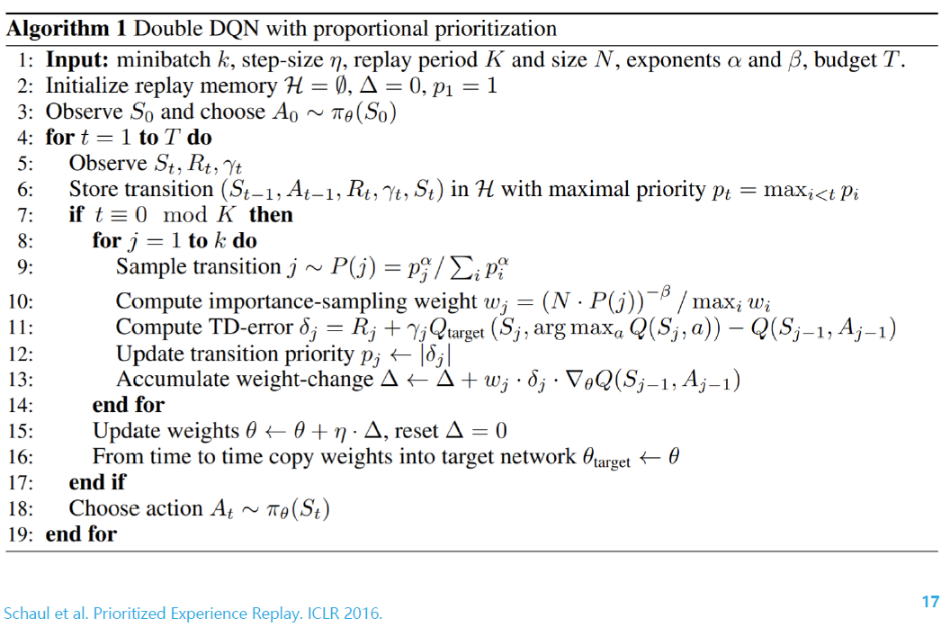

优先经验回放 衡量标准

以 $Q$ 函数的值与TD目标的差异来衡量学习到的价值

为使各样本都有机会被采样,经验存储为 $e_t=<s,a,s’,r_{t+1},p_t+\epsilon>$

经验 $e_t$ 被选中概率为 $P(e_t)=\frac{p_t^\alpha}{\sum\limits_{k}p_k^\alpha}$

重要性采样:权重为 $w_t=\frac{\left(N\times P(t)\right)^{-\beta}}{\max\limits_{i}w_i}$

https://github.com/ameet-1997/Prioritized_Experience_Replay

https://arxiv.org/pdf/1511.05952v4

Q网络 给定状态 $s$ ,输出该状态下每个动作的动作价值

1 2 3 4 5 6 7 8 9 10 11 12 13 class Qnet (torch.nn.Module): ''' 只有一层隐藏层的Q网络 输入:状态 输出:该状态下各个动作的动作价值 ''' def __init__ (self, state_dim:int , hidden_dim:int , action_dim:int ): super (Qnet, self).__init__() self.fc1 = torch.nn.Linear(state_dim, hidden_dim) self.fc2 = torch.nn.Linear(hidden_dim, action_dim) def forward (self, x:torch.Tensor ) -> torch.Tensor: x = F.relu(self.fc1(x)) return self.fc2(x)

DQN 初始化:一个Q网络,一个目标Q网络,一些超参数 动作生成函数 价值网络更新 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 class DQN : ''' DQN算法 ''' def __init__ (self, state_dim: int , hidden_dim: int , action_dim: int , learning_rate: float , gamma: float , epsilon: float , target_update: int , device: torch.device ): self.action_dim = action_dim self.q_net = Qnet(state_dim, hidden_dim, self.action_dim).to(device) self.target_q_net = Qnet(state_dim, hidden_dim, self.action_dim).to(device) self.optimizer = torch.optim.Adam(self.q_net.parameters(), lr=learning_rate) self.gamma = gamma self.epsilon = epsilon self.target_update = target_update self.count = 0 self.device = device def take_action (self, state ) -> int : if np.random.random() < self.epsilon: action = np.random.randint(self.action_dim) else : state = torch.tensor(np.array([state]), dtype=torch.float ).to(self.device) action = self.q_net(state).argmax().item() return action def update (self, transition_dict: dict ): states = torch.tensor(transition_dict['states' ], dtype=torch.float ).to(self.device) actions = torch.tensor(transition_dict['actions' ]).view(-1 , 1 ).to(self.device) rewards = torch.tensor(transition_dict['rewards' ], dtype=torch.float ).view(-1 , 1 ).to(self.device) next_states = torch.tensor(transition_dict['next_states' ], dtype=torch.float ).to(self.device) dones = torch.tensor(transition_dict['dones' ], dtype=torch.float ).view(-1 , 1 ).to(self.device) q_values = self.q_net(states).gather(1 , actions) ''' tensor([[1, 2], [4, 2]]) b.max(1) torch.return_types.max( values=tensor([2, 4]), indices=tensor([1, 0])) ''' max_next_q_values = self.target_q_net(next_states).max (1 )[0 ].view(-1 , 1 ) q_targets = rewards + self.gamma * max_next_q_values * (1 - dones) dqn_loss = torch.mean(F.mse_loss(q_values, q_targets)) self.optimizer.zero_grad() dqn_loss.backward() self.optimizer.step() if self.count % self.target_update == 0 : self.target_q_net.load_state_dict(self.q_net.state_dict()) self.count += 1

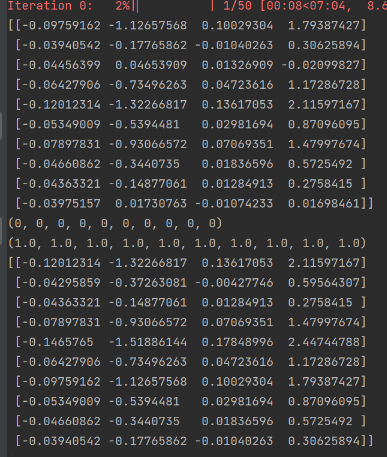

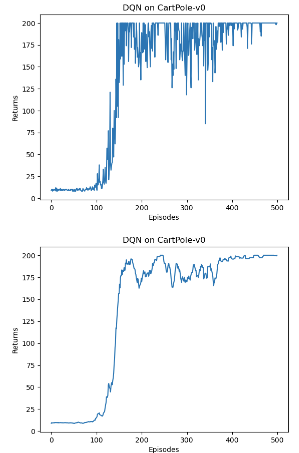

训练过程 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 lr = 2e-3 num_episodes = 500 hidden_dim = 128 gamma = 0.98 epsilon = 0.01 target_update = 10 buffer_size = 10000 minimal_size = 500 batch_size = 64 device = torch.device("cuda" ) if torch.cuda.is_available() else torch.device("cpu" ) env_name = 'CartPole-v0' env = gym.make(env_name) random.seed(0 ) np.random.seed(0 ) env.seed(0 ) torch.manual_seed(0 ) replay_buffer = ReplayBuffer(buffer_size) state_dim = env.observation_space.shape[0 ] action_dim = env.action_space.n agent = DQN(state_dim, hidden_dim, action_dim, lr, gamma, epsilon,target_update, device) return_list = [] for i in range (10 ): with tqdm(total=int (num_episodes / 10 ), desc='Iteration %d' % i) as pbar: for i_episode in range (int (num_episodes / 10 )): episode_return = 0 state = env.reset() done = False while not done: action = agent.take_action(state) next_state, reward, done, _ = env.step(action) replay_buffer.add(state, action, reward, next_state, done) state = next_state episode_return += reward if replay_buffer.size() > minimal_size: b_s, b_a, b_r, b_ns, b_d = replay_buffer.sample(batch_size) print (b_s,b_a,b_r,b_ns,b_d) transition_dict = { 'states' : b_s, 'actions' : b_a, 'next_states' : b_ns, 'rewards' : b_r, 'dones' : b_d } agent.update(transition_dict) return_list.append(episode_return) if (i_episode + 1 ) % 10 == 0 : pbar.set_postfix({ 'episode' : '%d' % (num_episodes / 10 * i + i_episode + 1 ), 'return' : '%.3f' % np.mean(return_list[-10 :]) }) pbar.update(1 )

可视化 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 import osos.environ["KMP_DUPLICATE_LIB_OK" ]="TRUE" episodes_list = list (range (len (return_list))) plt.plot(episodes_list, return_list) plt.xlabel('Episodes' ) plt.ylabel('Returns' ) plt.title('DQN on {}' .format (env_name)) plt.show() mv_return = rl_utils.moving_average(return_list, 9 ) plt.plot(episodes_list, mv_return) plt.xlabel('Episodes' ) plt.ylabel('Returns' ) plt.title('DQN on {}' .format (env_name)) plt.show()

Double DQN 普通DQN通常会导致对Q值的过高估计

传统DQN的TD目标为

因此,每次得到的都是Q网络估计的最大值,在这种更新方式下,会将误差正向累积

假设在状态 $s’$ 下所有动作的Q值为0,$Q(s’,a),a\in \mathcal{A}(s’)$ ,此时正确的TD目标为 $r’+0=r’$

但由于Q网络拟合时会存在误差,即存在某个动作 $a’$ 有 $Q(s’,a’)>0$ ,TD误差变为 $r’+\gamma Q(s’,a’)>r’$ ,因此用TD目标更新 $Q(s,a)$ 时也被高估了

这种Q值的高估会逐步累积。当动作空间较大的任务,DQN的高估问题会非常严重,造成DQN无法有效工作

Double的做法是用训练网络的Q值选择目标网络在 $s’$ 的动作

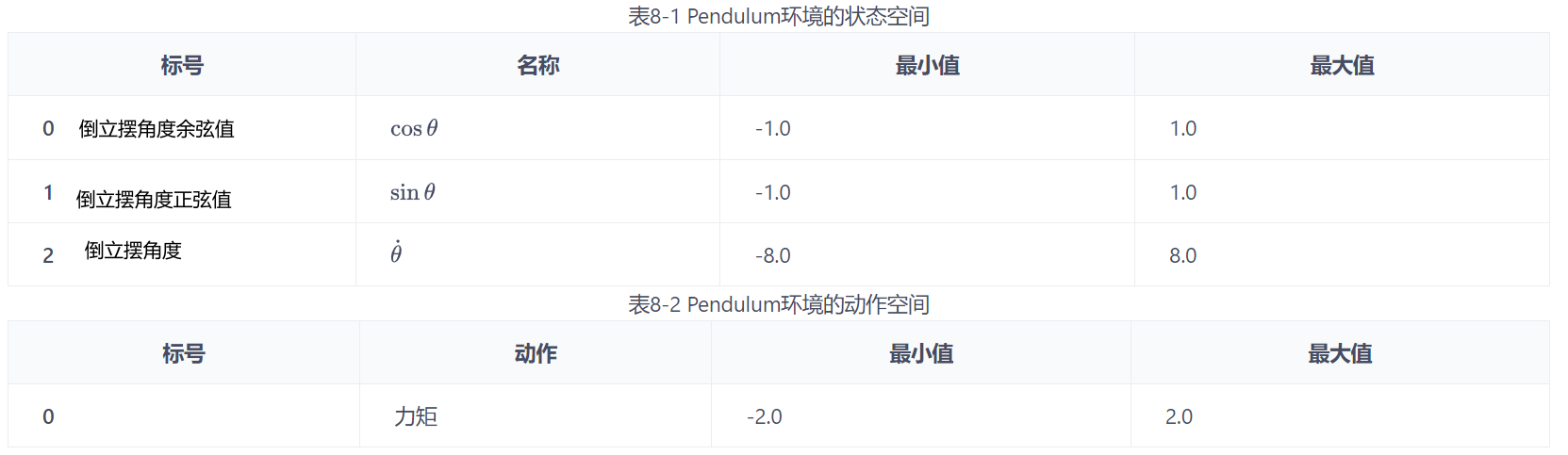

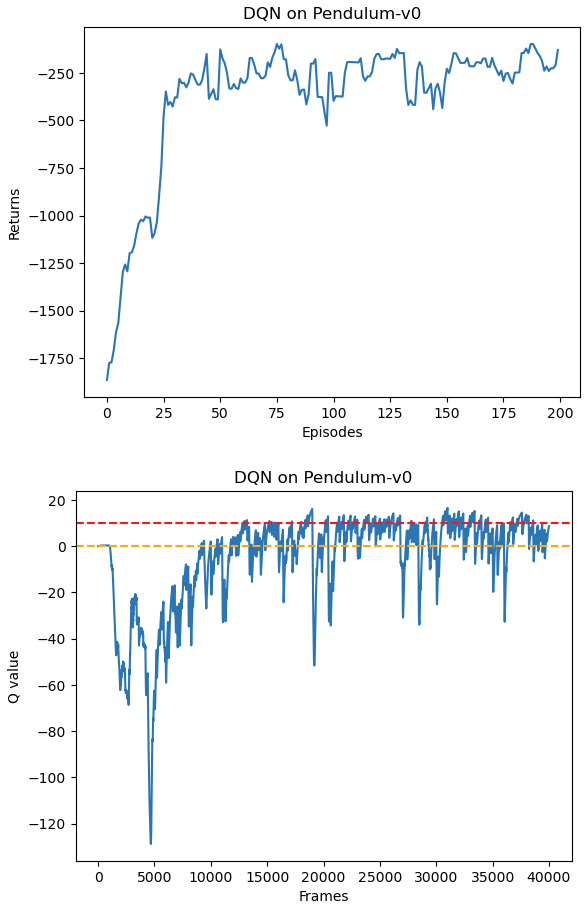

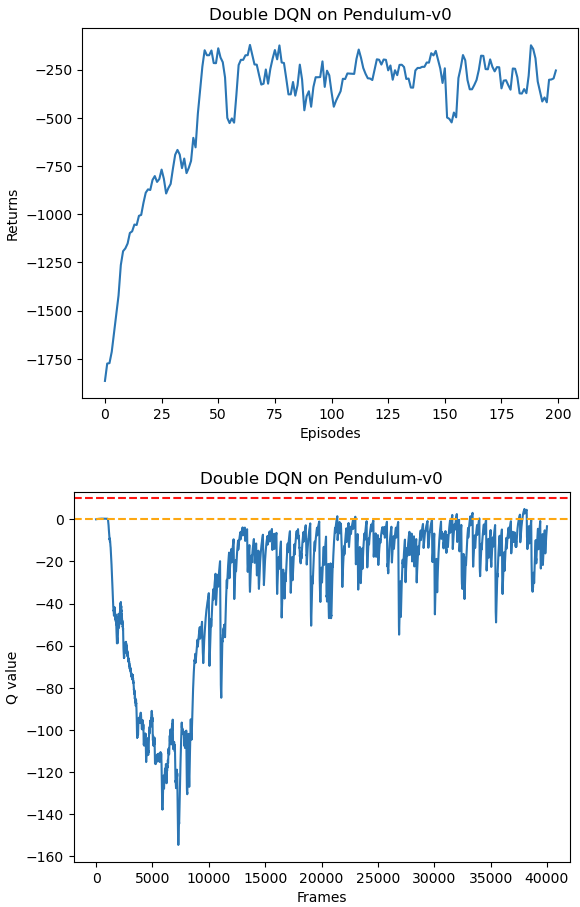

环境 倒立摆

动作为对倒立摆施加的力矩

每一步都会根据当前倒立摆的状态的好坏给予智能体不同的奖励,该环境的奖励函数为 $-(\theta^2+0.1\theta^2+0.001a^2)$

倒立摆向上保持直立不动时奖励为 0,倒立摆在其他位置时奖励为负数

倒立摆环境可以比较方便的验证DQN对Q值的过高估计:对Q值的最大估计应该是0,当Q值大于0的情况则说明出现了高估

DQN只能处理离散环境,所以需要对力矩离散化 1 2 3 4 5 6 action_dim = 11 def dis_to_con (discrete_action:int , env:gym.Env, action_dim:int ): action_lowbound = env.action_space.low[0 ] action_upbound = env.action_space.high[0 ] return action_lowbound + (discrete_action / (action_dim - 1 )) * (action_upbound - action_lowbound)

Q网络 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 import randomimport gymimport numpy as npimport torchimport torch.nn.functional as Fimport matplotlib.pyplot as pltimport rl_utilsfrom tqdm import tqdmclass Qnet (torch.nn.Module): ''' 只有一层隐藏层的Q网络 ''' def __init__ (self, state_dim:int , hidden_dim:int , action_dim:int ): super (Qnet, self).__init__() self.fc1 = torch.nn.Linear(state_dim, hidden_dim) self.fc2 = torch.nn.Linear(hidden_dim, action_dim) def forward (self, x:torch.Tensor ) -> torch.Tensor: x = F.relu(self.fc1(x)) return self.fc2(x)

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 class DQN : ''' DQN算法,包括Double DQN ''' def __init__ (self, state_dim:int , hidden_dim:int , action_dim:int , learning_rate:float , gamma:float , epsilon:float , target_update:int , device:torch.device, dqn_type:str ='VanillaDQN' ): self.action_dim = action_dim self.q_net = Qnet(state_dim, hidden_dim, self.action_dim).to(device) self.target_q_net = Qnet(state_dim, hidden_dim, self.action_dim).to(device) self.optimizer = torch.optim.Adam(self.q_net.parameters(), lr=learning_rate) self.gamma = gamma self.epsilon = epsilon self.target_update = target_update self.count = 0 self.dqn_type = dqn_type self.device = device def take_action (self, state ) -> int : if np.random.random() < self.epsilon: action = np.random.randint(self.action_dim) else : state = torch.tensor(np.array([state]), dtype=torch.float ).to(self.device) action = self.q_net(state).argmax().item() return action def max_q_value (self, state ) -> int : state = torch.tensor(np.array([state]), dtype=torch.float ).to(self.device) return self.q_net(state).max ().item() def update (self, transition_dict:dict ): states = torch.tensor(transition_dict['states' ], dtype=torch.float ).to(self.device) actions = torch.tensor(transition_dict['actions' ]).view(-1 , 1 ).to( self.device) rewards = torch.tensor(transition_dict['rewards' ], dtype=torch.float ).view(-1 , 1 ).to(self.device) next_states = torch.tensor(transition_dict['next_states' ], dtype=torch.float ).to(self.device) dones = torch.tensor(transition_dict['dones' ], dtype=torch.float ).view(-1 , 1 ).to(self.device) q_values = self.q_net(states).gather(1 , actions) if self.dqn_type == 'DoubleDQN' : ''' tensor([[1, 2], [4, 2]]) b.max(1) torch.return_types.max( values=tensor([2, 4]), indices=tensor([1, 0])) b.max(1)返回最大Q值对应的动作 ''' max_action = self.q_net(next_states).max (1 )[1 ].view(-1 , 1 ) max_next_q_values = self.target_q_net(next_states).gather(1 , max_action) else : max_next_q_values = self.target_q_net(next_states).max (1 )[0 ].view(-1 , 1 ) q_targets = rewards + self.gamma * max_next_q_values * (1 - dones) dqn_loss = torch.mean(F.mse_loss(q_values, q_targets)) self.optimizer.zero_grad() dqn_loss.backward() self.optimizer.step() if self.count % self.target_update == 0 : self.target_q_net.load_state_dict(self.q_net.state_dict()) self.count += 1

DQN训练过程记录每个状态下最大Q值 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 def train_DQN (agent:DQN, env:gym.Env, num_episodes:int , replay_buffer:rl_utils.ReplayBuffer, minimal_size:int , batch_size:int ): return_list = [] max_q_value_list = [] max_q_value = 0 for i in range (10 ): with tqdm(total=int (num_episodes / 10 ), desc='Iteration %d' % i) as pbar: for i_episode in range (int (num_episodes / 10 )): episode_return = 0 state = env.reset() done = False while not done: action = agent.take_action(state) max_q_value = agent.max_q_value(state) * 0.005 + max_q_value * 0.995 max_q_value_list.append(max_q_value) action_continuous = dis_to_con(action, env, agent.action_dim) next_state, reward, done, _ = env.step([action_continuous]) replay_buffer.add(state, action, reward, next_state, done) state = next_state episode_return += reward if replay_buffer.size() > minimal_size: b_s, b_a, b_r, b_ns, b_d = replay_buffer.sample( batch_size) transition_dict = { 'states' : b_s, 'actions' : b_a, 'next_states' : b_ns, 'rewards' : b_r, 'dones' : b_d } agent.update(transition_dict) return_list.append(episode_return) if (i_episode + 1 ) % 10 == 0 : pbar.set_postfix({ 'episode' : '%d' % (num_episodes / 10 * i + i_episode + 1 ), 'return' : '%.3f' % np.mean(return_list[-10 :]) }) pbar.update(1 ) return return_list, max_q_value_list

训练 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 lr = 1e-2 num_episodes = 200 hidden_dim = 128 gamma = 0.98 epsilon = 0.01 target_update = 50 buffer_size = 5000 minimal_size = 1000 batch_size = 64 device = torch.device("cuda" ) if torch.cuda.is_available() else torch.device("cpu" ) env_name = 'Pendulum-v0' env = gym.make(env_name) state_dim = env.observation_space.shape[0 ] action_dim = 11

DQN结果 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 random.seed(0 ) np.random.seed(0 ) env.seed(0 ) torch.manual_seed(0 ) replay_buffer = rl_utils.ReplayBuffer(buffer_size) agent = DQN(state_dim, hidden_dim, action_dim, lr, gamma, epsilon, target_update, device) return_list, max_q_value_list = train_DQN(agent, env, num_episodes, replay_buffer, minimal_size, batch_size) episodes_list = list (range (len (return_list))) mv_return = rl_utils.moving_average(return_list, 5 ) plt.plot(episodes_list, mv_return) plt.xlabel('Episodes' ) plt.ylabel('Returns' ) plt.title('DQN on {}' .format (env_name)) plt.show() frames_list = list (range (len (max_q_value_list))) plt.plot(frames_list, max_q_value_list) plt.axhline(0 , c='orange' , ls='--' ) plt.axhline(10 , c='red' , ls='--' ) plt.xlabel('Frames' ) plt.ylabel('Q value' ) plt.title('DQN on {}' .format (env_name)) plt.show()

DQN训练结果:累积回报与最大Q值

Double DQN结果 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 random.seed(0 ) np.random.seed(0 ) env.seed(0 ) torch.manual_seed(0 ) replay_buffer = rl_utils.ReplayBuffer(buffer_size) agent = DQN(state_dim, hidden_dim, action_dim, lr, gamma, epsilon, target_update, device, 'DoubleDQN' ) return_list, max_q_value_list = train_DQN(agent, env, num_episodes, replay_buffer, minimal_size, batch_size) episodes_list = list (range (len (return_list))) mv_return = rl_utils.moving_average(return_list, 5 ) plt.plot(episodes_list, mv_return) plt.xlabel('Episodes' ) plt.ylabel('Returns' ) plt.title('Double DQN on {}' .format (env_name)) plt.show() frames_list = list (range (len (max_q_value_list))) plt.plot(frames_list, max_q_value_list) plt.axhline(0 , c='orange' , ls='--' ) plt.axhline(10 , c='red' , ls='--' ) plt.xlabel('Frames' ) plt.ylabel('Q value' ) plt.title('Double DQN on {}' .format (env_name)) plt.show()

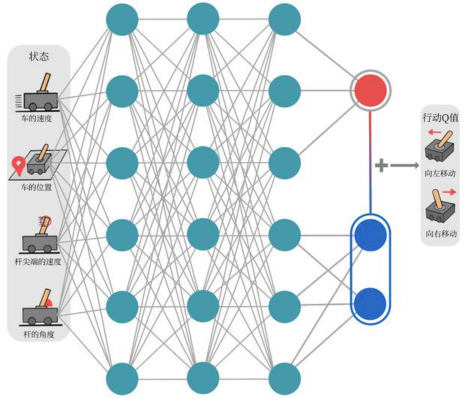

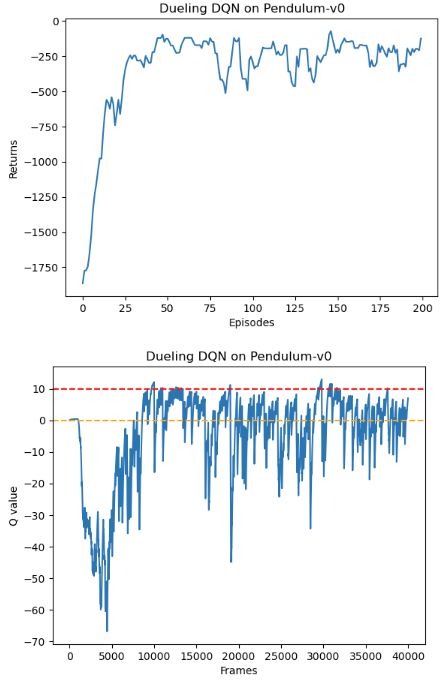

Dueling DQN 在RL中,优势函数定义为 $A(s,a)=Q(s,a)-V(s)$

在同一状态下,所有动作的优势值和为0,因此在Dueling DQN中,Q函数被建模为

$\eta$ 为状态价值函数和优势函数共享的参数,在神经网络中,用于提取特征的前几层 $\alpha$ 和 $\beta$ 分别表示状态价值和优势函数的参数 我们不再让神经网络直接输出 Q 值,在最后一层的两个分支,分别输出状态价值函数和优势函数,再求和得到Q值

在Dueling DQN中,存在对于V函数和A函数建模不唯一问题

对于同样的Q值,若V值加上任意大小的常数C,所有A值减去C,则Q值不变,但其余的Q’值就不等于其真实Q’值 为解决这一问题,强制最优动作的优势函数输出为0

在实现过程中,也可以用平均代替最大

此时,$V(s)=\frac{1}{\vert \mathcal{A}(s)\vert}\sum\limits_{a} Q(s,a)$ ,虽然不再满足贝尔曼最优方程,但实际更稳定

每次更新,V函数都会被更新,也会影响到其他动作的Q值,传统DQN值更新某个动作的Q值,其他动作的Q值不会被更新。换句话说,Dueling DQN能更加频繁、准确地学习状态价值函数,由收缩映射求解BOE过程可知,越早更新,收敛越快

实现 1 2 3 4 5 6 7 8 9 10 11 12 13 class VAnet (torch.nn.Module): ''' 只有一层隐藏层的A网络和V网络 ''' def __init__ (self, state_dim, hidden_dim, action_dim ): super (VAnet, self).__init__() self.fc1 = torch.nn.Linear(state_dim, hidden_dim) self.fc_A = torch.nn.Linear(hidden_dim, action_dim) self.fc_V = torch.nn.Linear(hidden_dim, 1 ) def forward (self, x ): A = self.fc_A(F.relu(self.fc1(x))) V = self.fc_V(F.relu(self.fc1(x))) Q = V + A - A.mean(1 ).view(-1 , 1 ) return Q

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 class DQN : ''' DQN算法,包括Double DQN和Dueling DQN ''' def __init__ (self, state_dim, hidden_dim, action_dim, learning_rate, gamma, epsilon, target_update, device, dqn_type='VanillaDQN' ): self.action_dim = action_dim if dqn_type == 'DuelingDQN' : self.q_net = VAnet(state_dim, hidden_dim, self.action_dim).to(device) self.target_q_net = VAnet(state_dim, hidden_dim, self.action_dim).to(device) else : self.q_net = Qnet(state_dim, hidden_dim, self.action_dim).to(device) self.target_q_net = Qnet(state_dim, hidden_dim, self.action_dim).to(device) self.optimizer = torch.optim.Adam(self.q_net.parameters(),lr=learning_rate) self.gamma = gamma self.epsilon = epsilon self.target_update = target_update self.count = 0 self.dqn_type = dqn_type self.device = device def take_action (self, state ): if np.random.random() < self.epsilon: action = np.random.randint(self.action_dim) else : state = torch.tensor(np.array([state]), dtype=torch.float ).to(self.device) action = self.q_net(state).argmax().item() return action def max_q_value (self, state ): state = torch.tensor([state], dtype=torch.float ).to(self.device) return self.q_net(state).max ().item() def update (self, transition_dict ): states = torch.tensor(transition_dict['states' ], dtype=torch.float ).to(self.device) actions = torch.tensor(transition_dict['actions' ]).view(-1 , 1 ).to(self.device) rewards = torch.tensor(transition_dict['rewards' ], dtype=torch.float ).view(-1 , 1 ).to(self.device) next_states = torch.tensor(transition_dict['next_states' ], dtype=torch.float ).to(self.device) dones = torch.tensor(transition_dict['dones' ], dtype=torch.float ).view(-1 , 1 ).to(self.device) q_values = self.q_net(states).gather(1 , actions) if self.dqn_type == 'DoubleDQN' : max_action = self.q_net(next_states).max (1 )[1 ].view(-1 , 1 ) max_next_q_values = self.target_q_net(next_states).gather(1 , max_action) else : max_next_q_values = self.target_q_net(next_states).max (1 )[0 ].view(-1 , 1 ) q_targets = rewards + self.gamma * max_next_q_values * (1 - dones) dqn_loss = torch.mean(F.mse_loss(q_values, q_targets)) self.optimizer.zero_grad() dqn_loss.backward() self.optimizer.step() if self.count % self.target_update == 0 : self.target_q_net.load_state_dict(self.q_net.state_dict()) self.count += 1

训练过程 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 random.seed(0 ) np.random.seed(0 ) env.seed(0 ) torch.manual_seed(0 ) replay_buffer = rl_utils.ReplayBuffer(buffer_size) agent = DQN(state_dim, hidden_dim, action_dim, lr, gamma, epsilon, target_update, device, 'DuelingDQN' ) return_list, max_q_value_list = train_DQN(agent, env, num_episodes, replay_buffer, minimal_size, batch_size) episodes_list = list (range (len (return_list))) mv_return = rl_utils.moving_average(return_list, 5 ) plt.plot(episodes_list, mv_return) plt.xlabel('Episodes' ) plt.ylabel('Returns' ) plt.title('Dueling DQN on {}' .format (env_name)) plt.show() frames_list = list (range (len (max_q_value_list))) plt.plot(frames_list, max_q_value_list) plt.axhline(0 , c='orange' , ls='--' ) plt.axhline(10 , c='red' , ls='--' ) plt.xlabel('Frames' ) plt.ylabel('Q value' ) plt.title('Dueling DQN on {}' .format (env_name)) plt.show()

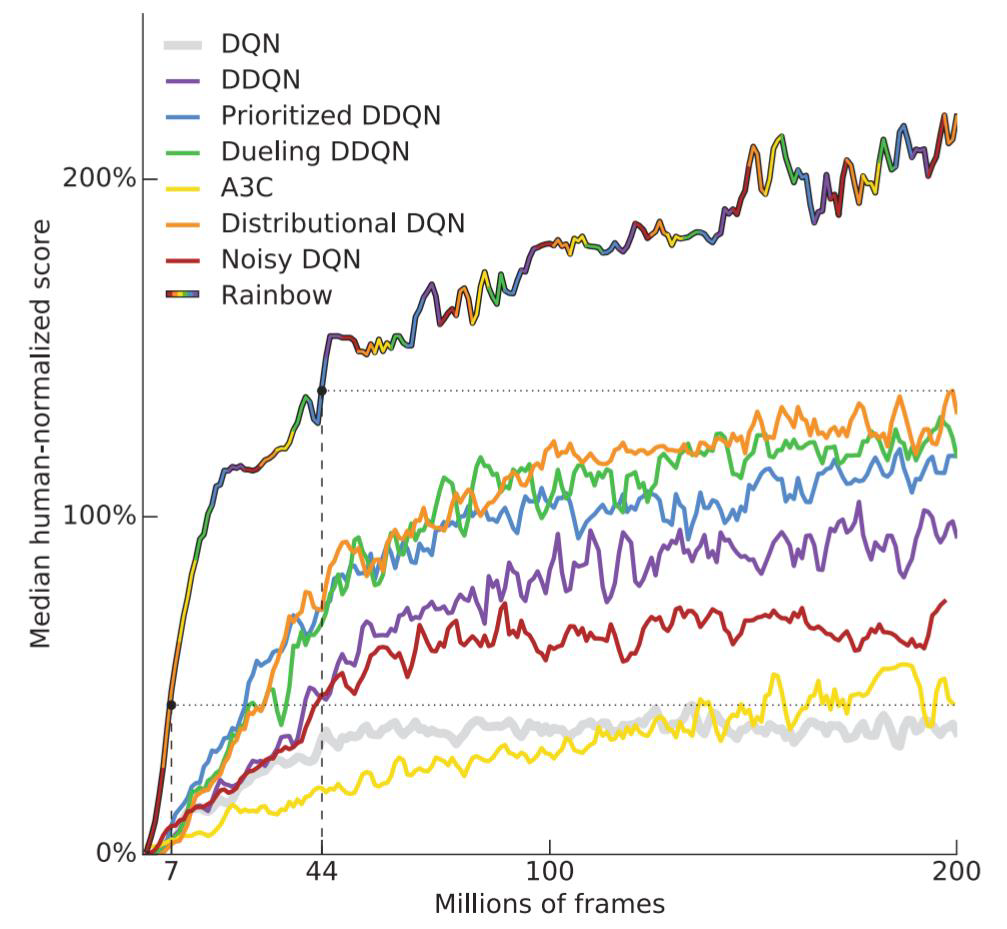

Rainbow 结合众多Value-based 的DRL方法

https://arxiv.org/pdf/1710.02298v1

step-by-step tutorial from DQN to Rainbow :https://github.com/Curt-Park/rainbow-is-all-you-need

利用柯西变异对最优解进行扰动,帮助算法跳出局部最优——噪音网络可以跳出局部最优

https://kns.cnki.net/kcms2/article/abstract?v=m2RMPZxbF1KwDDxNKEe4QhTP_vy3bL8oaQruUyhFdavQ1TlUKymat-ONeDYvzpBYsA4hC0dkbvqL0LD_omClpP67PaLd-vnDT8szQzWeSyZo_QPfYBk93g==&uniplatform=NZKPT

使用拉丁超立方体抽样增强初始种群的均匀性和多样性