Prerequisites:

Mybatis

Spring

SpringMVC

SpringBoot

Microservices

Dubbo

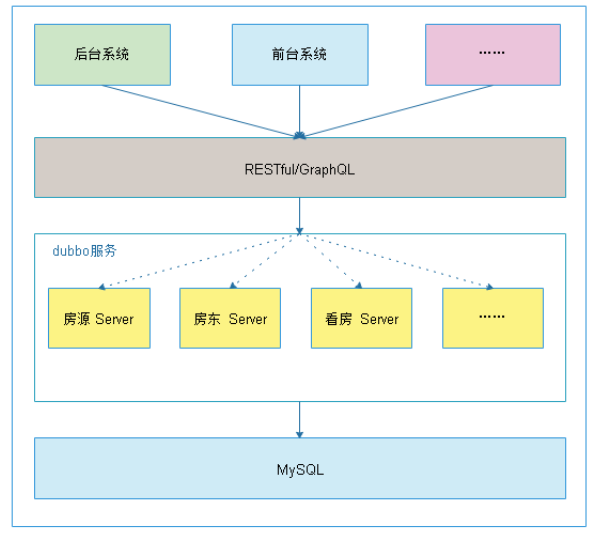

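The back-office information management system is developed with the front end and back end separated. The front end is adapted from a LayUI template, and the back end uses a SpringBoot + Dubbo + SSM architecture.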

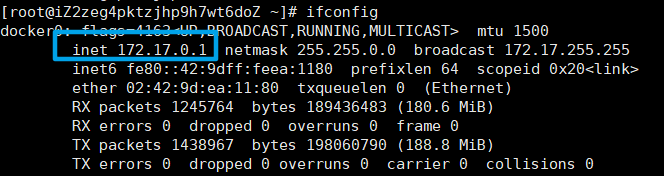

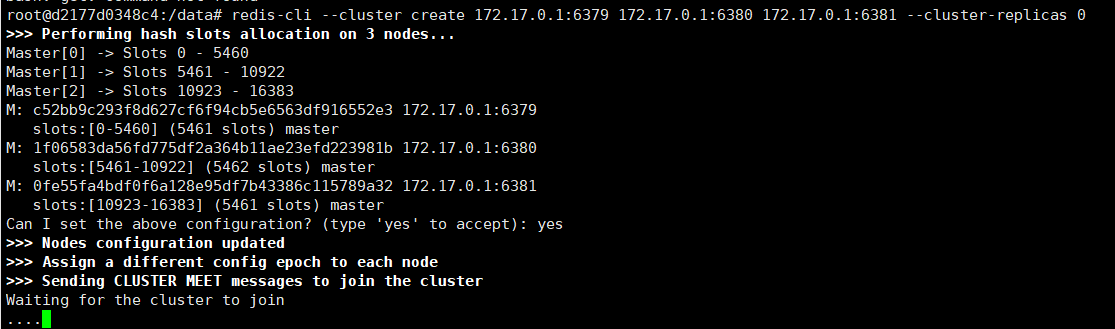

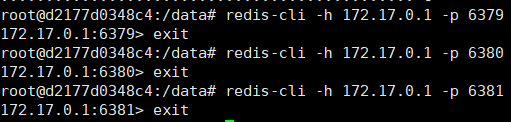

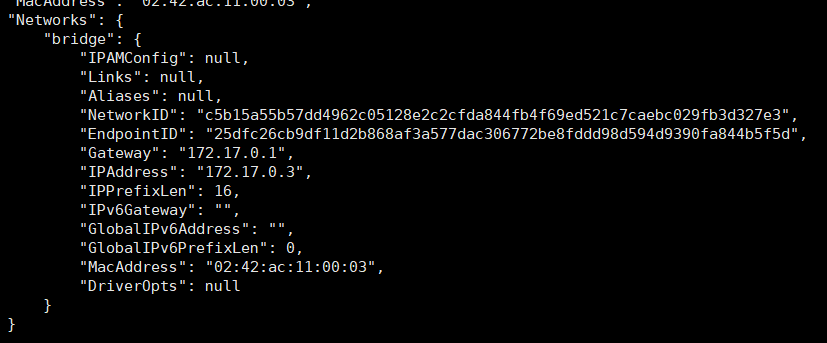

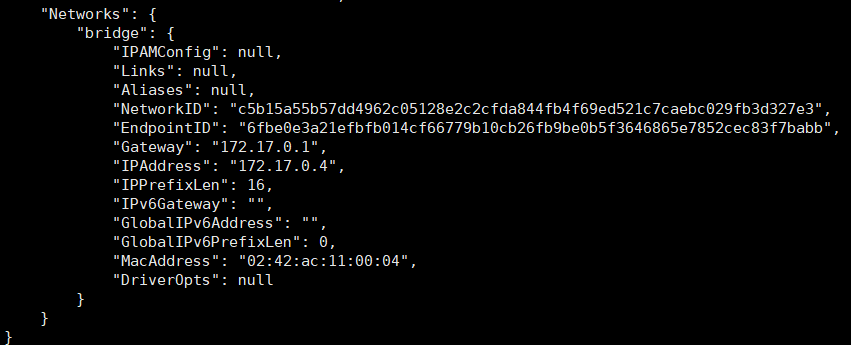

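This part covers: the back-office system architecture; tooling (image storage with COS object storage); analyzing the relationships between services and creating the projects; code optimization.

Back-office system

Back-office system architecture

The back-office services follow the microservice approach and use Dubbo as the service governance framework.

Microservice architecture

In a monolithic application, all functional modules of a service are deployed on the same machine. As the user base grows, the application responds more slowly. Response time can then be improved by deploying the application on one more machine. Suppose the application has only a house resource module and a user module, and analysis shows that the house resource module consumes the most resources. The most efficient way to improve response time is to give more resources to the house resource module alone, and a monolithic application cannot do that.

In a microservice application, the application is split into several services by functional module. At deployment time, one machine can run one user service and one house resource service while another machine runs two house resource services, so the extra resources go to the module that needs them.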

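Why Dubbo is introduced in this project

Dubbo performs service governance on a producer-consumer model (provider and consumer in Dubbo's own terms). Within a given business flow, a service is either the service provider or the service consumer, which reduces the coupling between layers.

Consider this scenario: when an end user opens the front-end application, it pushes a list of house resources based on location, the layouts the user usually browses, and the preferences the user has set. An administrator who logs in to the management system also needs to fetch a list of house resources. The two roles are different, so the lists they receive are different too, and different handlers are needed to process the list returned by the model layer. In both handlers, however, fetching the list from the model layer is a shared step, so the model-layer query is turned into one service that different controllers can call.

This closely resembles Dubbo's producer-consumer model: the Service layer processes the data and provides it to the Controller layer, and the Controller layer runs its own business flow on that data. For that reason I treat the Service layer as the service producer and the Controller layer as the service consumer.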
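The shared-query idea can be sketched in plain Java without Dubbo. This is only an illustration under stated assumptions: `HouseListProvider`, `FrontController`, `AdminController`, and the `House` class are hypothetical names that do not appear in the project, and an in-memory list stands in for the model layer.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ProviderConsumerDemo {

    // Hypothetical domain object: a house with a city and a published flag.
    static class House {
        final String title;
        final String city;
        final boolean published;

        House(String title, String city, boolean published) {
            this.title = title;
            this.city = city;
            this.published = published;
        }
    }

    // Provider side: the single shared query that every consumer calls.
    interface HouseListProvider {
        List<House> queryHouses();
    }

    // Consumer 1: the front end only shows published houses in the user's city.
    static class FrontController {
        private final HouseListProvider provider;

        FrontController(HouseListProvider provider) {
            this.provider = provider;
        }

        List<String> list(String userCity) {
            List<String> titles = new ArrayList<>();
            for (House h : provider.queryHouses()) {
                if (h.published && h.city.equals(userCity)) {
                    titles.add(h.title);
                }
            }
            return titles;
        }
    }

    // Consumer 2: the admin system sees every house, published or not.
    static class AdminController {
        private final HouseListProvider provider;

        AdminController(HouseListProvider provider) {
            this.provider = provider;
        }

        List<String> list() {
            List<String> titles = new ArrayList<>();
            for (House h : provider.queryHouses()) {
                titles.add(h.title);
            }
            return titles;
        }
    }

    // In-memory stand-in for the model layer.
    static HouseListProvider sampleProvider() {
        List<House> data = Arrays.asList(
                new House("A", "Shanghai", true),
                new House("B", "Shanghai", false),
                new House("C", "Beijing", true));
        return () -> data;
    }

    public static void main(String[] args) {
        HouseListProvider provider = sampleProvider();
        System.out.println(new FrontController(provider).list("Shanghai"));
        System.out.println(new AdminController(provider).list());
    }
}
```

Both controllers depend only on the `HouseListProvider` interface, which is the same role the Dubbo service interface plays between the Service layer and the Controller layer.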

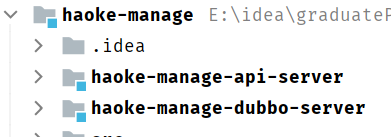

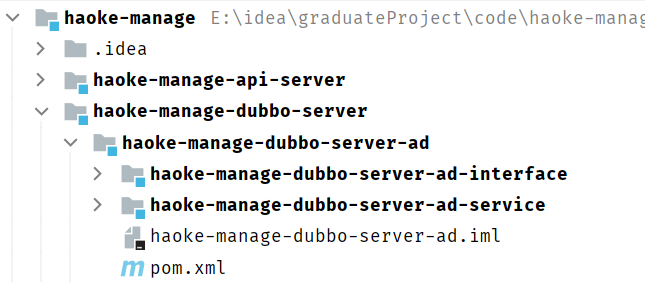

Project structure

Parent project haoke-manage

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.haoke.manage</groupId>
    <artifactId>haoke-manage</artifactId>
    <packaging>pom</packaging>
    <version>1.0-SNAPSHOT</version>

    <modules>
        <module>haoke-manage-dubbo-server</module>
        <module>haoke-manage-api-server</module>
    </modules>

    <!-- Spring Boot parent -->
    <parent>
        <artifactId>spring-boot-starter-parent</artifactId>
        <groupId>org.springframework.boot</groupId>
        <version>2.4.3</version>
    </parent>

    <dependencies>
        <!-- Spring Boot test support -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <version>2.4.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.commons</groupId>
            <artifactId>commons-lang3</artifactId>
        </dependency>
        <!-- Dubbo Spring Boot starter and Dubbo itself -->
        <dependency>
            <groupId>com.alibaba.boot</groupId>
            <artifactId>dubbo-spring-boot-starter</artifactId>
            <version>0.2.0</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>dubbo</artifactId>
            <version>2.6.4</version>
        </dependency>
        <!-- ZooKeeper registry and its client -->
        <dependency>
            <groupId>org.apache.zookeeper</groupId>
            <artifactId>zookeeper</artifactId>
            <version>3.4.13</version>
        </dependency>
        <dependency>
            <groupId>com.github.sgroschupf</groupId>
            <artifactId>zkclient</artifactId>
            <version>0.1</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

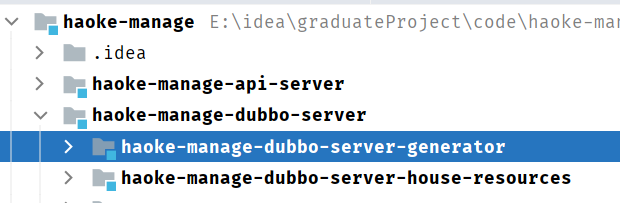

Subprojects

Service provider haoke-manage-dubbo-server

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>haoke-manage</artifactId>
        <groupId>com.haoke.manage</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>
    <packaging>pom</packaging>

    <modules>
        <module>haoke-manage-dubbo-server-house-resources</module>
        <module>haoke-manage-dubbo-server-generator</module>
    </modules>

    <artifactId>haoke-manage-dubbo-server</artifactId>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
        </dependency>
    </dependencies>
</project>
```

Service consumer haoke-manage-api-server

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>haoke-manage</artifactId>
        <groupId>com.haoke.manage</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>haoke-manage-api-server</artifactId>

    <dependencies>
        <!-- Web support for the RESTful endpoints -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- Dubbo service interfaces and POJOs published by the provider -->
        <dependency>
            <groupId>com.haoke.manage</groupId>
            <artifactId>haoke-manage-dubbo-server-house-resources-interface</artifactId>
            <version>1.0-SNAPSHOT</version>
        </dependency>
    </dependencies>
</project>
```

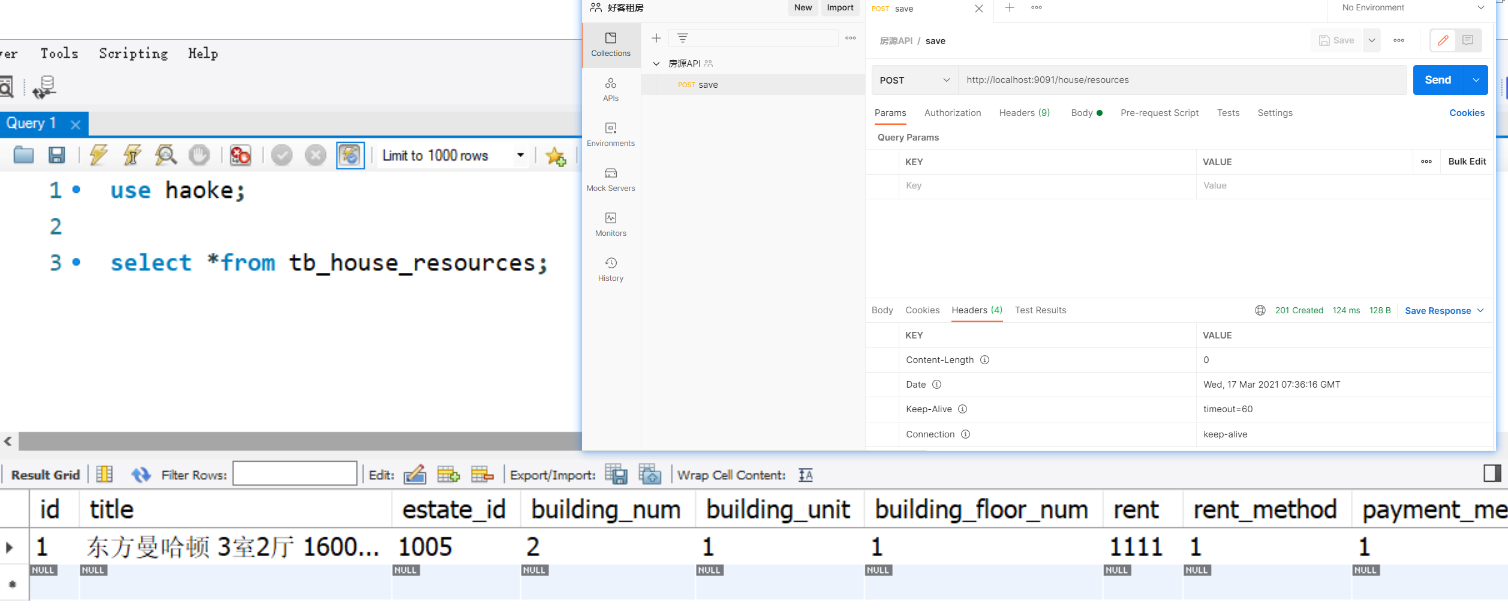

Building the house resource service

Creating the tables

```sql
USE haoke;

DROP TABLE IF EXISTS `TB_ESTATE`;
CREATE TABLE `TB_ESTATE` (
  `id` bigint NOT NULL AUTO_INCREMENT,
  `name` varchar(100) DEFAULT NULL COMMENT 'estate name',
  `province` varchar(10) DEFAULT NULL COMMENT 'province',
  `city` varchar(10) DEFAULT NULL COMMENT 'city',
  `area` varchar(10) DEFAULT NULL COMMENT 'district',
  `address` varchar(100) DEFAULT NULL COMMENT 'street address',
  `year` varchar(10) DEFAULT NULL COMMENT 'year built',
  `type` varchar(10) DEFAULT NULL COMMENT 'building type',
  `property_cost` varchar(10) DEFAULT NULL COMMENT 'property management fee',
  `property_company` varchar(20) DEFAULT NULL COMMENT 'property management company',
  `developers` varchar(20) DEFAULT NULL COMMENT 'developer',
  `created` datetime DEFAULT NULL COMMENT 'creation time',
  `updated` datetime DEFAULT NULL COMMENT 'update time',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=1006 DEFAULT CHARSET=utf8 COMMENT='estate table';

INSERT INTO `TB_ESTATE` VALUES
(1001,'中远两湾城','上海市','上海市','普陀区','远景路97弄','2001','塔楼/板楼','1.5','上海中远物业管理发展有限公司','上海万业企业股份有限公司','2021-03-16 23:00:20','2021-03-16 23:00:20'),
(1002,'上海康城','上海市','上海市','闵行区','莘松路958弄','2001','塔楼/板楼','1.5','盛孚物业','闵行房地产','2021-03-16 23:00:20','2021-03-16 23:00:20'),
(1003,'保利西子湾','上海市','上海市','松江区','广富林路1188弄','2008','塔楼/板楼','1.75','上海保利物业管理','上海城乾房地产开发有限公司','2021-03-16 23:00:20','2021-03-16 23:00:20'),
(1004,'万科城市花园','上海市','上海市','松江区','广富林路1188弄','2002','塔楼/板楼','1.5','上海保利物业管理','上海城乾房地产开发有限公司','2021-03-16 23:00:20','2021-03-16 23:00:20'),
(1005,'上海阳城','上海市','上海市','闵行区','罗锦路888弄','2002','塔楼/板楼','1.5','上海莲阳物业管理有限公司','上海莲城房地产开发有限公司','2021-03-16 23:00:20','2021-03-16 23:00:20');

CREATE TABLE `TB_HOUSE_RESOURCES` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT,
  `title` varchar(100) DEFAULT NULL COMMENT 'listing title',
  `estate_id` bigint(20) DEFAULT NULL COMMENT 'estate id',
  `building_num` varchar(5) DEFAULT NULL COMMENT 'building number',
  `building_unit` varchar(5) DEFAULT NULL COMMENT 'unit number',
  `building_floor_num` varchar(5) DEFAULT NULL COMMENT 'door number',
  `rent` int(10) DEFAULT NULL COMMENT 'rent',
  `rent_method` tinyint(1) DEFAULT NULL COMMENT 'rental type: 1-whole unit, 2-shared',
  `payment_method` tinyint(1) DEFAULT NULL COMMENT 'payment: 1-pay 1 month + 1 month deposit, 2-pay 3 + 1 deposit, 3-pay 6 + 1 deposit, 4-pay yearly + 1 deposit, 5-other',
  `house_type` varchar(255) DEFAULT NULL COMMENT 'layout, e.g. 2室1厅1卫',
  `covered_area` varchar(10) DEFAULT NULL COMMENT 'gross floor area',
  `use_area` varchar(10) DEFAULT NULL COMMENT 'usable area',
  `floor` varchar(10) DEFAULT NULL COMMENT 'floor, e.g. 8/26',
  `orientation` varchar(2) DEFAULT NULL COMMENT 'orientation: 东 (east), 南 (south), 西 (west), 北 (north)',
  `decoration` tinyint(1) DEFAULT NULL COMMENT 'decoration: 1-fully furnished, 2-basic, 3-unfinished',
  `facilities` varchar(50) DEFAULT NULL COMMENT 'facilities, e.g. 1,2,3',
  `pic` varchar(200) DEFAULT NULL COMMENT 'pictures, at most 5',
  `house_desc` varchar(200) DEFAULT NULL COMMENT 'description',
  `contact` varchar(10) DEFAULT NULL COMMENT 'contact person',
  `mobile` varchar(11) DEFAULT NULL COMMENT 'mobile number',
  `time` tinyint(1) DEFAULT NULL COMMENT 'viewing time: 1-morning, 2-noon, 3-afternoon, 4-evening, 5-all day',
  `property_cost` varchar(10) DEFAULT NULL COMMENT 'property management fee',
  `created` datetime DEFAULT NULL,
  `updated` datetime DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COMMENT='house resource table';
```

POJO

BasePojo

```java
package com.haoke.dubbo.server.pojo;

import lombok.Data;

import java.io.Serializable;
import java.util.Date;

/** Common audit fields shared by all entities. */
@Data
public abstract class BasePojo implements Serializable {
    private Date created;
    private Date updated;
}
```

House resource POJO

1. Generating the POJO with MybatisPlus reverse engineering <span id="generator"></span>

The AutoGenerator plugin of mybatis-plus generates the corresponding POJO classes from the table structure in the database.

Create the generator project

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <!-- The parent is not shown in the original listing; it is assumed here from the
         <modules> list of haoke-manage-dubbo-server, which names this project. -->
    <parent>
        <artifactId>haoke-manage-dubbo-server</artifactId>
        <groupId>com.haoke.manage</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>haoke-manage-dubbo-server-generator</artifactId>

    <dependencies>
        <!-- Template engine used by the generator -->
        <dependency>
            <groupId>org.freemarker</groupId>
            <artifactId>freemarker</artifactId>
        </dependency>
        <dependency>
            <groupId>com.baomidou</groupId>
            <artifactId>mybatis-plus-core</artifactId>
            <version>3.4.2</version>
        </dependency>
        <dependency>
            <groupId>com.baomidou</groupId>
            <artifactId>mybatis-plus-generator</artifactId>
            <version>3.4.1</version>
        </dependency>
    </dependencies>
</project>
```

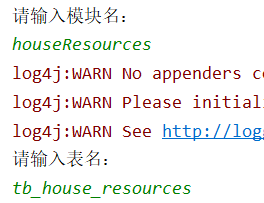

Writing CodeGenerator

```java
import com.baomidou.mybatisplus.core.exceptions.MybatisPlusException;
import com.baomidou.mybatisplus.core.toolkit.StringPool;
import com.baomidou.mybatisplus.core.toolkit.StringUtils;
import com.baomidou.mybatisplus.generator.AutoGenerator;
import com.baomidou.mybatisplus.generator.InjectionConfig;
import com.baomidou.mybatisplus.generator.config.*;
import com.baomidou.mybatisplus.generator.config.po.TableInfo;
import com.baomidou.mybatisplus.generator.config.rules.NamingStrategy;
import com.baomidou.mybatisplus.generator.engine.FreemarkerTemplateEngine;

import java.util.ArrayList;
import java.util.List;
import java.util.Scanner;

public class CodeGenerator {

    /** Reads one value from the console. */
    public static String scanner(String tip) {
        Scanner scanner = new Scanner(System.in);
        StringBuilder help = new StringBuilder();
        help.append("Please enter " + tip + ":");
        System.out.println(help.toString());
        if (scanner.hasNext()) {
            String ipt = scanner.next();
            if (StringUtils.isNotEmpty(ipt)) {
                return ipt;
            }
        }
        throw new MybatisPlusException("Please enter a valid " + tip + "!");
    }

    public static void main(String[] args) {
        // Code generator
        AutoGenerator mpg = new AutoGenerator();

        // Global configuration
        GlobalConfig gc = new GlobalConfig();
        String projectPath = System.getProperty("user.dir");
        gc.setOutputDir(projectPath + "/src/main/java");
        gc.setAuthor("amostian");
        gc.setOpen(false);
        mpg.setGlobalConfig(gc);

        // Data source configuration
        DataSourceConfig dsc = new DataSourceConfig();
        dsc.setUrl("jdbc:mysql://82.157.25.57:4002/haoke?characterEncoding=utf8&useSSL=false&serverTimezone=UTC");
        dsc.setDriverName("com.mysql.cj.jdbc.Driver");
        dsc.setUsername("mycat");
        dsc.setPassword("mycat");
        mpg.setDataSource(dsc);

        // Package configuration
        PackageConfig pc = new PackageConfig();
        pc.setModuleName(scanner("module name"));
        pc.setParent("com.haoke.dubbo.server");
        mpg.setPackageInfo(pc);

        // Custom configuration
        InjectionConfig cfg = new InjectionConfig() {
            @Override
            public void initMap() {
            }
        };

        // Custom output location for the mapper XML files
        List<FileOutConfig> focList = new ArrayList<>();
        focList.add(new FileOutConfig("/templates/mapper.xml.ftl") {
            @Override
            public String outputFile(TableInfo tableInfo) {
                return projectPath + "/src/main/resources/mapper/" + pc.getModuleName()
                        + "/" + tableInfo.getEntityName() + "Mapper" + StringPool.DOT_XML;
            }
        });
        cfg.setFileOutConfigList(focList);
        mpg.setCfg(cfg);
        // Disable the default XML template, since the custom one above replaces it
        mpg.setTemplate(new TemplateConfig().setXml(null));

        // Strategy configuration
        StrategyConfig strategy = new StrategyConfig();
        strategy.setNaming(NamingStrategy.underline_to_camel);
        strategy.setColumnNaming(NamingStrategy.underline_to_camel);
        strategy.setSuperEntityClass("com.haoke.dubbo.server.pojo.BasePojo");
        strategy.setEntityLombokModel(true);
        strategy.setRestControllerStyle(true);
        strategy.setInclude(scanner("table name"));
        strategy.setSuperEntityColumns("id");
        strategy.setControllerMappingHyphenStyle(true);
        strategy.setTablePrefix(pc.getModuleName() + "_");
        mpg.setStrategy(strategy);

        mpg.setTemplateEngine(new FreemarkerTemplateEngine());
        mpg.execute();
    }
}
```

Run the code

Only the entity (POJO) is needed.

@EqualsAndHashCode(callSuper = true) generates the equals and hashCode methods automatically.

@Accessors(chain = true) is generally not needed, so it is removed.

```java
package com.haoke.dubbo.server.pojo;

import com.baomidou.mybatisplus.annotation.IdType;
import com.baomidou.mybatisplus.annotation.TableId;
import com.baomidou.mybatisplus.annotation.TableName;
import lombok.Data;
import lombok.EqualsAndHashCode;

@Data
@EqualsAndHashCode(callSuper = true)
@TableName("TB_HOUSE_RESOURCES")
public class HouseResources extends BasePojo {

    private static final long serialVersionUID = -2471649692631014216L;

    // Primary key of TB_HOUSE_RESOURCES. The original listing attaches @TableId to
    // estateId and has no id field; the table's primary key is `id`, so it is declared here.
    @TableId(value = "ID", type = IdType.AUTO)
    private Long id;

    private String title;
    private Long estateId;
    private String buildingNum;
    private String buildingUnit;
    private String buildingFloorNum;
    private Integer rent;
    private Integer rentMethod;
    private Integer paymentMethod;
    private String houseType;
    private String coveredArea;
    private String useArea;
    private String floor;
    private String orientation;
    private Integer decoration;
    private String facilities;
    private String pic;
    private String houseDesc;
    private String contact;
    private String mobile;
    private Integer time;
    private String propertyCost;
}
```
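The field names of the generated POJO follow from the `underline_to_camel` naming strategy set in the generator. The mapping itself is simple; here is a minimal sketch of it in plain Java. It is not MyBatis-Plus's own implementation, and `CamelCaseDemo` and `underlineToCamel` are hypothetical names used only for illustration.

```java
import java.util.Locale;

class CamelCaseDemo {

    // Converts a snake_case column name such as "estate_id" to a camelCase field name.
    static String underlineToCamel(String column) {
        StringBuilder sb = new StringBuilder();
        boolean upperNext = false;
        for (char c : column.toLowerCase(Locale.ROOT).toCharArray()) {
            if (c == '_') {
                // Drop the underscore and capitalize the character that follows it
                upperNext = true;
            } else if (upperNext) {
                sb.append(Character.toUpperCase(c));
                upperNext = false;
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(underlineToCamel("estate_id"));
        System.out.println(underlineToCamel("building_floor_num"));
    }
}
```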

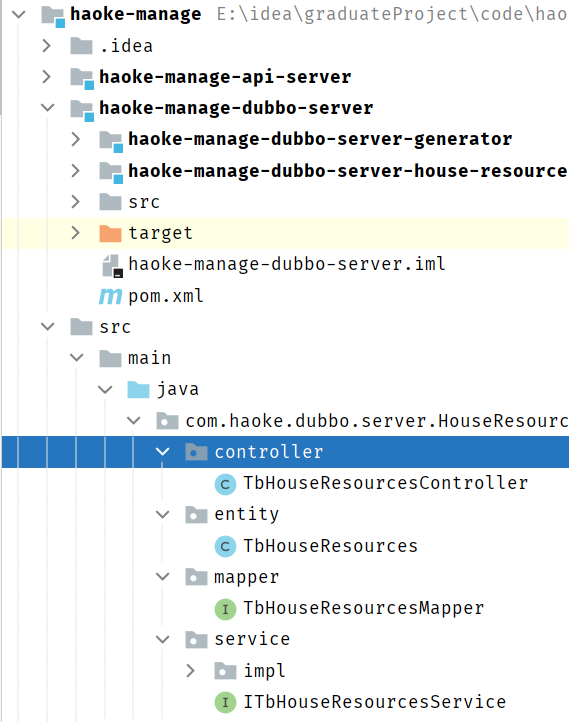

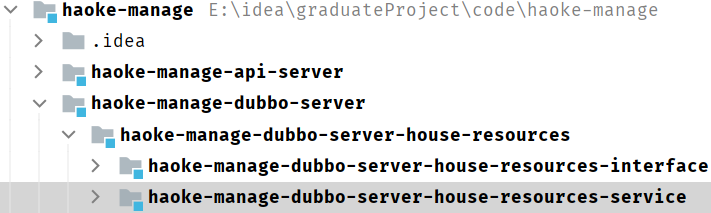

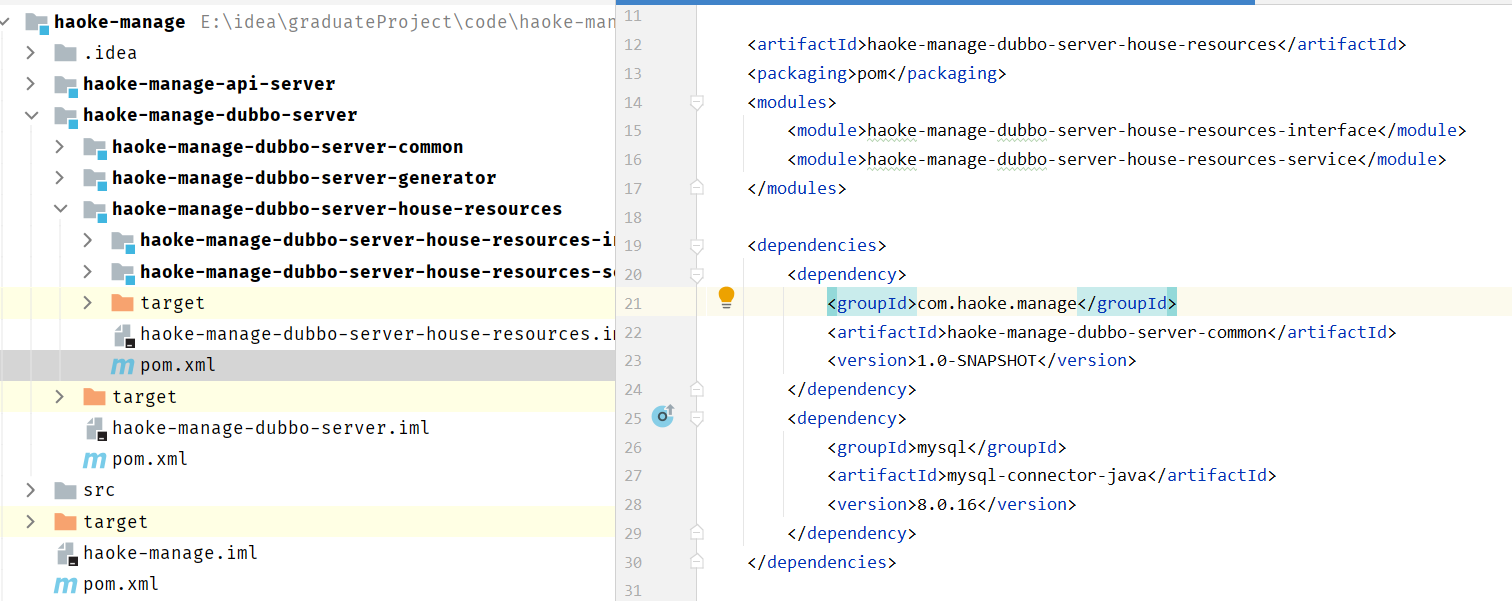

House resource service project structure

The house resource business is split into an interface layer and an implementation layer so that each can be maintained as a separate component.

The implementation layer is Spring business code that implements the actual business logic.

The interface layer is what gets exported as the Dubbo service.

haoke-manage-dubbo-server-house-resources

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>haoke-manage-dubbo-server</artifactId>
        <groupId>com.haoke.manage</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>haoke-manage-dubbo-server-house-resources</artifactId>
    <packaging>pom</packaging>

    <modules>
        <module>haoke-manage-dubbo-server-house-resources-interface</module>
        <module>haoke-manage-dubbo-server-house-resources-service</module>
    </modules>

    <dependencies>
        <dependency>
            <groupId>com.baomidou</groupId>
            <artifactId>mybatis-plus-boot-starter</artifactId>
            <version>3.4.2</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>8.0.16</version>
        </dependency>
    </dependencies>
</project>
```

House resource business interface haoke-manage-dubbo-server-house-resources-interface

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>haoke-manage-dubbo-server-house-resources</artifactId>
        <groupId>com.haoke.manage</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>haoke-manage-dubbo-server-house-resources-interface</artifactId>
</project>
```

House resource business implementation haoke-manage-dubbo-server-house-resources-service

The implementation of the house resource service is Spring business code.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <parent>
        <artifactId>haoke-manage-dubbo-server-house-resources</artifactId>
        <groupId>com.haoke.manage</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <modelVersion>4.0.0</modelVersion>

    <artifactId>haoke-manage-dubbo-server-house-resources-service</artifactId>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-jdbc</artifactId>
        </dependency>
        <!-- The Dubbo service interface that this module implements -->
        <dependency>
            <groupId>com.haoke.manage</groupId>
            <artifactId>haoke-manage-dubbo-server-house-resources-interface</artifactId>
            <version>1.0-SNAPSHOT</version>
        </dependency>
    </dependencies>
</project>
```

Configuration

application.properties

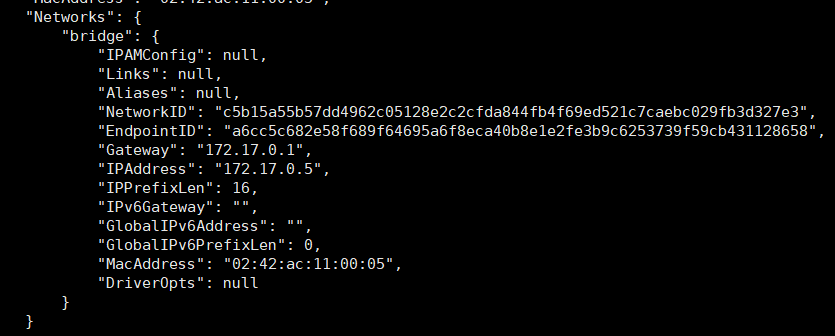

```properties
# Spring Boot application
spring.application.name = haoke-manage-dubbo-server-house-resources

# Data source
spring.datasource.driver-class-name =com.mysql.cj.jdbc.Driver
spring.datasource.url =jdbc:mysql://82.157.25.25:4002/haoke?characterEncoding=utf8&useSSL=false&serverTimezone=UTC
spring.datasource.username =mycat
spring.datasource.password =mycat

# Package scanned for Dubbo service implementations
dubbo.scan.basePackages = com.haoke.server.api

# Dubbo application name
dubbo.application.name = dubbo-provider-house-resources
dubbo.service.version = 1.0.0

# Protocol and port
dubbo.protocol.name = dubbo
dubbo.protocol.port = 20880

# ZooKeeper registry
dubbo.registry.address = zookeeper://8.140.130.91:2181
dubbo.registry.client = zkclient
```

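Adding a house resource

Service provider

Dubbo service definition

haoke-manage-dubbo-server-house-resources-interface

```java
package com.haoke.server.api;

import com.haoke.server.pojo.HouseResources;

public interface ApiHouseResourcesService {

    /**
     * Adds a house resource.
     *
     * @param houseResources the house resource to save
     * @return -1 if the input is invalid, otherwise the number of rows inserted
     */
    int saveHouseResources(HouseResources houseResources);
}
```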

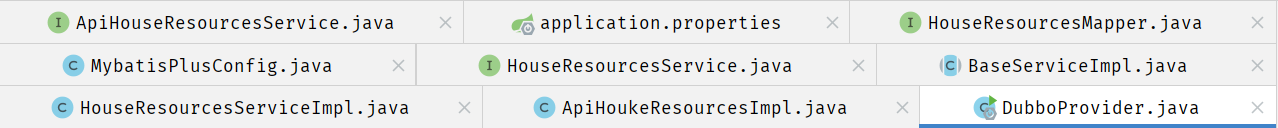

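Implementing the add-house-resource business

Create a SpringBoot application that implements the add-house-resource service.

Connecting to the database is the job of the Dao layer.

Implementing the CRUD interface is the job of the Service layer.

Dao layer

MybatisPlus configuration class

```java
package com.haoke.server.config;

import org.mybatis.spring.annotation.MapperScan;
import org.springframework.context.annotation.Configuration;

// Registers every mapper interface found in the mapper package
@MapperScan("com.haoke.server.mapper")
@Configuration
public class MybatisPlusConfig {
}
```

HouseResourcesMapper interface

```java
package com.haoke.server.mapper;

import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import com.haoke.dubbo.server.pojo.HouseResources;

// BaseMapper already supplies the generic CRUD methods
public interface HouseResourcesMapper extends BaseMapper<HouseResources> {
}
```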

Service layer

This is the Spring service, the concrete implementation behind the Dubbo service. It does not need to be exposed externally, and it is where transaction control and other validation logic belong.

Define the interface

```java
package com.haoke.server.service;

import com.haoke.server.pojo.HouseResources;

public interface HouseResourcesService {

    /**
     * @param houseResources the house resource to save
     * @return -1 if the input is invalid, otherwise the number of rows inserted
     */
    int saveHouseResources(HouseResources houseResources);
}
```

Write the implementation class

Generic CRUD implementation

```java
package com.haoke.server.service.impl;

import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import com.baomidou.mybatisplus.core.mapper.BaseMapper;
import com.baomidou.mybatisplus.core.metadata.IPage;
import com.baomidou.mybatisplus.extension.plugins.pagination.Page;
import com.haoke.dubbo.server.pojo.BasePojo;
import org.springframework.beans.factory.annotation.Autowired;

import java.util.Date;
import java.util.List;

/** Generic CRUD operations shared by the service implementations. */
public class BaseServiceImpl<T extends BasePojo> {

    // MyBatis-Plus mapper for the entity type T
    @Autowired
    private BaseMapper<T> mapper;

    /** Queries one record by primary key. */
    public T queryById(Long id) {
        return this.mapper.selectById(id);
    }

    /** Queries all records. */
    public List<T> queryAll() {
        return this.mapper.selectList(null);
    }

    /** Queries a single record matching the non-null fields of record. */
    public T queryOne(T record) {
        return this.mapper.selectOne(new QueryWrapper<>(record));
    }

    /** Queries all records matching the non-null fields of record. */
    public List<T> queryListByWhere(T record) {
        return this.mapper.selectList(new QueryWrapper<>(record));
    }

    /** Queries one page of records matching the non-null fields of record. */
    public IPage<T> queryPageListByWhere(T record, Integer page, Integer rows) {
        return this.mapper.selectPage(new Page<T>(page, rows), new QueryWrapper<>(record));
    }

    // Not in the original listing: the list query later in this article calls
    // super.queryPageList(...), so it is assumed to wrap selectPage like this.
    public IPage<T> queryPageList(QueryWrapper<T> queryWrapper, Integer page, Integer rows) {
        return this.mapper.selectPage(new Page<T>(page, rows), queryWrapper);
    }

    /** Inserts a record and stamps its created and updated times. */
    public Integer save(T record) {
        record.setCreated(new Date());
        record.setUpdated(record.getCreated());
        return this.mapper.insert(record);
    }

    /** Updates a record by primary key and refreshes its updated time. */
    public Integer update(T record) {
        record.setUpdated(new Date());
        return this.mapper.updateById(record);
    }

    /** Deletes one record by primary key. */
    public Integer deleteById(Long id) {
        return this.mapper.deleteById(id);
    }

    /** Deletes several records by primary key. */
    public Integer deleteByIds(List<Long> ids) {
        return this.mapper.deleteBatchIds(ids);
    }

    /** Deletes all records matching the non-null fields of record. */
    public Integer deleteByWhere(T record) {
        return this.mapper.delete(new QueryWrapper<>(record));
    }
}
```
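The generic base-service pattern can be tried out without a database. The sketch below is a simplified stand-in, not the project's code: `InMemoryMapper` is a hypothetical replacement for `BaseMapper<T>` that keeps rows in a map, and `House` and `HouseService` are illustrative names. It shows how a subclass inherits `save`, including the created/updated stamping, just by fixing the type parameter.

```java
import java.util.Date;
import java.util.HashMap;
import java.util.Map;

class BaseServiceSketch {

    // Audit fields shared by all entities, as in BasePojo.
    static abstract class BasePojo {
        Date created;
        Date updated;
    }

    static class House extends BasePojo {
        final String title;

        House(String title) {
            this.title = title;
        }
    }

    // Hypothetical stand-in for BaseMapper<T>: stores rows in memory instead of a database.
    static class InMemoryMapper<T> {
        private final Map<Long, T> rows = new HashMap<>();
        private long nextId = 1;

        int insert(T row) {
            rows.put(nextId++, row);
            return 1; // one row affected
        }

        T selectById(long id) {
            return rows.get(id);
        }

        int deleteById(long id) {
            return rows.remove(id) == null ? 0 : 1;
        }
    }

    // Generic CRUD written once for every entity type.
    static class BaseService<T extends BasePojo> {
        private final InMemoryMapper<T> mapper = new InMemoryMapper<>();

        int save(T record) {
            record.created = new Date();
            record.updated = record.created;
            return mapper.insert(record);
        }

        T queryById(long id) {
            return mapper.selectById(id);
        }

        int deleteById(long id) {
            return mapper.deleteById(id);
        }
    }

    // A concrete service only has to fix the type parameter.
    static class HouseService extends BaseService<House> {
    }

    public static void main(String[] args) {
        HouseService service = new HouseService();
        House house = new House("Two-bedroom near the metro");
        System.out.println("rows inserted: " + service.save(house));
        System.out.println("stored title: " + service.queryById(1).title);
    }
}
```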

House resource implementation class: HouseResourcesServiceImpl

```java
package com.haoke.server.service.impl;

import com.alibaba.dubbo.common.utils.StringUtils;
import com.haoke.server.pojo.HouseResources;
import com.haoke.server.service.HouseResourcesService;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

@Transactional
@Service
public class HouseResourcesServiceImpl extends BaseServiceImpl<HouseResources> implements HouseResourcesService {

    @Override
    public int saveHouseResources(HouseResources houseResources) {
        // Validation: a house resource must have a title
        if (StringUtils.isBlank(houseResources.getTitle())) {
            return -1;
        }
        return super.save(houseResources);
    }
}
```

Dubbo service implementation

Expose the add-house-resource Dubbo service by exporting the interface as a Dubbo service.

```java
package com.haoke.server.api;

import com.alibaba.dubbo.config.annotation.Service;
import com.haoke.server.pojo.HouseResources;
import com.haoke.server.service.HouseResourcesService;
import org.springframework.beans.factory.annotation.Autowired;

// Dubbo's @Service (not Spring's): exports this class as a Dubbo service
@Service(version = "${dubbo.service.version}")
public class ApiHaokeResourcesImpl implements ApiHouseResourcesService {

    @Autowired
    private HouseResourcesService resourcesService;

    @Override
    public int saveHouseResources(HouseResources houseResources) {
        // Delegate to the Spring service layer
        return this.resourcesService.saveHouseResources(houseResources);
    }
}
```

Starting the Dubbo service

Start the SpringBoot application to export the Dubbo service to the registry.

```java
package com.haoke.server;

import org.springframework.boot.WebApplicationType;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.builder.SpringApplicationBuilder;

@SpringBootApplication
public class DubboProvider {

    public static void main(String[] args) {
        new SpringApplicationBuilder(DubboProvider.class)
                .web(WebApplicationType.NONE) // not a web application
                .run(args);
    }
}
```

Starting DubboAdmin

```shell
cd /opt/incubator-dubbo-ops/
mvn --projects dubbo-admin-server spring-boot:run
```

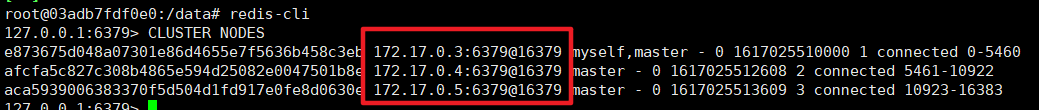

Look up the Dubbo service provider

dubbo-provider-house-resources, on port 20880

Service consumer haoke-manage-api-server

It provides RESTful interfaces to the front-end system.

It is the Dubbo consumer.

Adding dependencies

Because this module is the Dubbo consumer, it needs a dependency on the interfaces and POJOs supplied by the Dubbo provider.

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <!-- Dubbo service interfaces and POJOs published by the provider -->
    <dependency>
        <groupId>com.haoke.manage</groupId>
        <artifactId>haoke-manage-dubbo-server-house-resources-interface</artifactId>
        <version>1.0-SNAPSHOT</version>
    </dependency>
</dependencies>
```

Consumer configuration file

```properties
# Spring Boot application
spring.application.name = haoke-manage-api-server
server.port = 9091

# Dubbo application name
dubbo.application.name = dubbo-consumer-haoke-manage

# ZooKeeper registry
dubbo.registry.address = zookeeper://8.140.130.91:2181
dubbo.registry.client = zkclient

# Version of the Dubbo service to consume
dubbo.service.version = 1.0.0
```

Service consumer

HouseResourceService is used to call the Dubbo service.

```java
package com.haoke.api.service;

import com.alibaba.dubbo.config.annotation.Reference;
import com.haoke.server.api.ApiHouseResourcesService;
import com.haoke.server.pojo.HouseResources;
import org.springframework.stereotype.Service;

@Service
public class HouseResourceService {

    // Remote proxy for the Dubbo service exported by the provider
    @Reference(version = "${dubbo.service.version}")
    private ApiHouseResourcesService apiHouseResourcesService;

    public boolean save(HouseResources houseResources) {
        int result = this.apiHouseResourcesService.saveHouseResources(houseResources);
        // Exactly one inserted row means success
        return result == 1;
    }
}
```

Controller layer

```java
package com.haoke.api.controller;

import com.haoke.api.service.HouseResourceService;
import com.haoke.server.pojo.HouseResources;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.*;

@RequestMapping("house/resources")
@Controller
public class HouseResourcesController {

    @Autowired
    private HouseResourceService houseResourceService;

    /** Adds a house resource: 201 on success, 500 otherwise. */
    @PostMapping
    @ResponseBody
    public ResponseEntity<Void> save(@RequestBody HouseResources houseResources) {
        try {
            boolean bool = this.houseResourceService.save(houseResources);
            if (bool) {
                return ResponseEntity.status(HttpStatus.CREATED).build();
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).build();
    }

    /** Simple endpoint for checking that the controller is reachable. */
    @GetMapping
    @ResponseBody
    public ResponseEntity<String> get() {
        System.out.println("get House Resources");
        return ResponseEntity.ok("ok");
    }
}
```
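The save flow passes a result code through three layers: the provider returns -1 or a row count, the consumer service turns that into a boolean, and the controller turns the boolean into an HTTP status. The sketch below traces that chain in plain Java. It is a simplification under stated assumptions: the method names are hypothetical, the database insert is replaced by a constant 1, and the blank check treats null, empty, and whitespace-only titles as blank, which may differ from the `StringUtils.isBlank` used in the project.

```java
class SaveResultDemo {

    // Provider side: reject a blank title with -1, otherwise report one inserted row.
    static int saveHouseResources(String title) {
        if (title == null || title.trim().isEmpty()) {
            return -1;
        }
        return 1; // stands in for the row count returned by the mapper
    }

    // Consumer service: success means exactly one row was inserted.
    static boolean save(String title) {
        return saveHouseResources(title) == 1;
    }

    // Controller: 201 Created on success, 500 Internal Server Error otherwise.
    static int httpStatus(String title) {
        try {
            if (save(title)) {
                return 201;
            }
        } catch (RuntimeException e) {
            // A failed remote call ends in the same 500 response
        }
        return 500;
    }

    public static void main(String[] args) {
        System.out.println(httpStatus("Two-bedroom near the metro"));
        System.out.println(httpStatus(""));
    }
}
```

Note that with this mapping a validation failure and a server fault are indistinguishable to the client, since both produce 500.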

Test application

```java
package com.haoke.api;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;

// The consumer has no database of its own, so data source auto-configuration is excluded
@SpringBootApplication(exclude = {DataSourceAutoConfiguration.class})
public class DubboApiApplication {

    public static void main(String[] args) {
        SpringApplication.run(DubboApiApplication.class, args);
    }
}
```

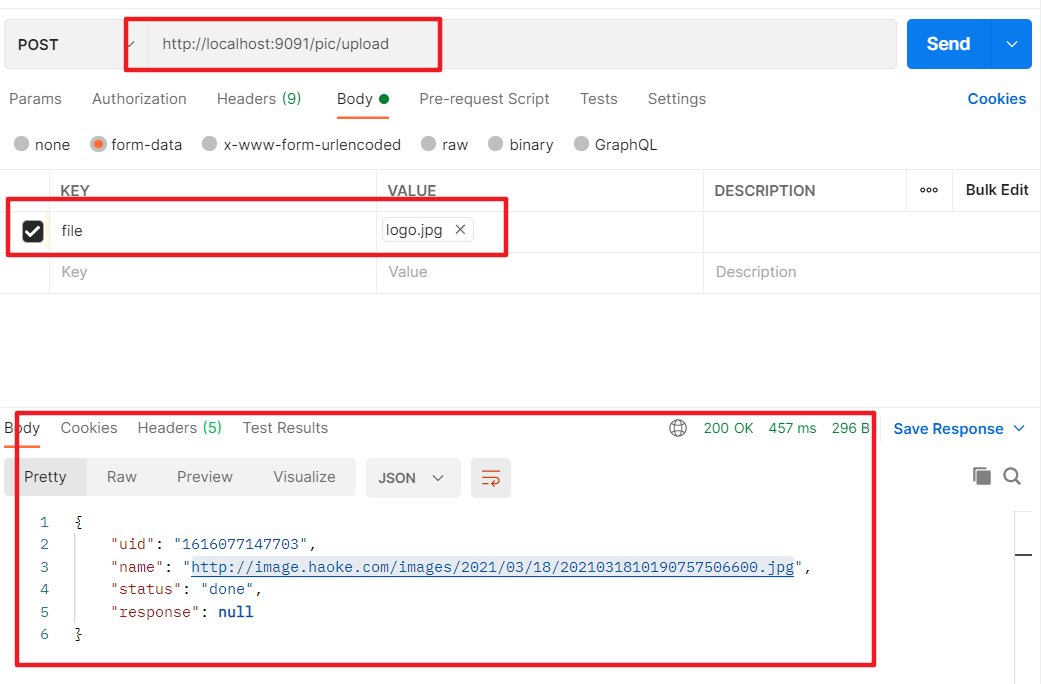

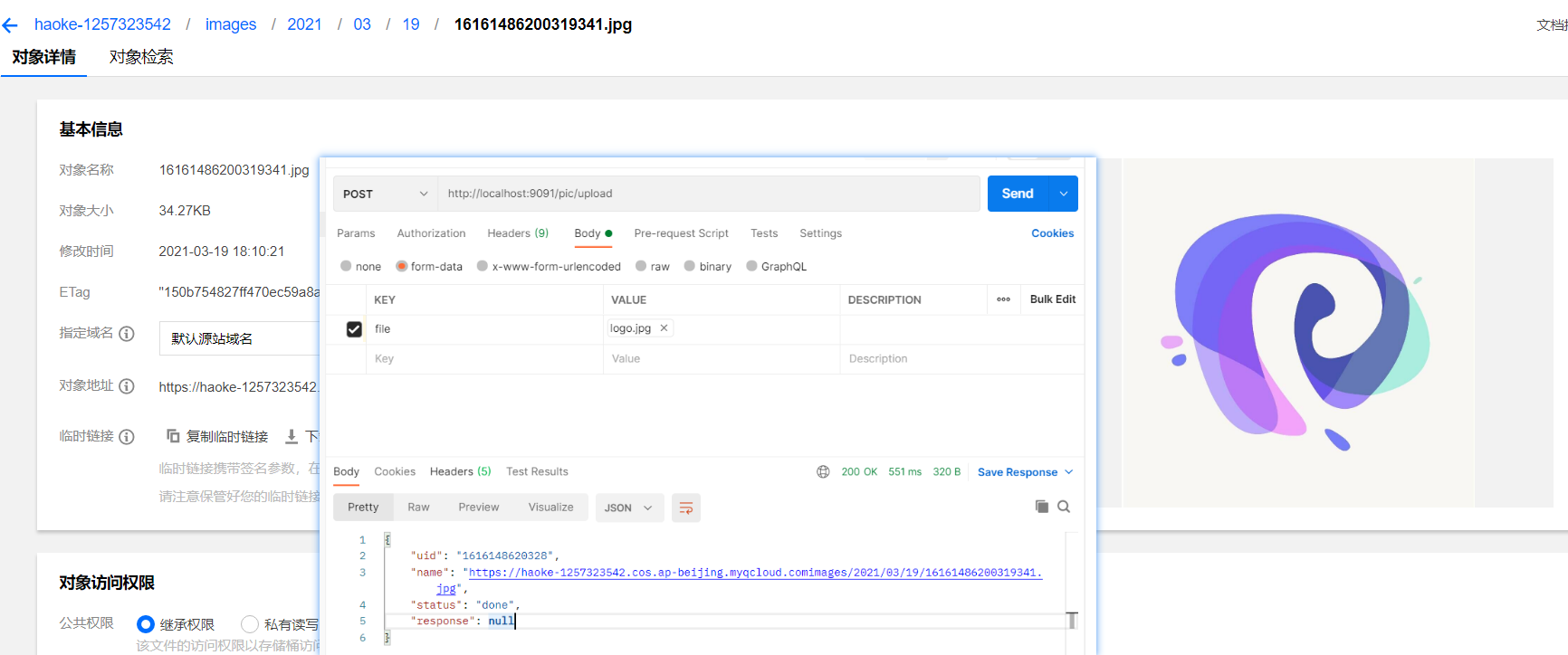

Testing the interface

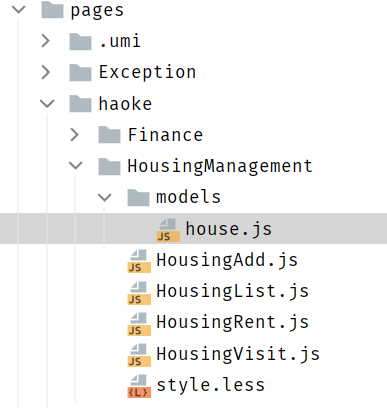

Front-end and back-end integration

Adding a model

Create a models folder

```javascript
import { routerRedux } from 'dva/router';
import { message } from 'antd';
import { addHouseResource } from '@/services/haoke/haoke';

export default {
  namespace: 'house',

  state: {},

  effects: {
    // Submits the form payload to the back end
    *submitHouseForm({ payload }, { call }) {
      console.log("page model")
      yield call(addHouseResource, payload);
      message.success('Submitted successfully');
    },
  },

  reducers: {},
};
```

Adding services

```javascript
import request from '@/utils/request';

// POSTs a new house resource through the /haoke proxy prefix
export async function addHouseResource(params) {
  return request('/haoke/house/resources', {
    method: 'POST',
    body: params,
  });
}
```

Changing the form submission address

```javascript
handleSubmit = e => {
  const { dispatch, form } = this.props;
  e.preventDefault();
  form.validateFieldsAndScroll((err, values) => {
    if (!err) {
      // Facilities are stored as a comma-separated string, e.g. "1,2,3"
      if (values.facilities) {
        values.facilities = values.facilities.join(",");
      }
      // Floor is stored as "current/total", e.g. "8/26"
      if (values.floor_1 && values.floor_2) {
        values.floor = `${values.floor_1}/${values.floor_2}`;
      }

      // Layout string in the format the table expects, e.g. 2室1厅1卫
      // (the balcony count comes from houseType_5)
      values.houseType = `${values.houseType_1}室${values.houseType_2}厅${values.houseType_3}卫${values.houseType_4}厨${values.houseType_5}阳台`;

      // Remove the helper fields that only exist in the form
      delete values.floor_1;
      delete values.floor_2;
      delete values.houseType_1;
      delete values.houseType_2;
      delete values.houseType_3;
      delete values.houseType_4;
      delete values.houseType_5;

      dispatch({
        type: 'house/submitHouseForm',
        payload: values,
      });
    }
  });
};
```

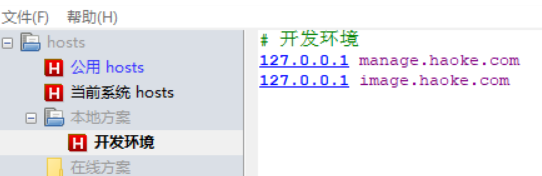

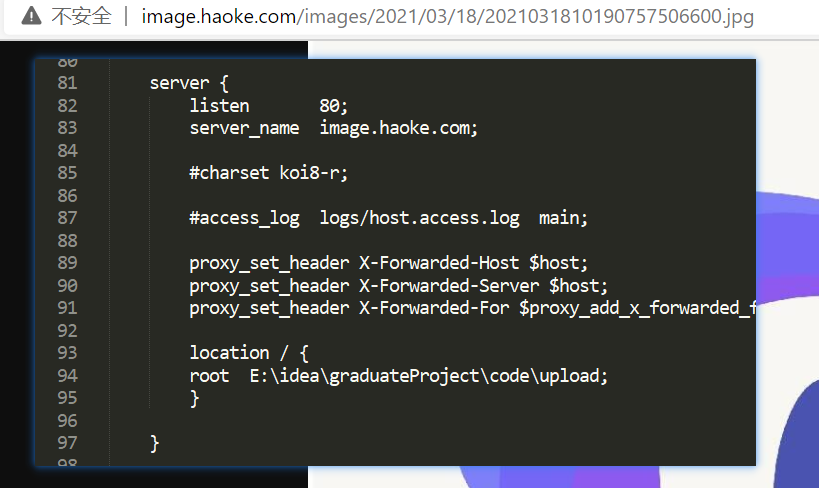

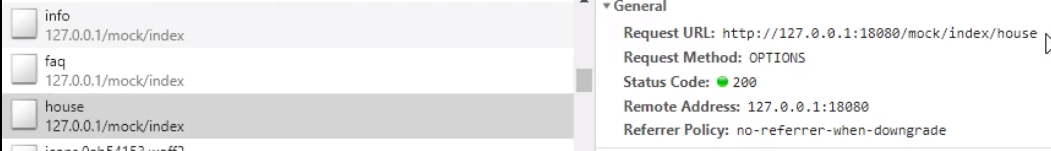

Solving the cross-origin problem with a reverse proxy

https://umijs.org/zh-CN/config#proxy

```javascript
proxy: {
  '/haoke/': {
    target: 'http://127.0.0.1:9091',
    changeOrigin: true,
    pathRewrite: { '^/haoke/': '' },
  },
},
```

Effect of the proxy:

Request: http://127.0.0.1:8000/haoke/house/resources

Actual: http://127.0.0.1:9091/house/resources
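The rewrite from the requested URL to the actual URL can be modelled in a few lines of Java. This is a simplified model of the behaviour described above, not the implementation used by umi or its proxy middleware; `ProxyRewriteDemo`, `shouldProxy`, and `rewrite` are hypothetical names, and the target is taken from the "actual" URL.

```java
class ProxyRewriteDemo {

    // Only paths under the configured context are forwarded to the back end.
    static boolean shouldProxy(String requestPath, String context) {
        return requestPath.startsWith(context);
    }

    // Applies the pathRewrite rule, then joins the result onto the target with exactly one slash.
    static String rewrite(String requestPath, String pattern, String replacement, String target) {
        String path = requestPath.replaceFirst(pattern, replacement);
        if (!path.startsWith("/")) {
            path = "/" + path;
        }
        String base = target.endsWith("/") ? target.substring(0, target.length() - 1) : target;
        return base + path;
    }

    public static void main(String[] args) {
        String requestPath = "/haoke/house/resources";
        if (shouldProxy(requestPath, "/haoke/")) {
            System.out.println(rewrite(requestPath, "^/haoke/", "", "http://127.0.0.1:9091"));
        }
    }
}
```

Because the browser only ever talks to the development server on port 8000, it never issues a cross-origin request; the hop to port 9091 happens on the server side.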

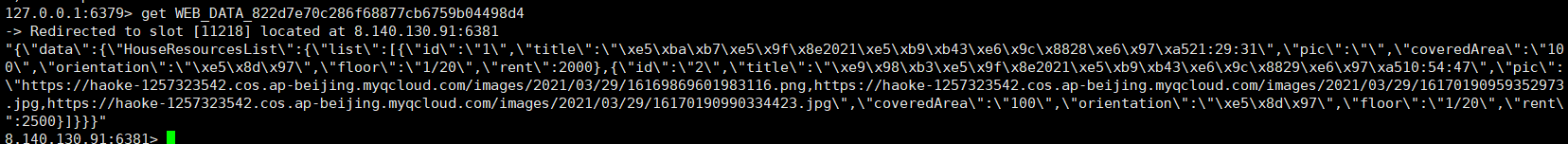

House resource list

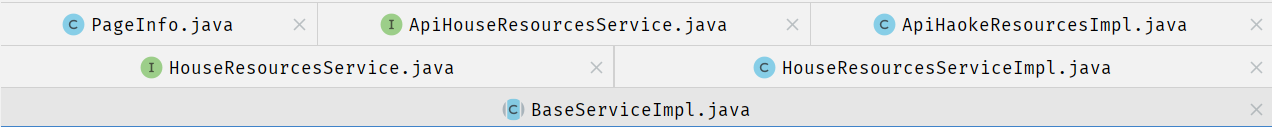

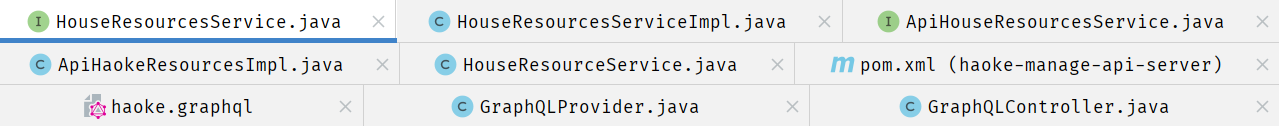

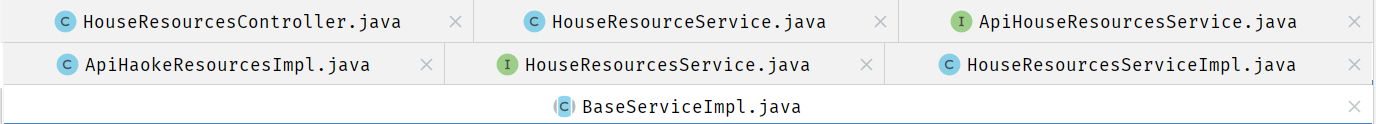

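PageInfo: the data returned to the service consumer

ApiHouseResourcesService: the Dubbo service interface exposed by the provider

ApiHaokeResourcesImpl: the provider's implementation of the Dubbo service

HouseResourcesService: the Spring service layer definition

HouseResourcesServiceImpl: the implementation of the Spring business logic

BaseServiceImpl: database access through Mybatisplus

1. Define the Dubbo service

haoke-manage-dubbo-server-house-resources-interface

Dubbo service provider interface

```java
package com.haoke.server.api;

import com.haoke.server.pojo.HouseResources;
import com.haoke.server.vo.PageInfo;

public interface ApiHouseResourcesService {

    /**
     * @param houseResources the house resource to save
     * @return -1 if the input is invalid, otherwise the number of rows inserted
     */
    int saveHouseResources(HouseResources houseResources);

    /**
     * Queries one page of house resources.
     *
     * @param page           current page
     * @param pageSize       page size
     * @param queryCondition query condition
     * @return the requested page
     */
    PageInfo<HouseResources> queryHouseResourcesList(int page, int pageSize, HouseResources queryCondition);
}
```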

2. Dubbo provider

haoke-manage-dubbo-server-house-resources-service

1. Define the data model

The service provider wraps the data it returns.

```java
package com.haoke.server.vo;

import lombok.AllArgsConstructor;
import lombok.Data;

import java.util.Collections;
import java.util.List;

@Data
@AllArgsConstructor
public class PageInfo<T> implements java.io.Serializable {

    // Total number of records
    private Integer total;

    // Current page
    private Integer pageNum;

    // Page size
    private Integer pageSize;

    // Records on the current page
    private List<T> records = Collections.emptyList();
}
```

2. Service provider implementation

The Dubbo service implementation simply calls the Spring service layer.

```java
package com.haoke.server.api;

import com.alibaba.dubbo.config.annotation.Service;
import com.haoke.server.pojo.HouseResources;
import com.haoke.server.service.HouseResourcesService;
import com.haoke.server.vo.PageInfo;
import org.springframework.beans.factory.annotation.Autowired;

@Service(version = "${dubbo.service.version}")
public class ApiHaokeResourcesImpl implements ApiHouseResourcesService {

    @Autowired
    private HouseResourcesService houseResourcesService;

    @Override
    public int saveHouseResources(HouseResources houseResources) {
        return this.houseResourcesService.saveHouseResources(houseResources);
    }

    @Override
    public PageInfo<HouseResources> queryHouseResourcesList(int page, int pageSize, HouseResources queryCondition) {
        return this.houseResourcesService.queryHouseResourcesList(page, pageSize, queryCondition);
    }
}
```

3. List business implementation

Dao layer

mybatisplus fetches the data from the database.

```java
@Override
public PageInfo<HouseResources> queryHouseResourcesList(int page, int pageSize, HouseResources queryCondition) {
    QueryWrapper<HouseResources> queryWrapper = new QueryWrapper<HouseResources>(queryCondition);
    // Most recently updated records first
    queryWrapper.orderByDesc("updated");

    IPage iPage = super.queryPageList(queryWrapper, page, pageSize);
    return new PageInfo<HouseResources>(Long.valueOf(iPage.getTotal()).intValue(), page, pageSize, iPage.getRecords());
}
```

Service layer

The Spring service layer implements the list query.

Spring service layer definition

```java
package com.haoke.server.service;

import com.haoke.server.pojo.HouseResources;
import com.haoke.server.vo.PageInfo;

public interface HouseResourcesService {

    /**
     * @param houseResources the house resource to save
     * @return -1 if the input is invalid, otherwise the number of rows inserted
     */
    int saveHouseResources(HouseResources houseResources);

    /** Queries one page of house resources matching queryCondition. */
    PageInfo<HouseResources> queryHouseResourcesList(int page, int pageSize, HouseResources queryCondition);
}
```

Spring service layer implementation

```java
package com.haoke.server.service.impl;

import com.alibaba.dubbo.common.utils.StringUtils;
import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
import com.baomidou.mybatisplus.core.metadata.IPage;
import com.haoke.server.pojo.HouseResources;
import com.haoke.server.service.HouseResourcesService;
import com.haoke.server.vo.PageInfo;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

@Transactional
@Service
public class HouseResourcesServiceImpl extends BaseServiceImpl<HouseResources> implements HouseResourcesService {

    @Override
    public int saveHouseResources(HouseResources houseResources) {
        // Validation: a house resource must have a title
        if (StringUtils.isBlank(houseResources.getTitle())) {
            return -1;
        }
        return super.save(houseResources);
    }

    @Override
    public PageInfo<HouseResources> queryHouseResourcesList(int page, int pageSize, HouseResources queryCondition) {
        QueryWrapper<HouseResources> queryWrapper = new QueryWrapper<>(queryCondition);
        // Most recently updated records first
        queryWrapper.orderByDesc("updated");

        IPage<HouseResources> iPage = super.queryPageList(queryWrapper, page, pageSize);
        return new PageInfo<HouseResources>(Long.valueOf(iPage.getTotal()).intValue(), page, pageSize, iPage.getRecords());
    }
}
```
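The paging arithmetic behind a page query can be shown on an in-memory list. This is only a sketch of the idea: in the project the slicing is done in SQL by MyBatis-Plus (which normally requires its pagination interceptor to be registered), and the `PagingDemo` class with its `page` and `totalPages` helpers is hypothetical. The nested `PageInfo` mirrors the fields of the VO above without Lombok.

```java
import java.util.ArrayList;
import java.util.List;

class PagingDemo {

    // Same four fields as the PageInfo VO.
    static class PageInfo<T> {
        final int total;
        final int pageNum;
        final int pageSize;
        final List<T> records;

        PageInfo(int total, int pageNum, int pageSize, List<T> records) {
            this.total = total;
            this.pageNum = pageNum;
            this.pageSize = pageSize;
            this.records = records;
        }
    }

    // Returns page pageNum (1-based) of the full result list.
    static <T> PageInfo<T> page(List<T> all, int pageNum, int pageSize) {
        if (pageNum < 1 || pageSize < 1) {
            throw new IllegalArgumentException("pageNum and pageSize must be at least 1");
        }
        // Offset of the first record on the page, clamped to the list size
        int from = Math.min((pageNum - 1) * pageSize, all.size());
        int to = Math.min(from + pageSize, all.size());
        return new PageInfo<>(all.size(), pageNum, pageSize, new ArrayList<>(all.subList(from, to)));
    }

    // Number of pages needed for total records (ceiling division).
    static int totalPages(int total, int pageSize) {
        return (total + pageSize - 1) / pageSize;
    }

    public static void main(String[] args) {
        List<Integer> ids = new ArrayList<>();
        for (int i = 1; i <= 25; i++) {
            ids.add(i);
        }
        PageInfo<Integer> last = page(ids, 3, 10);
        System.out.println(last.records + " of " + last.total);
        System.out.println("pages: " + totalPages(last.total, last.pageSize));
    }
}
```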

3. Dubbo consumer

Implement the RESTful interface.

TableResult: the VO returned to the front end

Pagination: the paging information

HouseResourceService: calls the interface supplied by the service provider

HouseResourcesController: the interface the service consumer offers to the front end

1. Define the VOs

```java
@Data
@AllArgsConstructor
public class TableResult<T> {
    // Records on the current page
    private List<T> list;
    private Pagination pagination;
}

@Data
@AllArgsConstructor
public class Pagination {
    private Integer current;
    private Integer pageSize;
    private Integer total;
}
```

2. Call the service provider

```java
public TableResult<HouseResources> queryList(HouseResources houseResources, Integer currentPage, Integer pageSize) {
    PageInfo<HouseResources> pageInfo =
            this.apiHouseResourcesService.queryHouseResourcesList(currentPage, pageSize, houseResources);
    // Repackage the provider's PageInfo in the shape the front-end table expects
    return new TableResult<>(
            pageInfo.getRecords(),
            new Pagination(currentPage, pageSize, pageInfo.getTotal()));
}
```

3. Interface the service consumer offers to the front end

```java
/** Queries the house resource list. */
@GetMapping("/list")
@ResponseBody
public ResponseEntity<TableResult<HouseResources>> list(
        HouseResources houseResources,
        @RequestParam(name = "currentPage", defaultValue = "1") Integer currentPage,
        @RequestParam(name = "pageSize", defaultValue = "10") Integer pageSize) {
    return ResponseEntity.ok(this.houseResourceService.queryList(houseResources, currentPage, pageSize));
}
```

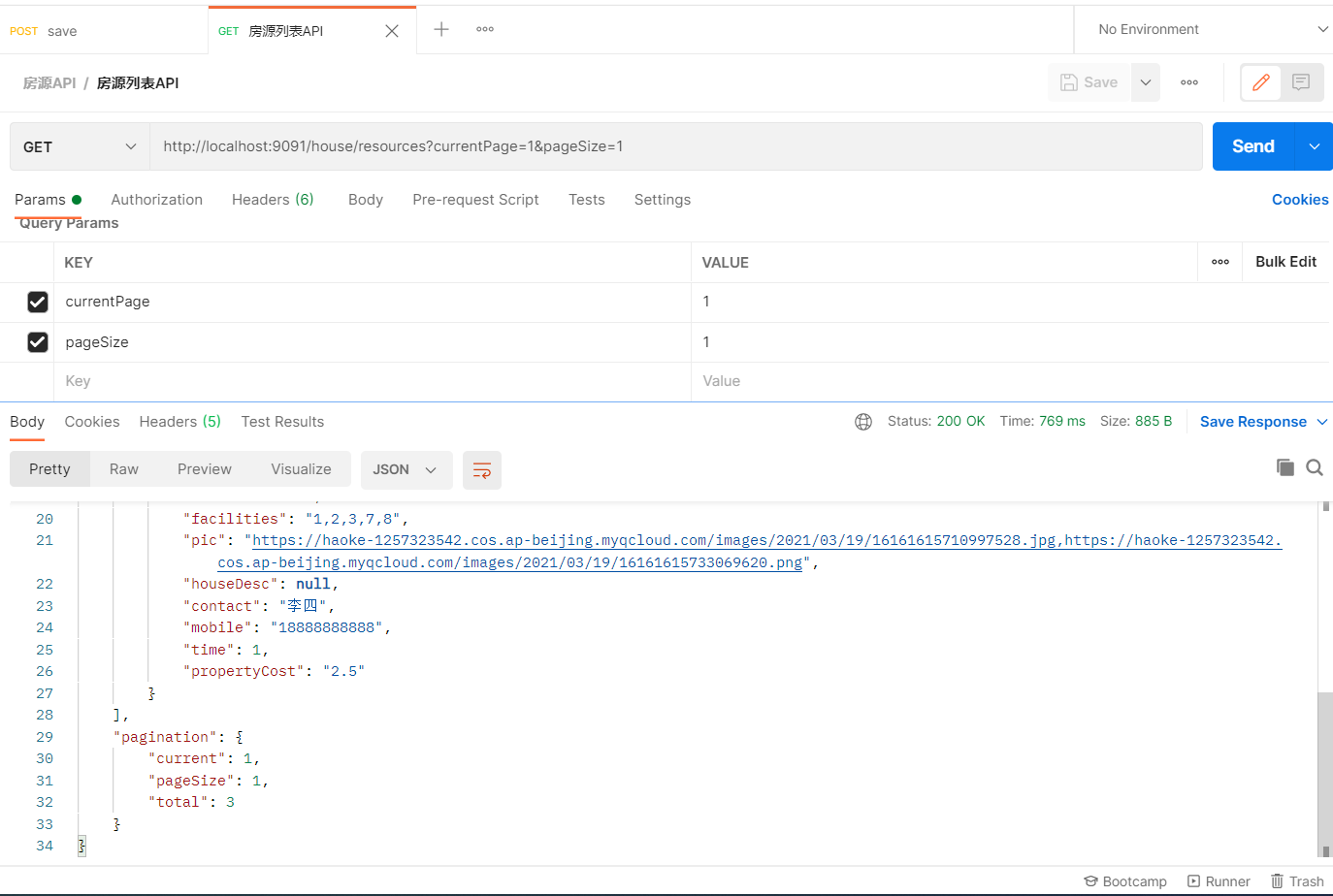

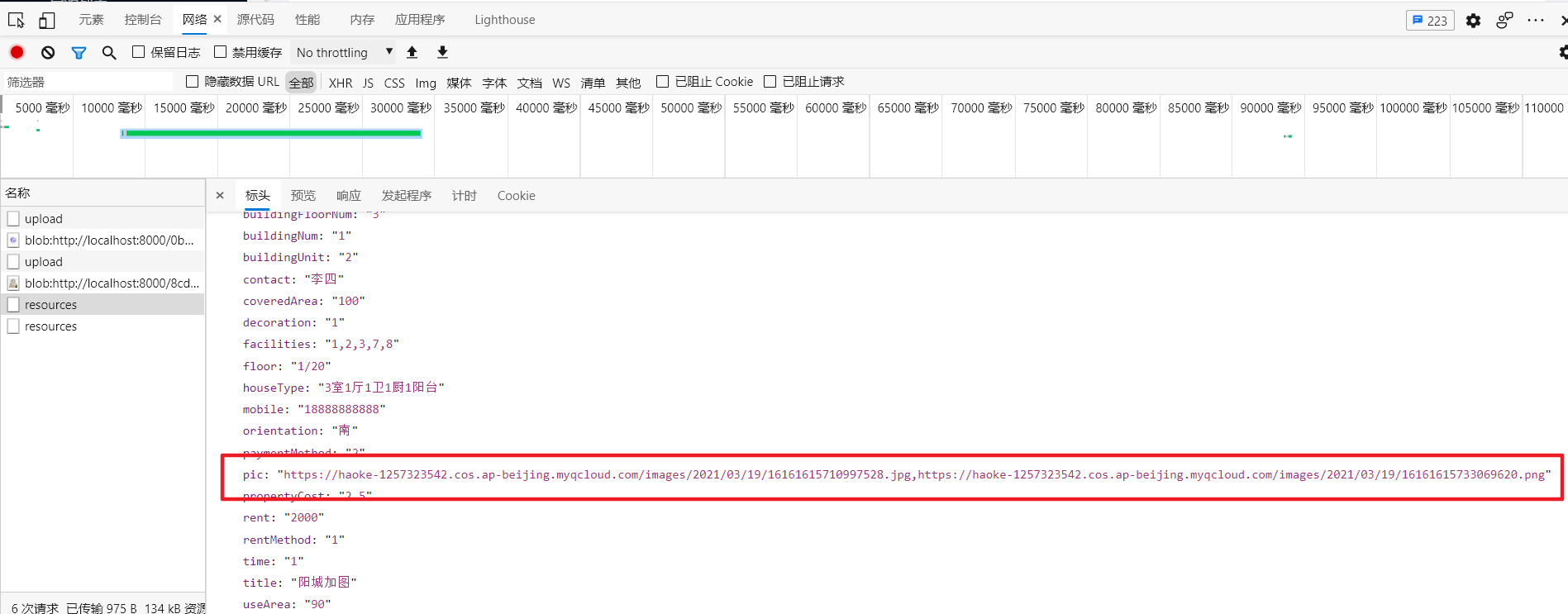

4. Test the interface

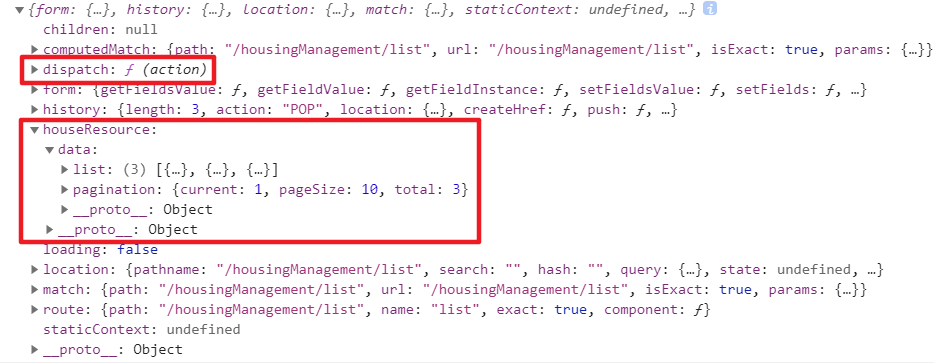

4. Front-end and back-end integration

1. Modify the front-end table structure

    columns = [
      {
        title: 'Listing ID',
        dataIndex: 'id',
      },
      {
        title: 'Listing info',
        dataIndex: 'title',
      },
      {
        title: 'Pictures',
        dataIndex: 'pic',
        render: (text, record, index) => <ShowPics pics={text} />
      },
      {
        title: 'Building',
        render: (text, record, index) =>
          `Building ${record.buildingFloorNum}, Unit ${record.buildingNum}, No. ${record.buildingUnit}`
      },
      {
        title: 'Layout',
        dataIndex: 'houseType'
      },
      {
        title: 'Area',
        dataIndex: 'useArea',
        render: (text, record, index) => `${text} m²`
      },
      {
        title: 'Floor',
        dataIndex: 'floor'
      },
      {
        title: 'Actions',
        render: (text, record) => (
          <Fragment>
            <a onClick={() => this.handleUpdateModalVisible(true, record)}>View</a>
            <Divider type="vertical" />
            <a href="">Delete</a>
          </Fragment>
        ),
      },
    ];

2. Custom picture display component

    import React from 'react';
    import { Modal, Button, Carousel } from 'antd';

    class ShowPics extends React.Component {

      // Open a modal that shows the listing pictures in a carousel
      info = () => {
        Modal.info({
          title: '',
          iconType: 'false',
          width: '800px',
          okText: "ok",
          content: (
            <div style={{width: 650, height: 400, lineHeight: 400, textAlign: "center"}}>
              <Carousel autoplay>
                {
                  // pics is a comma-separated list of image URLs
                  this.props.pics.split(',').map((value, index) =>
                    <div key={index}>
                      <img style={{maxWidth: 600, maxHeight: 400, margin: "0 auto"}} src={value} />
                    </div>
                  )
                }
              </Carousel>
            </div>
          ),
          onOk() {},
        });
      };

      constructor(props) {
        super(props);
        this.state = {
          // Disable the button when the listing has no pictures
          btnDisabled: !this.props.pics
        }
      }

      render() {
        return (
          <div>
            <Button disabled={this.state.btnDisabled} icon="picture" shape="circle" onClick={() => {this.info()}} />
          </div>
        )
      }
    }

    export default ShowPics;

3. Model layer

    import { queryResource } from '@/services/haoke/houseResource';

    export default {
      namespace: 'houseResource',

      state: {
        data: {
          list: [],
          pagination: {},
        },
      },

      effects: {
        // Fetch the listing page from the back end and store it in state
        *fetch({ payload }, { call, put }) {
          console.log("houseResource fetch")
          const response = yield call(queryResource, payload);
          yield put({
            type: 'save',
            payload: response,
          });
        }
      },

      reducers: {
        save(state, action) {
          return {
            ...state,
            data: action.payload,
          };
        },
      },
    };

4. Change the data request URL

    import request from '@/utils/request';
    import { stringify } from 'qs';

    export async function queryResource(params) {
      return request(`/haoke/house/resources/list?${stringify(params)}`);
    }

GraphQL

Goals: develop the listing (house resource) API with GraphQL and implement the listing list query.

Introduction

Official site

GraphQL is a specification for how the front end queries data from the back end.

Problems with RESTful APIs

    GET http://127.0.0.1/user/1    # query
    POST http://127.0.0.1/user     # create
    PUT http://127.0.0.1/user      # update
    DELETE http://127.0.0.1/user   # delete

Scenario 1:

Only some attributes of an object are needed, but the RESTful endpoint returns all of them.

    # request
    GET http:
    # response:
    {
      id: 1001,
      name: "张三",
      age: 20,
      address: "北京市",
      …
    }

Scenario 2:

A single requirement can only be met by issuing several requests.

    # query the user
    GET http:
    # response:
    {
      id: 1001,
      name: "张三",
      age: 20,
      address: "北京市",
      …
    }

    # query the user's ID-card information
    GET http:
    # response:
    {
      id: 8888,
      name: "张三",
      cardNumber: "999999999999999",
      address: "北京市",
      …
    }

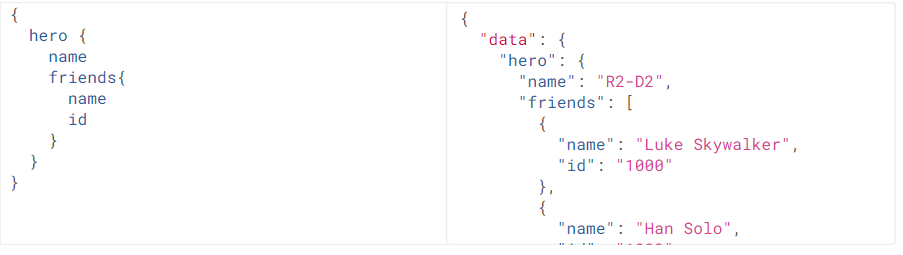

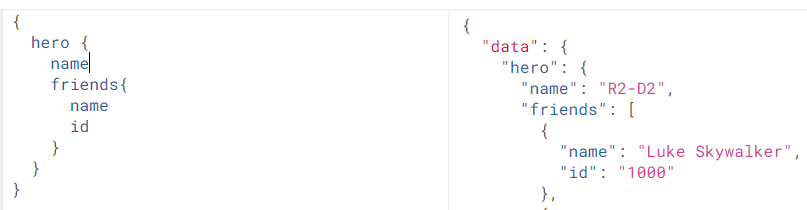

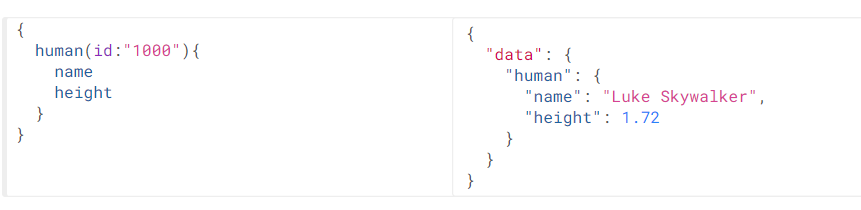

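Advantages of GraphQL

1. Fetch only the data you need

When the request lists only the name field, the response contains only name. If the request also lists appearsIn, the response returns the value of appearsIn as well.

Demo: https://graphql.cn/learn/schema/#type-system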

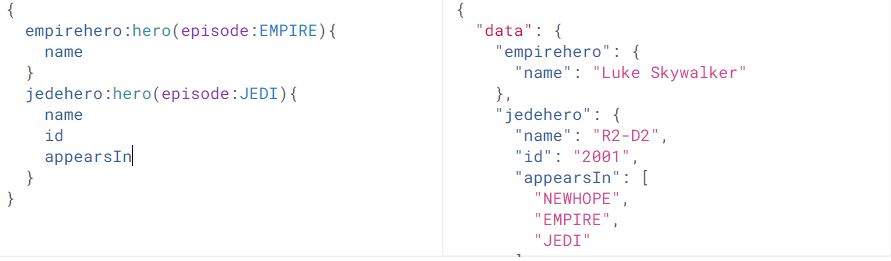
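The on-demand behaviour can be pictured with a small Java sketch that does not use any GraphQL library. It only mimics the idea that the caller names the fields and the response carries exactly those. `FieldSelectionSketch` and `select` are hypothetical names introduced for illustration, and the sample hero data is made up; a real GraphQL engine validates the query against the schema instead of silently skipping unknown fields.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only: this is not how a GraphQL engine is implemented.
class FieldSelectionSketch {

    // Returns a copy of `source` that contains only the requested fields,
    // in the order they were requested. Unknown field names are skipped.
    static Map<String, Object> select(Map<String, Object> source, List<String> requested) {
        Map<String, Object> result = new LinkedHashMap<>();
        for (String field : requested) {
            if (source.containsKey(field)) {
                result.put(field, source.get(field));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Object> hero = new LinkedHashMap<>();
        hero.put("name", "R2-D2");
        hero.put("appearsIn", Arrays.asList("NEWHOPE", "EMPIRE", "JEDI"));
        hero.put("primaryFunction", "Astromech");

        // Asking for name only returns name only
        System.out.println(select(hero, Arrays.asList("name")));
        // Adding appearsIn to the request adds it to the response
        System.out.println(select(hero, Arrays.asList("name", "appearsIn")));
    }
}
```

The full object still exists on the server; only the selection changes what travels over the network.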

2. Query several pieces of data at once

A single request returns not only the hero data but also the friends data, which saves network round trips.

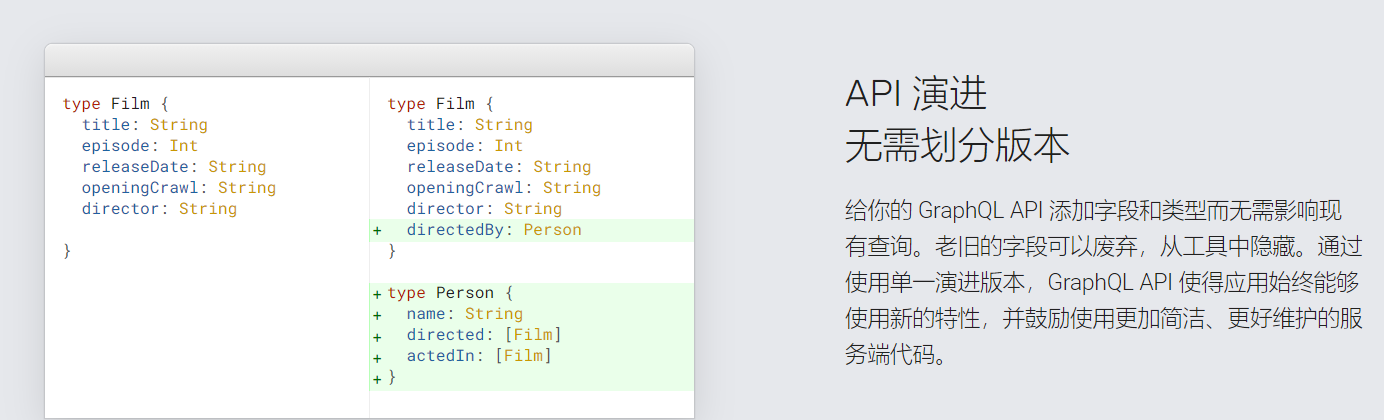

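3. The API can evolve without versioning

When the API is upgraded, clients do not have to upgrade at the same time; they can catch up later. This greatly reduces the coupling between client and server.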

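GraphQL query specification

GraphQL defines a specification that describes the query syntax: http://graphql.cn/learn/queries/

Specification $\neq$ implementation

Fields

In a GraphQL query, the shape of the request spells out the shape of the expected result; the names it lists are the fields. The response has essentially the same structure as the request. This is a defining trait of GraphQL: the caller knows exactly what it is asking for and what it will get back.

Arguments

Syntax: (argumentName: argumentValue)

Aliases

When one query asks for the same object several times with different argument values, each occurrence needs an alias. Otherwise the results would share the same key, which a JSON object cannot represent.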

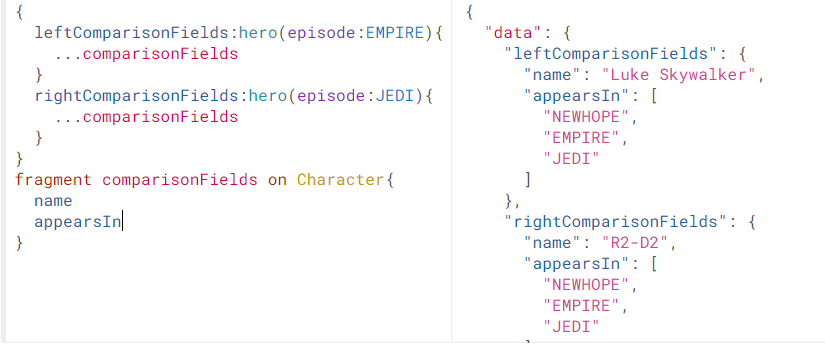
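Why the alias is needed can be seen from the shape of the response alone. The sketch below is a plain-Java illustration, not GraphQL code: a JSON object is modelled as a `Map`, and `AliasSketch` and `toResponse` are hypothetical names. The alias names `empireHero` and `jediHero` and the two hero names are used only as sample data.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration: a JSON object is modelled as a Map keyed by response name.
class AliasSketch {

    // Stores each value under its response key. A repeated key replaces the earlier
    // value, just as a JSON object cannot usefully hold two members with the same name.
    static Map<String, String> toResponse(String[] responseKeys, String[] values) {
        Map<String, String> response = new LinkedHashMap<>();
        for (int i = 0; i < responseKeys.length; i++) {
            response.put(responseKeys[i], values[i]);
        }
        return response;
    }

    public static void main(String[] args) {
        String[] heroes = {"Luke Skywalker", "R2-D2"};
        // Without aliases both results compete for the key "hero"
        System.out.println(toResponse(new String[] {"hero", "hero"}, heroes));
        // With aliases each result keeps its own key
        System.out.println(toResponse(new String[] {"empireHero", "jediHero"}, heroes));
    }
}
```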

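Fragments

If the queried objects share the same fields, a fragment can be used to define those fields once and reuse them.

GraphQL schema and type system

The schema defines the data structures.

https://graphql.cn/learn/schema/

Schema structure

Every GraphQL service has a query type and may have a mutation type. These two types are ordinary object types; they are special only because they define the entry points of every GraphQL query.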

    schema { # declare the query entry point
      query: UserQuery
    }

    type UserQuery { # the query type
      user(id: ID): User # the returned object and the argument type
    }

    type User { # an object type
      id: ID! # "!" marks the field as non-null
      name: String
      age: Int
    }

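Scalar types

- Int: a signed 32-bit integer.
- Float: a signed double-precision floating-point value.
- String: a UTF-8 character sequence.
- Boolean: true or false.
- ID: a unique identifier, typically used to refetch an object or as a cache key.

GraphQL also supports custom scalar types; the graphql-java implementation, for example, adds Long, Byte and others.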

Enumeration types

    enum Episode { # define an enum
      NEWHOPE
      EMPIRE
      JEDI
    }

    type Human {
      id: ID!
      name: String!
      appearsIn: [Episode]! # uses the enum type: a list of Episode
      homePlanet: String
    }

Interfaces

An interface is an abstract type that declares a set of fields. An object type implements the interface only if it contains all of those fields.

    interface Character { # define the interface
      id: ID!
      name: String!
      friends: [Character]
      appearsIn: [Episode]!
    }

    # implement the interface
    type Human implements Character {
      id: ID!
      name: String!
      friends: [Character]!
      appearsIn: [Episode]!
      starships: [Starship]!
      totalCredits: Int
    }

    type Droid implements Character {
      id: ID!
      name: String!
      friends: [Character]
      appearsIn: [Episode]!
      primaryFunction: String
    }
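For readers coming from Java, the relationship between a GraphQL interface and its implementing types is close to a Java interface and its classes. The sketch below is only an analogy under that assumption: `GraphQLInterfaceSketch`, `StarWarsCharacter` and `describe` are hypothetical names (the interface is not called `Character` to avoid clashing with `java.lang.Character`), and only two of the shared fields are modelled.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical Java analogy for the Character / Human / Droid schema types.
class GraphQLInterfaceSketch {

    // Plays the role of the GraphQL interface: fields every implementation must expose.
    interface StarWarsCharacter {
        String id();
        String name();
    }

    // Human adds its own field (totalCredits) on top of the shared ones.
    static class Human implements StarWarsCharacter {
        private final String id;
        private final String name;
        private final Integer totalCredits;

        Human(String id, String name, Integer totalCredits) {
            this.id = id;
            this.name = name;
            this.totalCredits = totalCredits;
        }

        public String id() { return id; }
        public String name() { return name; }
        Integer totalCredits() { return totalCredits; }
    }

    // Droid adds primaryFunction instead.
    static class Droid implements StarWarsCharacter {
        private final String id;
        private final String name;
        private final String primaryFunction;

        Droid(String id, String name, String primaryFunction) {
            this.id = id;
            this.name = name;
            this.primaryFunction = primaryFunction;
        }

        public String id() { return id; }
        public String name() { return name; }
        String primaryFunction() { return primaryFunction; }
    }

    // Code written against the interface can only rely on the shared fields.
    static String describe(StarWarsCharacter character) {
        return character.id() + ":" + character.name();
    }

    public static void main(String[] args) {
        List<StarWarsCharacter> characters = Arrays.asList(
                new Human("1000", "Luke Skywalker", null),
                new Droid("2001", "R2-D2", "Astromech"));
        for (StarWarsCharacter character : characters) {
            System.out.println(describe(character));
        }
    }
}
```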

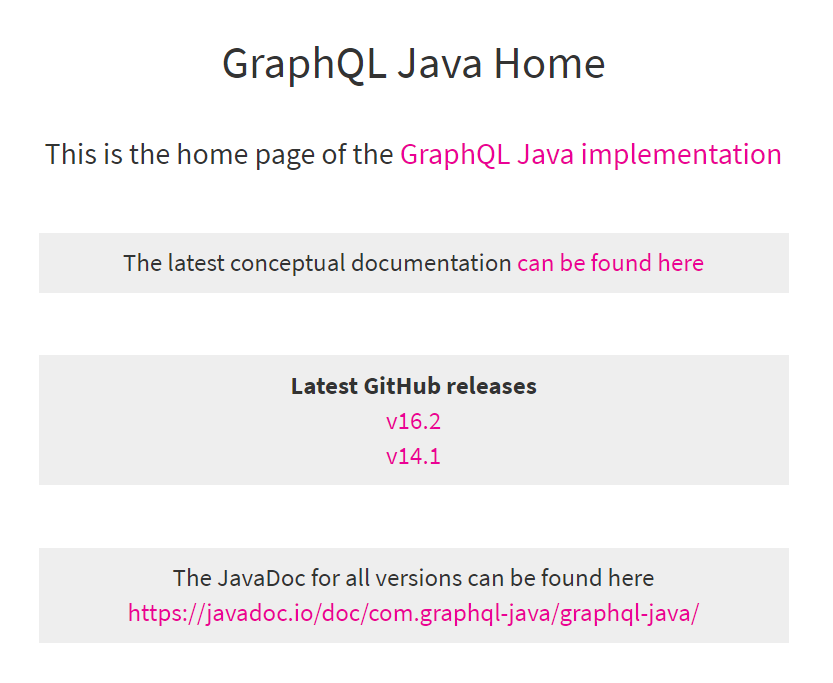

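Java implementation of GraphQL

The GraphQL project only defines the specification and ships no implementation, so a third-party implementation is needed.

Official site: https://www.graphql-java.com/

https://www.graphql-java.com/documentation/v16/getting-started/

In this walkthrough graphql-java is downloaded from a third-party repository, which has to be added to the POM before the dependency can be resolved. (graphql-java releases are also published to Maven Central, so the extra repository may not be necessary.)

Maven: if a mirror is configured under mirrors in settings.xml, the third-party repository configuration does not take effect.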

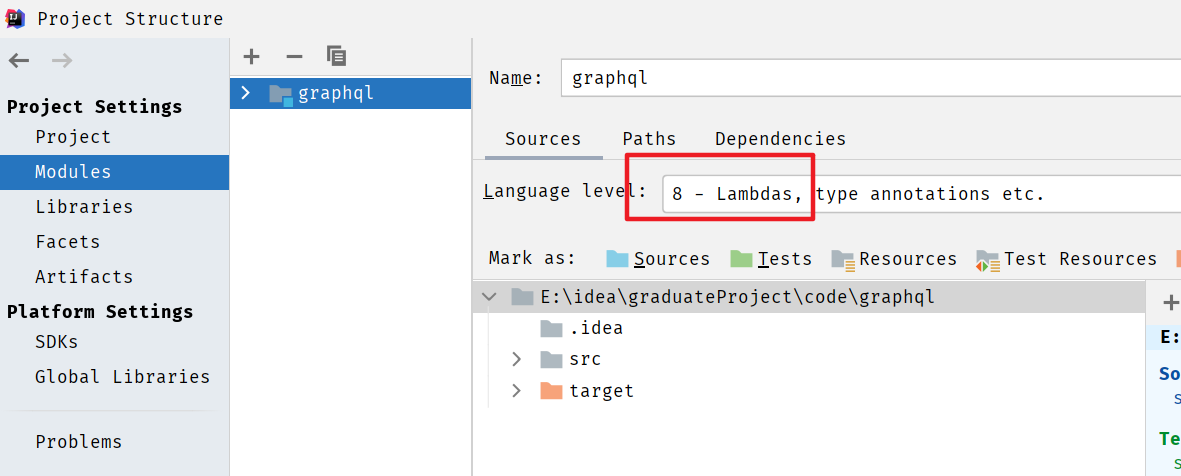

1. Import the dependencies

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>org.example</groupId>
        <artifactId>graphql</artifactId>
        <version>1.0-SNAPSHOT</version>

        <repositories>
            <repository>
                <snapshots>
                    <enabled>false</enabled>
                </snapshots>
                <id>bintray-andimarek-graphql-java</id>
                <name>bintray</name>
                <url>https://dl.bintray.com/andimarek/graphql-java</url>
            </repository>
        </repositories>

        <dependencies>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
            </dependency>
            <dependency>
                <groupId>com.graphql-java</groupId>
                <artifactId>graphql-java</artifactId>
                <version>11.0</version>
            </dependency>
        </dependencies>
    </project>

2. Install the plugin

    schema {
      query: UserQuery
    }

    type UserQuery {
      user(id: ID): User
    }

    type User {
      id: ID!
      name: String
      age: Int
    }

Java API implementation

Returning fields on demand

    import graphql.ExecutionResult;
    import graphql.GraphQL;
    import graphql.schema.GraphQLObjectType;
    import graphql.schema.GraphQLSchema;
    import graphql.schema.StaticDataFetcher;

    import static graphql.Scalars.GraphQLInt;
    import static graphql.Scalars.GraphQLLong;
    import static graphql.Scalars.GraphQLString;
    import static graphql.schema.GraphQLFieldDefinition.newFieldDefinition;
    import static graphql.schema.GraphQLObjectType.newObject;

    public class GraphQLDemo {

        public static void main(String[] args) {
            // Object type: type User { id, name, age }
            GraphQLObjectType userType = newObject()
                    .name("User")
                    .field(newFieldDefinition().name("id").type(GraphQLLong))
                    .field(newFieldDefinition().name("name").type(GraphQLString))
                    .field(newFieldDefinition().name("age").type(GraphQLInt))
                    .build();

            // Query type: the user field always returns the same static object
            GraphQLObjectType userQuery = newObject()
                    .name("userQuery")
                    .field(newFieldDefinition()
                            .name("user")
                            .type(userType)
                            .dataFetcher(new StaticDataFetcher(new User(1L, "张三", 20)))
                    )
                    .build();

            GraphQLSchema graphQLSchema = GraphQLSchema.newSchema()
                    .query(userQuery)
                    .build();

            GraphQL graphQL = GraphQL.newGraphQL(graphQLSchema).build();

            // Only id and name are requested, so age is not returned
            String query = "{user{id,name}}";
            ExecutionResult executionResult = graphQL.execute(query);

            System.out.println("errors: " + executionResult.getErrors());
            System.out.println("result: " + (Object) executionResult.toSpecification());
        }
    }

Setting query arguments

    public class GraphQLDemo {

        public static void main(String[] args) {
            GraphQLObjectType userType = newObject()
                    .name("User")
                    .field(newFieldDefinition().name("id").type(GraphQLLong))
                    .field(newFieldDefinition().name("name").type(GraphQLString))
                    .field(newFieldDefinition().name("age").type(GraphQLInt))
                    .build();

            GraphQLObjectType userQuery = newObject()
                    .name("userQuery")
                    .field(newFieldDefinition()
                            .name("user")
                            // Declare the id argument; the type is the GraphQLLong scalar, not a string
                            .argument(GraphQLArgument.newArgument()
                                    .name("id")
                                    .type(GraphQLLong)
                            )
                            .type(userType)
                            .dataFetcher(environment -> {
                                // Read the argument and build the result from it
                                Long id = environment.getArgument("id");
                                return new User(id, "张三", id.intValue() + 10);
                            })
                    )
                    .build();

            GraphQLSchema graphQLSchema = GraphQLSchema.newSchema()
                    .query(userQuery)
                    .build();

            GraphQL graphQL = GraphQL.newGraphQL(graphQLSchema).build();

            String query = "{user(id:100){id,name,age}}";
            ExecutionResult executionResult = graphQL.execute(query);

            System.out.println("errors: " + executionResult.getErrors());
            System.out.println("result: " + (Object) executionResult.toSpecification());
        }
    }

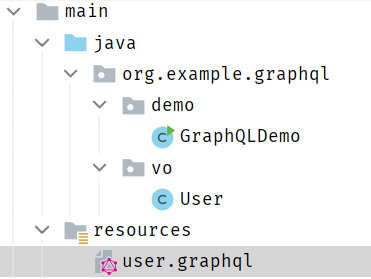

3. Building the schema with SDL

With SDL, the schema is written in a GraphQL definition file, which is then converted into a Java schema.

    schema {
      query: UserQuery
    }

    type UserQuery {
      user(id: ID): User
    }

    type User {
      id: ID!
      name: String
      age: Int
      card: Card
    }

    type Card {
      cardNumber: String!
      userId: ID
    }

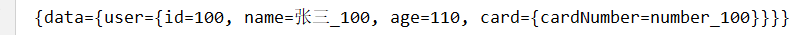

    import graphql.ExecutionResult;
    import graphql.GraphQL;
    import graphql.schema.GraphQLSchema;
    import graphql.schema.idl.RuntimeWiring;
    import graphql.schema.idl.SchemaGenerator;
    import graphql.schema.idl.SchemaParser;
    import graphql.schema.idl.TypeDefinitionRegistry;
    import org.apache.commons.io.IOUtils;

    import java.io.IOException;

    public class GraphQLSDLDemo {

        public static void main(String[] args) throws IOException {
            // Read the SDL file from the classpath
            String fileName = "user.graphql";
            String fileContent = IOUtils.toString(
                    GraphQLSDLDemo.class.getClassLoader().getResource(fileName), "UTF-8");
            TypeDefinitionRegistry tyRegistry = new SchemaParser().parse(fileContent);

            // Bind the user field of UserQuery to a data fetcher
            RuntimeWiring wiring = RuntimeWiring.newRuntimeWiring()
                    .type("UserQuery", builder ->
                            builder.dataFetcher("user", environment -> {
                                // An ID argument arrives as a String
                                Long id = Long.parseLong(environment.getArgument("id"));
                                Card card = new Card("number_" + id, id);
                                return new User(id, "张三_" + id, id.intValue() + 10, card);
                            })
                    )
                    .build();

            GraphQLSchema graphQLSchema = new SchemaGenerator().makeExecutableSchema(tyRegistry, wiring);

            GraphQL graphQL = GraphQL.newGraphQL(graphQLSchema).build();

            String query = "{user(id:100){id,name,age,card{cardNumber}}}";
            ExecutionResult executionResult = graphQL.execute(query);

            System.out.println(executionResult.toSpecification());
        }
    }

Query a listing by id

Dubbo service provider

HouseResourcesService: the Spring service interface

    public HouseResources queryHouseResourcesById(Long id);

HouseResourcesServiceImpl: the Spring service implementation

    @Override
    public HouseResources queryHouseResourcesById(Long id) {
        return (HouseResources) super.queryById(id);
    }

ApiHouseResourcesService: the Dubbo provider interface

    HouseResources queryHouseResourcesById(Long id);

ApiHaokeResourcesImpl: the Dubbo provider implementation

    @Override
    public HouseResources queryHouseResourcesById(Long id) {
        return houseResourcesService.queryHouseResourcesById(id);
    }

Dubbo service consumer

HouseResourceService

    @Reference(version = "${dubbo.service.version}")
    private ApiHouseResourcesService apiHouseResourcesService;

    public HouseResources queryHouseResourcesById(Long id) {
        // Call the remote Dubbo service
        return this.apiHouseResourcesService.queryHouseResourcesById(id);
    }

GraphQL interface

Import the dependency

    <repositories>
        <repository>
            <snapshots>
                <enabled>false</enabled>
            </snapshots>
            <id>bintray-andimarek-graphql-java</id>
            <name>bintray</name>
            <url>https://dl.bintray.com/andimarek/graphql-java</url>
        </repository>
    </repositories>

    <dependency>
        <groupId>com.graphql-java</groupId>
        <artifactId>graphql-java</artifactId>
        <version>16.0</version>
    </dependency>

GraphQL definition: haoke.graphql

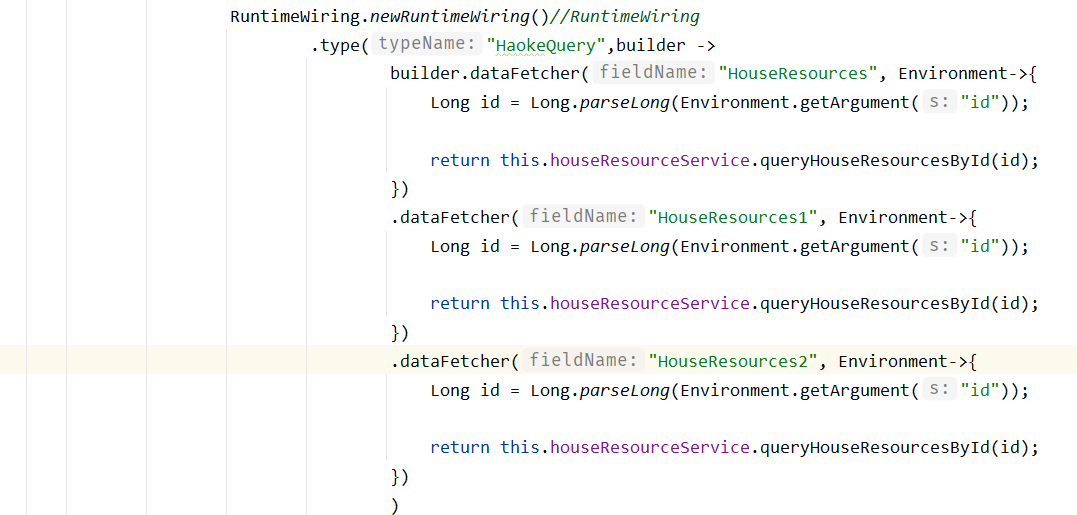

    schema {
      query: HaokeQuery
    }

    type HaokeQuery {
      HouseResources(id: ID): HouseResources
    }

    type HouseResources {
      id: ID!
      title: String
      estateId: ID
      buildingNum: String
      buildingUnit: String
      buildingFloorNum: String
      rent: Int
      rentMethod: Int
      paymentMethod: Int
      houseType: String
      coveredArea: String
      useArea: String
      floor: String
      orientation: String
      decoration: Int
      facilities: String
      pic: String
      houseDesc: String
      contact: String
      mobile: String
      time: Int
      propertyCost: String
    }

GraphQL component: exposing graphql as a Bean

    @Component
    public class GraphQLProvider {

        private GraphQL graphQL;

        @Autowired
        private HouseResourceService houseResourceService;

        @PostConstruct
        public void init() throws FileNotFoundException {
            // Parse the SDL file and bind the HouseResources query to its data fetcher
            File file = ResourceUtils.getFile("classpath:haoke.graphql");
            this.graphQL = GraphQL.newGraphQL(
                    new SchemaGenerator().makeExecutableSchema(
                            new SchemaParser().parse(file),
                            RuntimeWiring.newRuntimeWiring()
                                    .type("HaokeQuery", builder ->
                                            builder.dataFetcher("HouseResources", environment -> {
                                                Long id = Long.parseLong(environment.getArgument("id"));
                                                return this.houseResourceService.queryHouseResourcesById(id);
                                            })
                                    )
                                    .build()
                    )
            ).build();
        }

        @Bean
        GraphQL graphQL() {
            return this.graphQL;
        }
    }

Expose the endpoint

    @RequestMapping("graphql")
    @Controller
    public class GraphQLController {

        @Autowired
        private GraphQL graphQL;

        @GetMapping
        @ResponseBody
        public Map<String, Object> graphql(@RequestParam("query") String query) {
            return this.graphQL.execute(query).toSpecification();
        }
    }

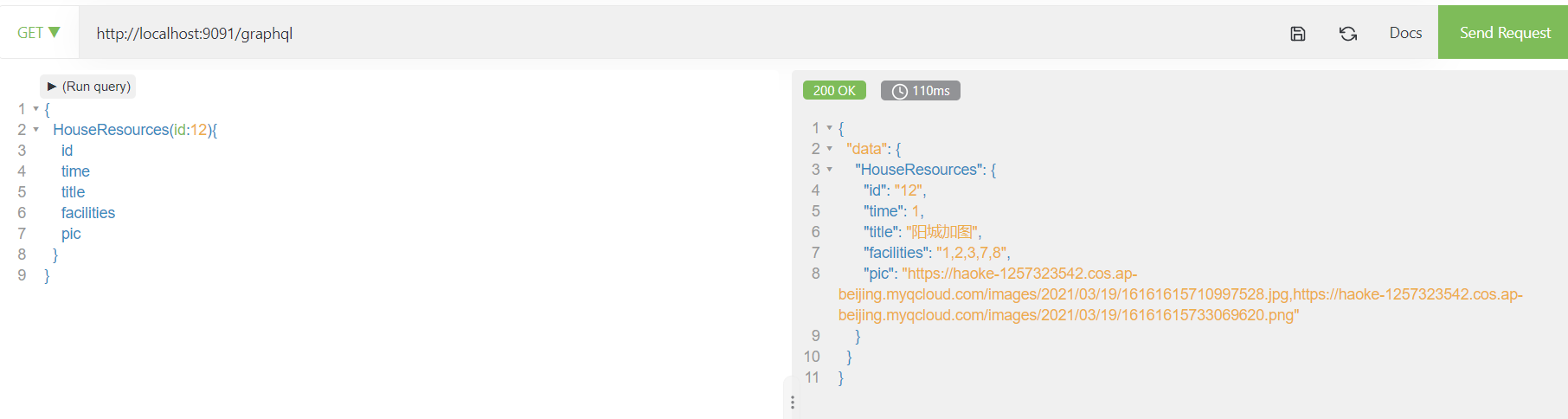

Test

Optimizing how the GraphQL component is assembled

Problem

Every time a query is added, the init method of GraphQLProvider has to be modified.

Improvement

- Write an interface.
- Every class that implements query logic implements this interface.
- GraphQLProvider processes queries through the implementations of this interface.
- From then on, adding a query only requires adding an implementation class.

1. Write the MyDataFetcher interface

    package com.haoke.api.graphql;

    import graphql.schema.DataFetchingEnvironment;

    public interface MyDataFetcher {

        // Name of the query field in the GraphQL schema that this fetcher serves
        String fieldName();

        // Fetch the data for that field
        Object dataFetcher(DataFetchingEnvironment environment);
    }

2. Implement MyDataFetcher

    @Component
    public class HouseResourcesDataFetcher implements MyDataFetcher {

        @Autowired
        HouseResourceService houseResourceService;

        @Override
        public String fieldName() {
            return "HouseResources";
        }

        @Override
        public Object dataFetcher(DataFetchingEnvironment environment) {
            Long id = Long.parseLong(environment.getArgument("id"));
            return this.houseResourceService.queryHouseResourcesById(id);
        }
    }

3. Modify GraphQLProvider

    // myDataFetchers is assumed to be the injected collection of all MyDataFetcher beans
    this.graphQL = GraphQL.newGraphQL(
            new SchemaGenerator().makeExecutableSchema(
                    new SchemaParser().parse(file),
                    RuntimeWiring.newRuntimeWiring()
                            .type("HaokeQuery", builder -> {
                                // Register every fetcher under its own field name
                                for (MyDataFetcher myDataFetcher : myDataFetchers) {
                                    builder.dataFetcher(
                                            myDataFetcher.fieldName(),
                                            environment -> myDataFetcher.dataFetcher(environment)
                                    );
                                }
                                return builder;
                            })
                            .build()
            )
    ).build();
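The registration loop above can be tried out without Spring or graphql-java. The following is a minimal sketch under stated assumptions: `SimpleFetcher` stands in for `MyDataFetcher`, a plain argument `Map` stands in for `DataFetchingEnvironment`, and the returned map stands in for the runtime wiring. All class and method names here are hypothetical, and the returned strings are placeholder data.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-alone version of the "one interface, many fetchers" idea.
class FetcherRegistrySketch {

    // Stand-in for MyDataFetcher; the argument map replaces DataFetchingEnvironment.
    interface SimpleFetcher {
        String fieldName();
        Object fetch(Map<String, Object> arguments);
    }

    static class HouseFetcher implements SimpleFetcher {
        public String fieldName() { return "HouseResources"; }
        public Object fetch(Map<String, Object> arguments) {
            return "house-" + arguments.get("id");
        }
    }

    static class AdFetcher implements SimpleFetcher {
        public String fieldName() { return "IndexAdList"; }
        public Object fetch(Map<String, Object> arguments) {
            return "ads";
        }
    }

    // Mirrors the loop in GraphQLProvider: each fetcher is registered under its field name,
    // so adding a query means adding a class, not editing this method.
    static Map<String, Function<Map<String, Object>, Object>> register(List<SimpleFetcher> fetchers) {
        Map<String, Function<Map<String, Object>, Object>> wiring = new LinkedHashMap<>();
        for (SimpleFetcher fetcher : fetchers) {
            wiring.put(fetcher.fieldName(), fetcher::fetch);
        }
        return wiring;
    }

    public static void main(String[] args) {
        Map<String, Function<Map<String, Object>, Object>> wiring =
                register(Arrays.asList(new HouseFetcher(), new AdFetcher()));
        Map<String, Object> arguments = Collections.<String, Object>singletonMap("id", 7L);
        System.out.println(wiring.get("HouseResources").apply(arguments));
        System.out.println(wiring.keySet());
    }
}
```

In the Spring version, the list passed to `register` would presumably come from injecting every `MyDataFetcher` bean, which is what lets new fetchers be picked up automatically.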

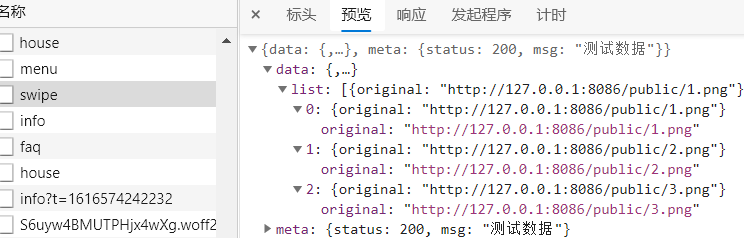

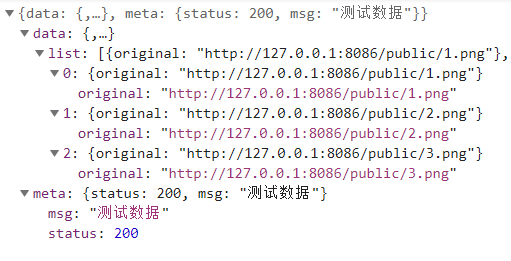

Listing API (GraphQL)

Home-page carousel ads

1. Data structure

Request URL:

Response:

So the API only needs to return the image links.

2. Table design

    use haoke;

    CREATE TABLE `tb_ad` (
      `id` bigint(20) NOT NULL AUTO_INCREMENT,
      `type` int(10) DEFAULT NULL COMMENT 'ad type',
      `title` varchar(100) DEFAULT NULL COMMENT 'description',
      `url` varchar(200) DEFAULT NULL COMMENT 'image URL',
      `created` datetime DEFAULT NULL,
      `updated` datetime DEFAULT NULL,
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='ad table';

    INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`) VALUES
      ('1', '1', 'UniCity万科天空之城', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/1.jpg', '2021-3-24 16:36:11', '2021-3-24 16:36:16');
    INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`) VALUES
      ('2', '1', '天和尚海庭前', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/2.jpg', '2021-3-24 16:36:43', '2021-3-24 16:36:37');
    INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`) VALUES
      ('3', '1', '[奉贤 南桥] 光语著', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/3.jpg', '2021-3-24 16:38:32', '2021-3-24 16:38:26');
    INSERT INTO `tb_ad` (`id`, `type`, `title`, `url`, `created`, `updated`) VALUES
      ('4', '1', '[上海周边 嘉兴] 融创海逸长洲', 'https://haoke-1257323542.cos.ap-beijing.myqcloud.com/ad-swipes/4.jpg', '2021-3-24 16:39:10', '2021-3-24 16:39:13');

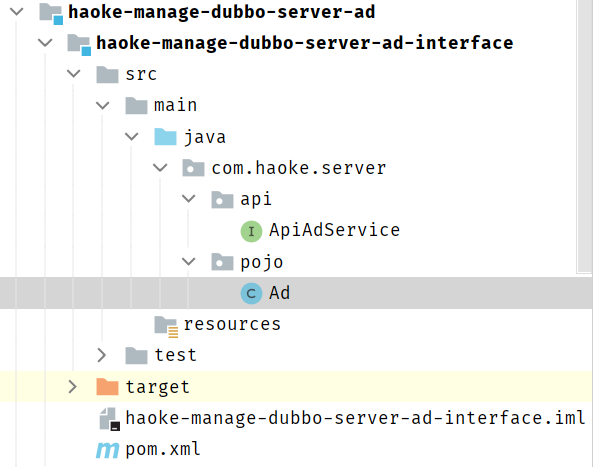

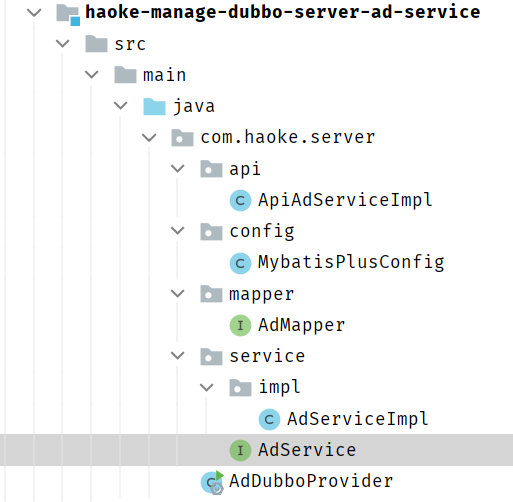

3. Implement the query interface

Dubbo service provider

1. Create the projects

    <dependencies>
        <dependency>
            <groupId>com.haoke.manage</groupId>
            <artifactId>haoke-manage-dubbo-server-common</artifactId>
            <version>1.0-SNAPSHOT</version>
        </dependency>
    </dependencies>

    <dependencies>
        <dependency>
            <groupId>com.haoke.manage</groupId>
            <artifactId>haoke-manage-dubbo-server-ad-interface</artifactId>
            <version>1.0-SNAPSHOT</version>
        </dependency>
    </dependencies>

2. application.properties

    # Spring Boot application
    spring.application.name = haoke-manage-dubbo-server-ad

    # Data source
    spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver
    spring.datasource.url=jdbc:mysql://8.140.130.91:3306/myhome\
      ?characterEncoding=utf8&useSSL=false&serverTimezone=UTC&autoReconnect=true&allowMultiQueries=true
    spring.datasource.username=root
    spring.datasource.password=root

    # Hikari connection pool
    spring.datasource.hikari.maximum-pool-size=60
    spring.datasource.hikari.idle-timeout=60000
    spring.datasource.hikari.connection-timeout=60000
    spring.datasource.hikari.validation-timeout=3000
    spring.datasource.hikari.login-timeout=5
    spring.datasource.hikari.max-lifetime=60000

    # Dubbo provider
    dubbo.scan.basePackages = com.haoke.server.api
    dubbo.application.name = dubbo-provider-ad
    dubbo.service.version = 1.0.0
    dubbo.protocol.name = dubbo
    dubbo.protocol.port = 21880
    dubbo.registry.address = zookeeper://8.140.130.91:2181
    dubbo.registry.client = zkclient

3. DAO layer

POJO

    @Data
    @TableName("tb_ad")
    public class Ad extends BasePojo {

        private static final long serialVersionUID = -493439243433085768L;

        @TableId(value = "id", type = IdType.AUTO)
        private Long id;

        // Ad type
        private Integer type;

        // Description
        private String title;

        // Image URL
        private String url;
    }

    package com.haoke.server.api;

    import com.haoke.server.pojo.Ad;
    import com.haoke.server.vo.PageInfo;

    public interface ApiAdService {

        // Page through the ads of the given type
        PageInfo<Ad> queryAdList(Integer type, Integer page, Integer pageSize);
    }

AdMapper

    package com.haoke.server.mapper;

    import com.baomidou.mybatisplus.core.mapper.BaseMapper;
    import com.haoke.server.pojo.Ad;

    public interface AdMapper extends BaseMapper<Ad> {}

MybatisPlusConfig: pagination configuration

    package com.haoke.server.config;

    import com.baomidou.mybatisplus.annotation.DbType;
    import com.baomidou.mybatisplus.extension.plugins.MybatisPlusInterceptor;
    import com.baomidou.mybatisplus.extension.plugins.inner.PaginationInnerInterceptor;
    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @MapperScan("com.haoke.server.mapper")
    @Configuration
    public class MybatisPlusConfig {

        // Register the MyBatis-Plus pagination interceptor for MySQL
        @Bean
        public MybatisPlusInterceptor mybatisPlusInterceptor() {
            MybatisPlusInterceptor interceptor = new MybatisPlusInterceptor();
            PaginationInnerInterceptor paginationInnerInterceptor = new PaginationInnerInterceptor();
            paginationInnerInterceptor.setDbType(DbType.MYSQL);
            interceptor.addInnerInterceptor(paginationInnerInterceptor);
            return interceptor;
        }
    }

4. Service layer: business logic

Write the interface:

    package com.haoke.server.service;

    import com.haoke.server.pojo.Ad;
    import com.haoke.server.vo.PageInfo;

    public interface AdService {
        PageInfo<Ad> queryAdList(Ad ad, Integer page, Integer pageSize);
    }

Implement the interface:

    package com.haoke.server.service.impl;

    import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;
    import com.baomidou.mybatisplus.core.metadata.IPage;
    import com.haoke.server.pojo.Ad;
    import com.haoke.server.service.AdService;
    import com.haoke.server.service.BaseServiceImpl;
    import com.haoke.server.vo.PageInfo;
    import org.springframework.stereotype.Service;

    @Service
    public class AdServiceImpl extends BaseServiceImpl implements AdService {

        @Override
        public PageInfo<Ad> queryAdList(Ad ad, Integer page, Integer pageSize) {
            QueryWrapper queryWrapper = new QueryWrapper();
            // Newest first, filtered by ad type
            queryWrapper.orderByDesc("updated");
            queryWrapper.eq("type", ad.getType());

            IPage iPage = super.queryPageList(queryWrapper, page, pageSize);
            return new PageInfo<>(Long.valueOf(iPage.getTotal()).intValue(), page, pageSize, iPage.getRecords());
        }
    }

5. Dubbo service implementation

    package com.haoke.server.api;

    import com.haoke.server.pojo.Ad;
    import com.haoke.server.vo.PageInfo;

    public interface ApiAdService {

        // Page through the ads of the given type
        PageInfo<Ad> queryAdList(Integer type, Integer page, Integer pageSize);
    }

    package com.haoke.server.api;

    import com.alibaba.dubbo.config.annotation.Service;
    import com.haoke.server.pojo.Ad;
    import com.haoke.server.service.AdService;
    import com.haoke.server.vo.PageInfo;
    import org.springframework.beans.factory.annotation.Autowired;

    @Service(version = "${dubbo.service.version}")
    public class ApiAdServiceImpl implements ApiAdService {

        @Autowired
        private AdService adService;

        @Override
        public PageInfo<Ad> queryAdList(Integer type, Integer page, Integer pageSize) {
            Ad ad = new Ad();
            ad.setType(type);
            return this.adService.queryAdList(ad, page, pageSize);
        }
    }

6. Write the startup class

    package com.haoke.server;

    import org.springframework.boot.WebApplicationType;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.boot.builder.SpringApplicationBuilder;

    @SpringBootApplication
    public class AdDubboProvider {

        public static void main(String[] args) {
            // The provider is not a web application
            new SpringApplicationBuilder(AdDubboProvider.class)
                    .web(WebApplicationType.NONE)
                    .run(args);
        }
    }

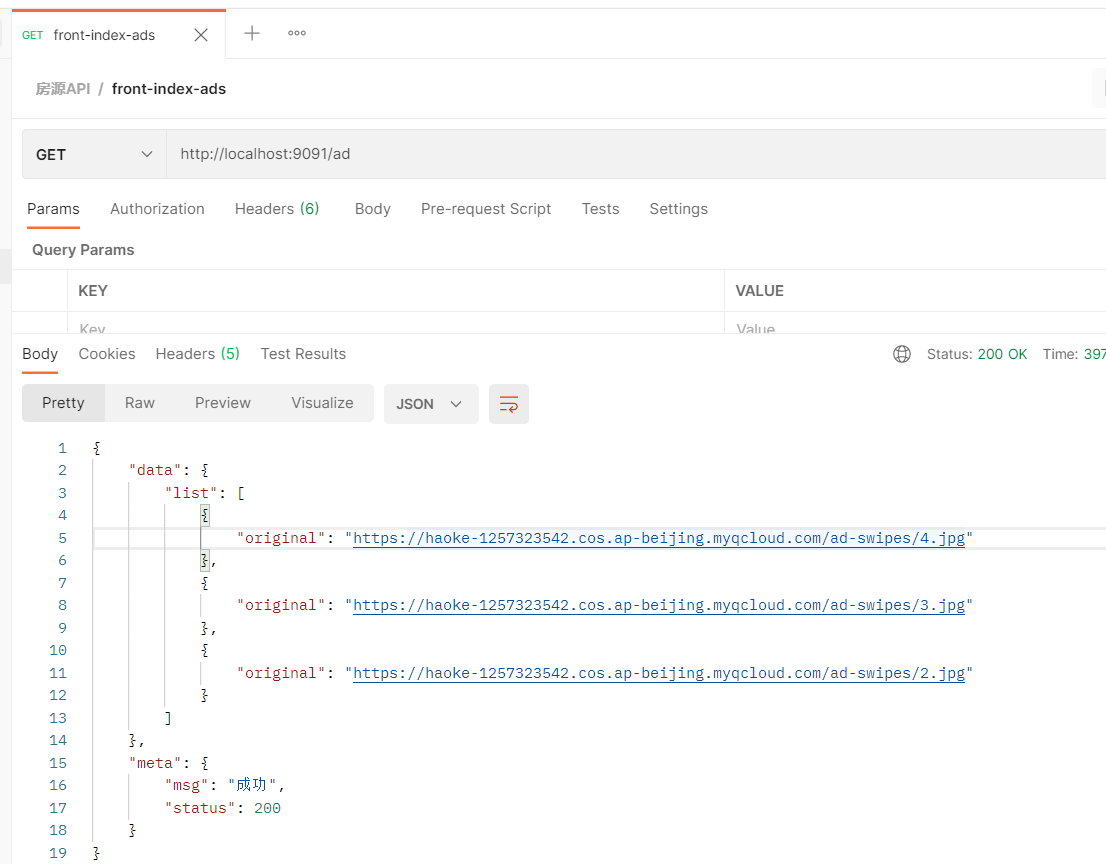

4. API implementation (Dubbo consumer)

1. Import the dependency

    <dependency>
        <groupId>com.haoke.manage</groupId>
        <artifactId>haoke-manage-dubbo-server-ad-interface</artifactId>
        <version>1.0-SNAPSHOT</version>
    </dependency>

2. Write WebResult

    package com.haoke.api.vo;

    import com.fasterxml.jackson.annotation.JsonIgnore;
    import lombok.AllArgsConstructor;
    import lombok.Data;

    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    @Data
    @AllArgsConstructor
    public class WebResult {

        @JsonIgnore
        private int status;
        @JsonIgnore
        private String msg;
        @JsonIgnore
        private List<?> list;

        @JsonIgnore
        public static WebResult ok(List<?> list) {
            return new WebResult(200, "success", list);
        }

        @JsonIgnore
        public static WebResult ok(List<?> list, String msg) {
            return new WebResult(200, msg, list);
        }

        // Serialized as the "data" member of the response
        public Map<String, Object> getData() {
            HashMap<String, Object> data = new HashMap<String, Object>();
            data.put("list", this.list);
            return data;
        }

        // Serialized as the "meta" member of the response
        public Map<String, Object> getMeta() {
            HashMap<String, Object> meta = new HashMap<String, Object>();
            meta.put("msg", this.msg);
            meta.put("status", this.status);
            return meta;
        }
    }

3. Write the Service

    package com.haoke.api.service;

    import com.alibaba.dubbo.config.annotation.Reference;
    import com.haoke.server.api.ApiAdService;
    import com.haoke.server.pojo.Ad;
    import com.haoke.server.vo.PageInfo;
    import org.springframework.stereotype.Service;

    @Service
    public class AdService {

        @Reference(version = "1.0.0")
        private ApiAdService apiAdService;

        public PageInfo<Ad> queryAdList(Integer type, Integer page, Integer pageSize) {
            return this.apiAdService.queryAdList(type, page, pageSize);
        }
    }

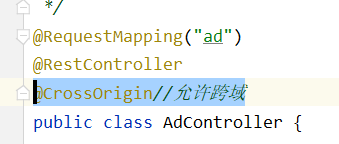

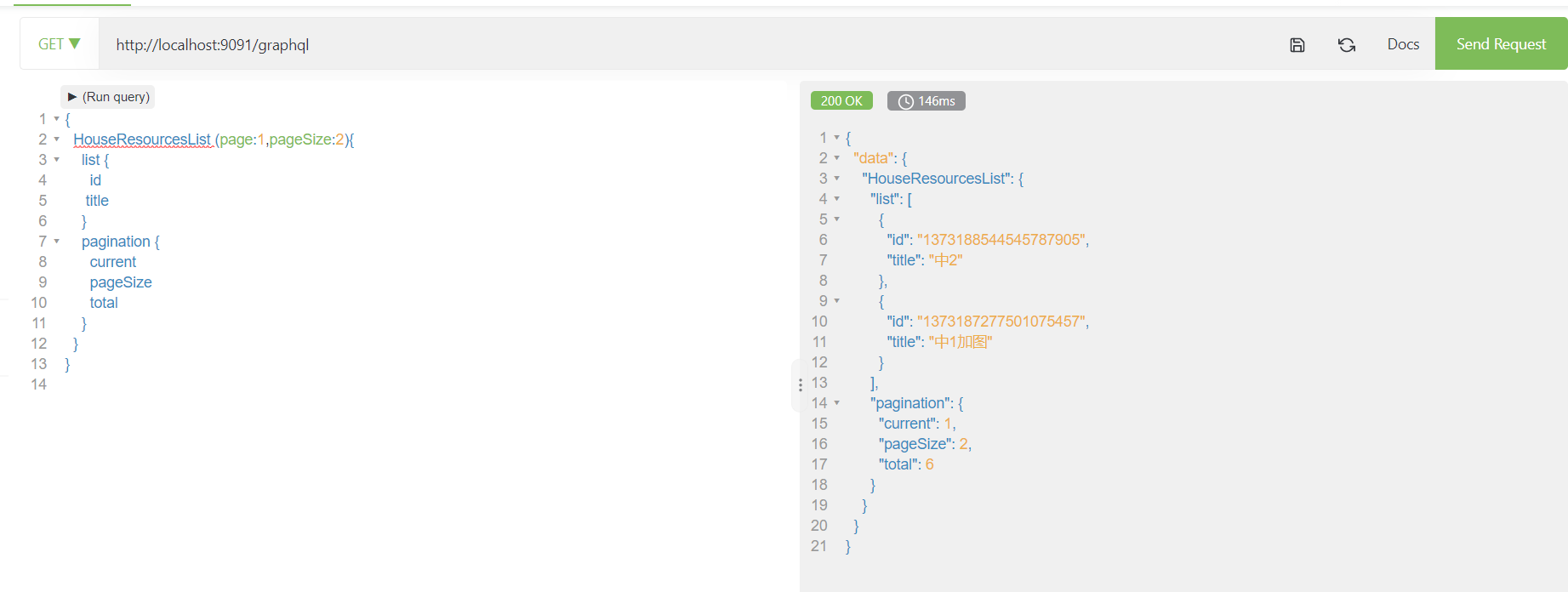

4. Controller

    package com.haoke.api.controller;

    import com.haoke.api.service.AdService;
    import com.haoke.api.vo.WebResult;
    import com.haoke.server.pojo.Ad;
    import com.haoke.server.vo.PageInfo;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.CrossOrigin;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    import java.util.ArrayList;
    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    @RequestMapping("ad")
    @RestController
    @CrossOrigin
    public class AdController {

        @Autowired
        private AdService adService;

        // Home-page ads: first page, three ads of type 1
        @GetMapping
        public WebResult queryIndexad() {
            PageInfo<Ad> pageInfo = this.adService.queryAdList(1, 1, 3);

            List<Ad> ads = pageInfo.getRecords();
            List<Map<String, Object>> data = new ArrayList<>();
            for (Ad ad : ads) {
                // The front end only needs the image link
                Map<String, Object> map = new HashMap<>();
                map.put("original", ad.getUrl());
                data.add(map);
            }

            return WebResult.ok(data);
        }
    }

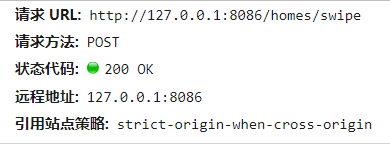

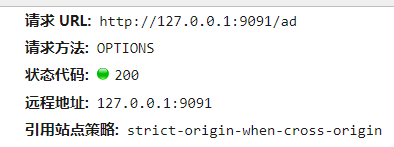

Test

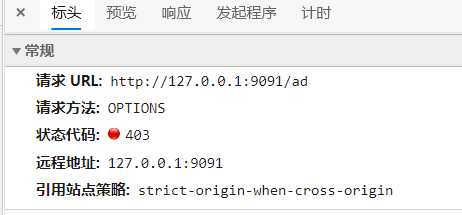

5. Integrate the front-end system

Change the request URL in home.js:

    let swipe = new Promise((resolve, reject) => {
      axios.get('http://127.0.0.1:9091/ad').then((data) => {
        resolve(data.data.list);
      });
    })

Cross-origin issue:

6. GraphQL interface for the ads

1. Target data structure

    {
      "list": [
        {
          "original": "http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/26/15432030275359146.jpg"
        },
        {
          "original": "http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/26/15432029946721854.jpg"
        },
        {
          "original": "http://itcast-haoke.oss-cnqingdao.aliyuncs.com/images/2018/11/26/1543202958579877.jpg"
        }
      ]
    }

2. GraphQL definitions

    type HaokeQuery {
      # paged listing query
      HouseResourcesList(page: Int, pageSize: Int): TableResult
      # query a listing by id
      HouseResources(id: ID): HouseResources
      # home-page ads
      IndexAdList: IndexAdResult
    }

    type IndexAdResult {
      list: [IndexAdResultData]
    }

    type IndexAdResultData {
      original: String
    }

3. Write the VOs that match the GraphQL structure

    package com.haoke.api.vo.ad.index;

    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;

    import java.util.List;

    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public class IndexAdResult {
        private List<IndexAdResultData> list;
    }

    package com.haoke.api.vo.ad.index;

    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;

    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public class IndexAdResultData {
        private String original;
    }

4. IndexAdDataFetcher

    package com.haoke.api.graphql.myDataFetcherImpl;

    import com.haoke.api.graphql.MyDataFetcher;
    import com.haoke.api.service.AdService;
    import com.haoke.api.vo.ad.index.IndexAdResult;
    import com.haoke.api.vo.ad.index.IndexAdResultData;
    import com.haoke.server.pojo.Ad;
    import com.haoke.server.vo.PageInfo;
    import graphql.schema.DataFetchingEnvironment;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Component;

    import java.util.ArrayList;
    import java.util.List;

    @Component
    public class IndexAdDataFetcher implements MyDataFetcher {

        @Autowired
        private AdService adService;

        @Override
        public String fieldName() {
            return "IndexAdList";
        }

        @Override
        public Object dataFetcher(DataFetchingEnvironment environment) {
            // First page, three ads of type 1
            PageInfo<Ad> pageInfo = this.adService.queryAdList(1, 1, 3);

            List<Ad> ads = pageInfo.getRecords();
            List<IndexAdResultData> list = new ArrayList<>();
            for (Ad ad : ads) {
                list.add(new IndexAdResultData(ad.getUrl()));
            }

            return new IndexAdResult(list);
        }
    }

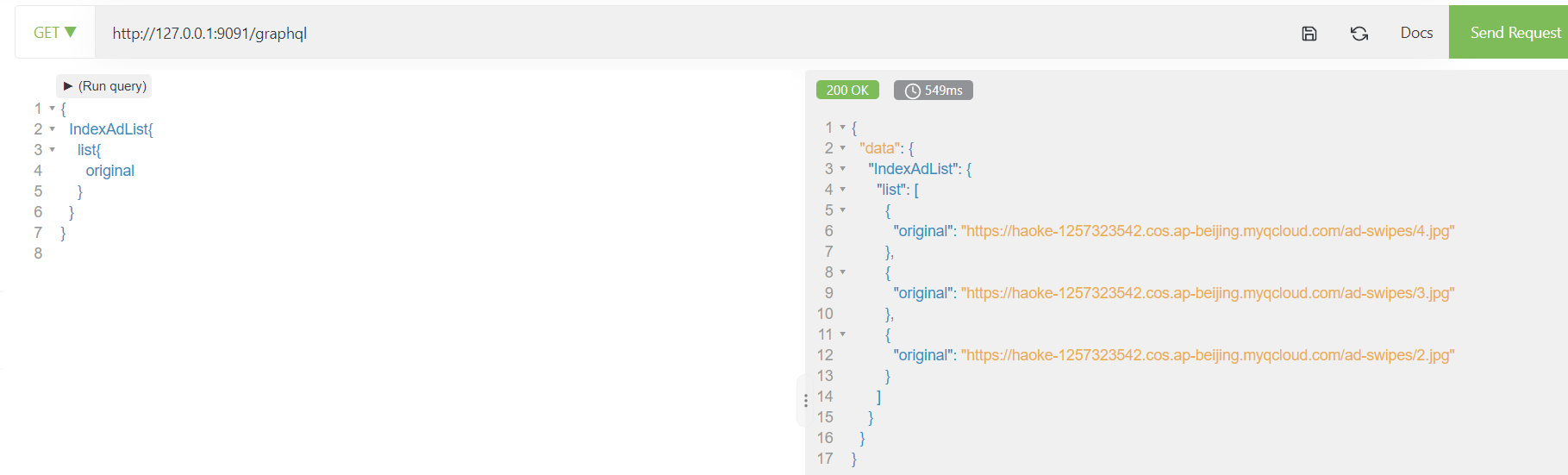

5. Test

    {
      IndexAdList {
        list {
          original
        }
      }
    }

7. GraphQL client

Reference: https://www.apollographql.com/docs/react/get-started/

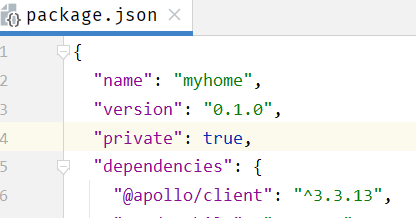

1. Install the dependencies

    npm install @apollo/client graphql

2. Create the client

    import { ApolloClient, InMemoryCache, gql } from '@apollo/client';

    const client = new ApolloClient({
      uri: 'http://127.0.0.1:9091/graphql',
      // Apollo Client requires a cache to be configured
      cache: new InMemoryCache(),
    });

3. Define the query

    const GET_INDEX_ADS = gql`
      {
        IndexAdList {
          list {
            original
          }
        }
      }
    `;

    let swipe = new Promise((resolve, reject) => {
      client.query({query: GET_INDEX_ADS}).then(result =>
        resolve(result.data.IndexAdList.list));
    })

4. Test

Two problems:

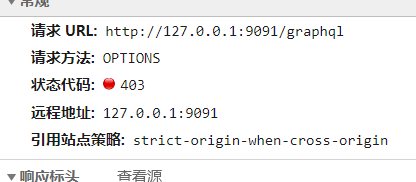

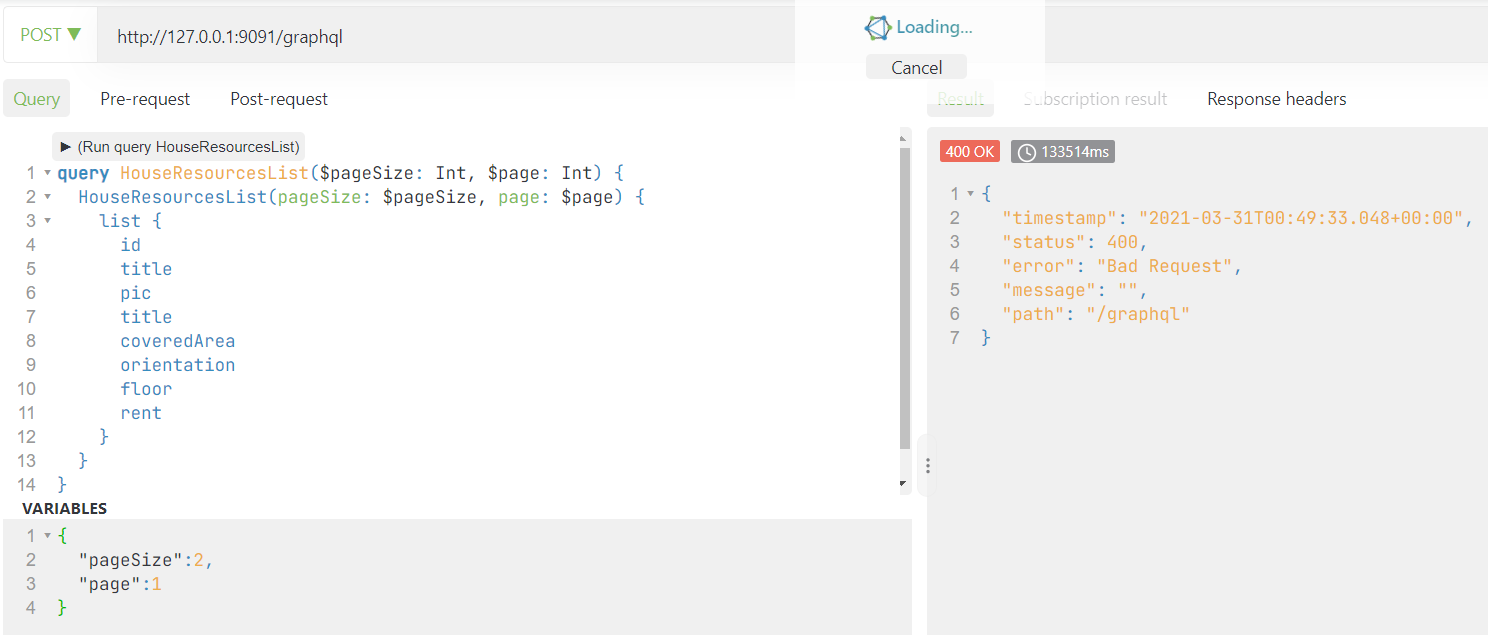

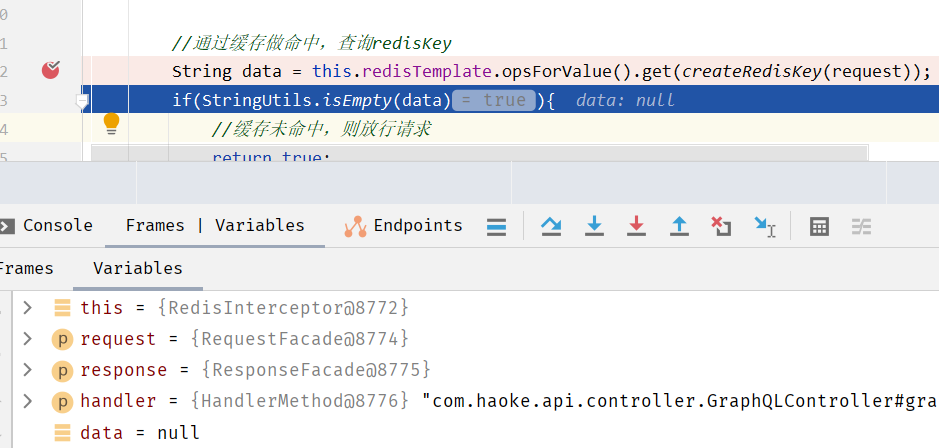

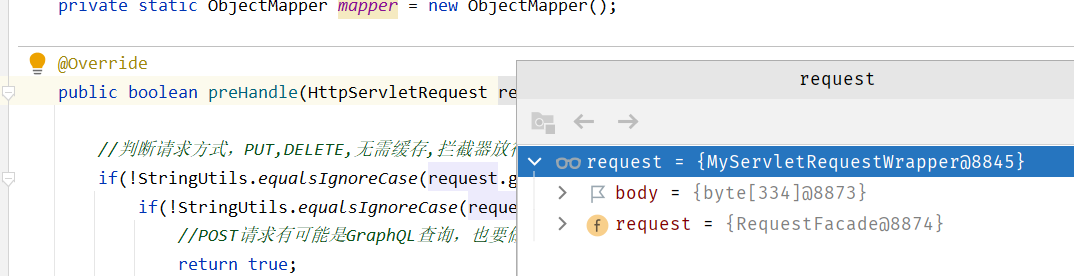

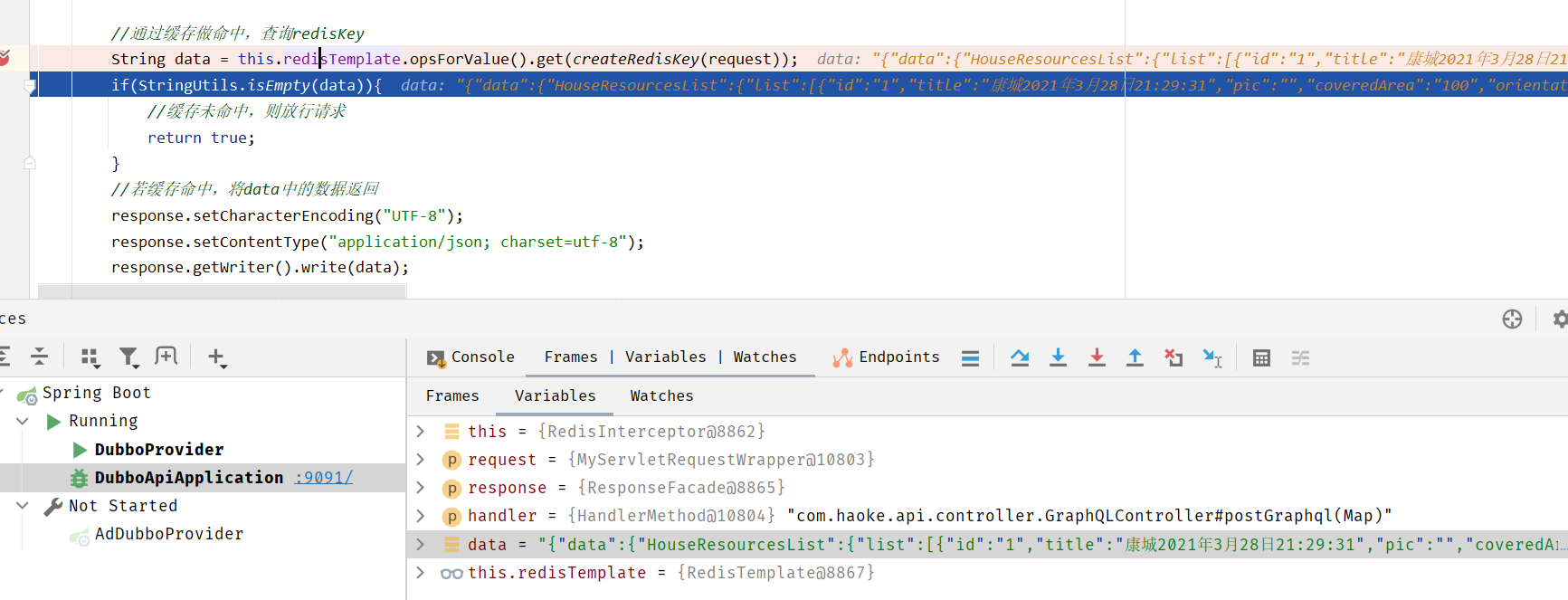

- The GraphQL service does not support cross-origin requests (CORS). Fix: annotate the Controller with @CrossOrigin.
- Apollo Client sends its data requests as POST, while the GraphQL endpoint implemented so far only handles GET.

    package com.haoke.api.controller;

    import com.fasterxml.jackson.databind.JsonNode;
    import com.fasterxml.jackson.databind.ObjectMapper;
    import graphql.GraphQL;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Controller;
    import org.springframework.web.bind.annotation.*;

    import java.io.IOException;
    import java.util.HashMap;
    import java.util.Map;

    @RequestMapping("graphql")
    @Controller
    @CrossOrigin
    public class GraphQLController {

        @Autowired
        private GraphQL graphQL;

        private static final ObjectMapper MAPPER = new ObjectMapper();

        @GetMapping
        @ResponseBody
        public Map<String, Object> graphql(@RequestParam("query") String query) {
            return this.graphQL.execute(query).toSpecification();
        }

        @PostMapping
        @ResponseBody
        public Map<String, Object> postGraphql(@RequestBody String json) throws IOException {
            try {
                // The POST body is JSON; the query string sits under "query"
                JsonNode jsonNode = MAPPER.readTree(json);
                if (jsonNode.has("query")) {
                    String query = jsonNode.get("query").asText();
                    return this.graphQL.execute(query).toSpecification();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }

            Map<String, Object> error = new HashMap<>();
            error.put("status", 500);
            error.put("msg", "query failed");
            return error;
        }
    }

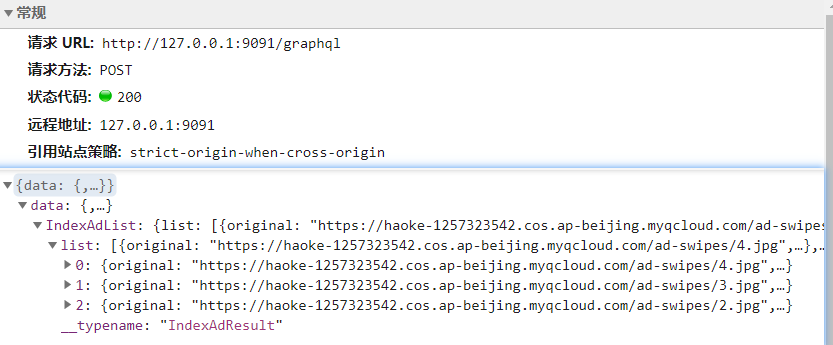

Listing list

1. Query definitions: haoke.graphql

    schema {
      query: HaokeQuery
    }

    type HaokeQuery {
      # paged listing query, used by the front-end listing page
      HouseResourcesList(page: Int, pageSize: Int): TableResult
      # query a listing by id
      HouseResources(id: ID): HouseResources
      # home-page ad pictures, used by the front-end home page
      IndexAdList: IndexAdResult
    }

    type HouseResources {
      id: ID!
      title: String
      estateId: ID
      buildingNum: String
      buildingUnit: String
      buildingFloorNum: String
      rent: Int
      rentMethod: Int
      paymentMethod: Int
      houseType: String
      coveredArea: String
      useArea: String
      floor: String
      orientation: String
      decoration: Int
      facilities: String
      pic: String
      houseDesc: String
      contact: String
      mobile: String
      time: Int
      propertyCost: String
    }

    type TableResult {
      list: [HouseResources]
      pagination: Pagination
    }

    type Pagination {
      current: Int
      pageSize: Int
      total: Int
    }

2. DataFetcher: HouseResourcesListDataFetcher

    @Component
    public class HouseResourcesListDataFetcher implements MyDataFetcher {

        @Autowired
        HouseResourceService houseResourceService;

        @Override
        public String fieldName() {
            return "HouseResourcesList";
        }

        @Override
        public Object dataFetcher(DataFetchingEnvironment environment) {
            // Default to page 1 with 5 items when the arguments are omitted
            Integer page = environment.getArgument("page");
            if (page == null) {
                page = 1;
            }

            Integer pageSize = environment.getArgument("pageSize");
            if (pageSize == null) {
                pageSize = 5;
            }
            return this.houseResourceService.queryList(null, page, pageSize);
        }
    }

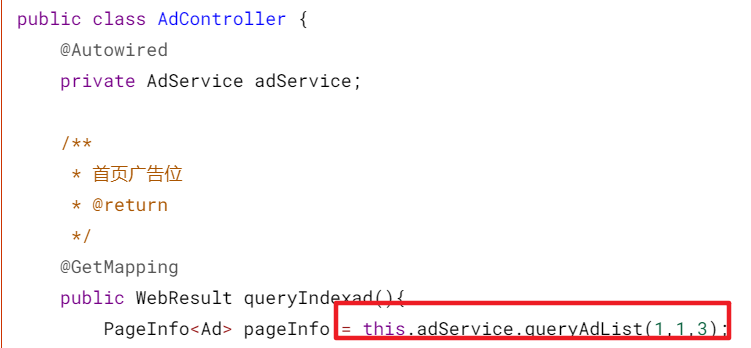

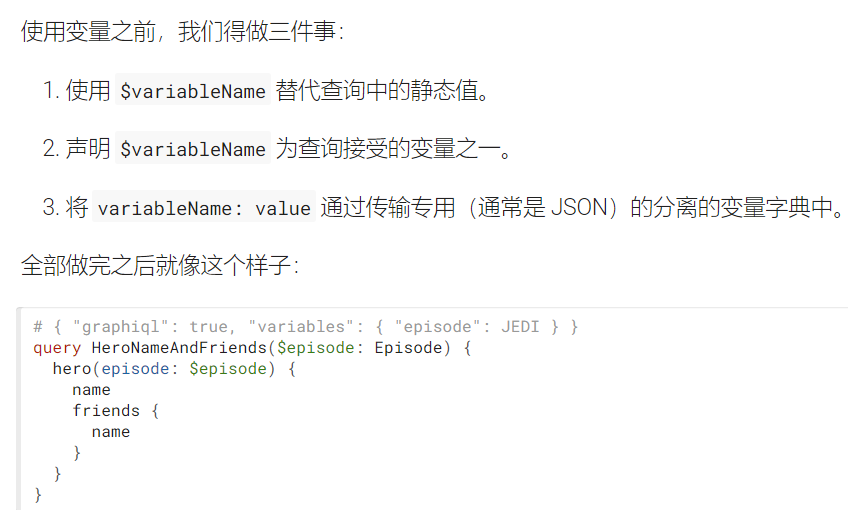

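3. GraphQL parameters

Problem analysis: in the homepage carousel ad query interface above, the parameters are hard-coded.

In a real application, the query must take its parameters from the front end's request.

https://graphql.cn/learn/queries/#variables

One approach is to splice the parameter values directly into the query text, which is sent in the request body (POST) or the URL (GET). The drawback is that anyone can edit the query string to fetch whatever data they like.

GraphQL has a first-class way to factor dynamic values out of the query and pass them in as a separate dictionary. These dynamic values are called variables.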
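To make the contrast concrete, here is a minimal plain-Java sketch (not from the project) of the two ways to send an id: splicing it into the query text, or keeping the query text constant and sending the value in a separate variables map. `VariablesDemo`, `splicedQuery` and `requestWithVariables` are hypothetical names; the payload keys `query` and `variables` follow the usual GraphQL-over-HTTP convention.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class VariablesDemo {
    // The query text never changes; only the variables differ between requests.
    static final String QUERY =
            "query hk($id: ID) { HouseResources(id: $id) { id title } }";

    // Approach 1: the value becomes part of the query text, so caller input
    // is parsed as GraphQL and can change the shape of the query.
    static String splicedQuery(String id) {
        return "{ HouseResources(id: \"" + id + "\") { id title } }";
    }

    // Approach 2: the value travels next to the query as plain data.
    static Map<String, Object> requestWithVariables(String id) {
        Map<String, Object> variables = new LinkedHashMap<>();
        variables.put("id", id);

        Map<String, Object> payload = new LinkedHashMap<>();
        payload.put("query", QUERY);
        payload.put("variables", variables);
        return payload;
    }

    public static void main(String[] args) {
        // A crafted "id" rewrites the spliced query so it also selects mobile...
        System.out.println(splicedQuery("1\") { mobile } x: HouseResources(id: \"2"));
        // ...whereas a variable is only ever treated as a value.
        System.out.println(requestWithVariables("1"));
    }
}
```

With variables the server always parses the same query text, which also makes it possible to validate or cache that text independently of the values.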

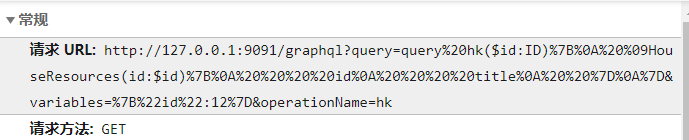

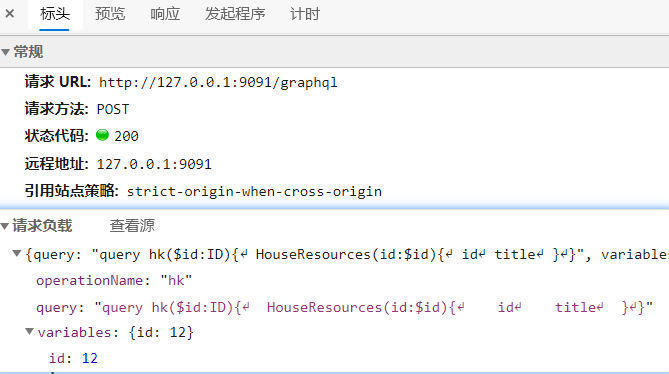

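Analysis of the parameters sent by the front end

    query hk($id: ID) {
        HouseResources(id: $id) {
            id
            title
        }
    }

The front end sends the GraphQL data shown above; the back end has to process the request and return the matching data.

4. Handling the parameters on the back end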

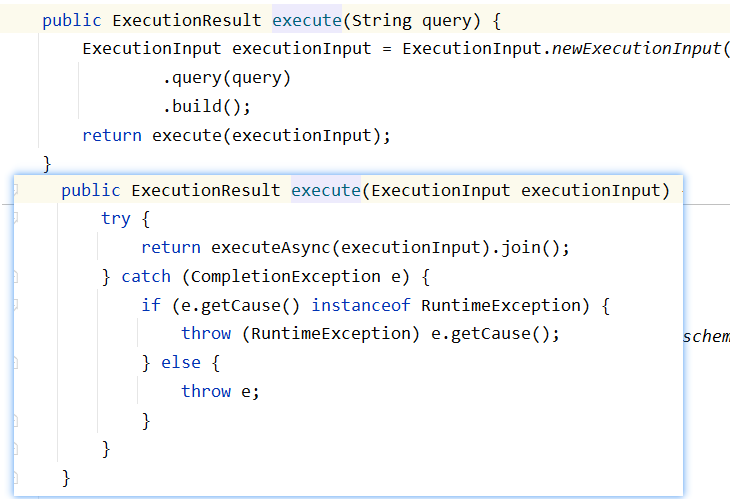

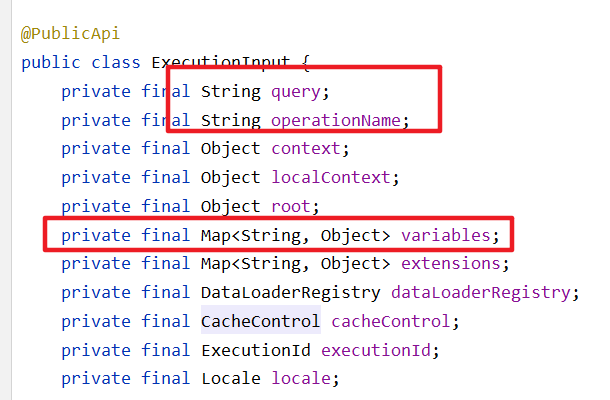

The GraphQL call flow shows that the GraphQL string passed to the back end is eventually turned into an ExecutionInput object.

GraphQLController

    package com.haoke.api.controller;

    import com.fasterxml.jackson.core.JsonProcessingException;
    import com.fasterxml.jackson.databind.ObjectMapper;
    import graphql.ExecutionInput;
    import graphql.GraphQL;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.stereotype.Controller;
    import org.springframework.web.bind.annotation.*;

    import java.io.IOException;
    import java.util.Collections;
    import java.util.HashMap;
    import java.util.Map;

    @RequestMapping("graphql")
    @Controller
    @CrossOrigin
    public class GraphQLController {

        @Autowired
        private GraphQL graphQL;

        private static final ObjectMapper MAPPER = new ObjectMapper();

        @GetMapping
        @ResponseBody
        public Map<String, Object> graphql(@RequestParam("query") String query,
                                           @RequestParam(value = "variables", required = false) String variablesJSON,
                                           @RequestParam(value = "operationName", required = false) String operationName) {
            try {
                // variables is optional: use an empty map instead of parsing null
                Map<String, Object> variables = Collections.emptyMap();
                if (variablesJSON != null && !variablesJSON.isEmpty()) {
                    variables = MAPPER.readValue(variablesJSON,
                            MAPPER.getTypeFactory().constructMapType(HashMap.class, String.class, Object.class));
                }
                return this.executeGraphQLQuery(query, operationName, variables);
            } catch (JsonProcessingException e) {
                e.printStackTrace();
            }

            Map<String, Object> error = new HashMap<>();
            error.put("status", 500);
            error.put("msg", "查询出错"); // message text: "query failed"
            return error;
        }

        @PostMapping
        @ResponseBody
        @SuppressWarnings("unchecked")
        public Map<String, Object> postGraphql(@RequestBody Map<String, Object> map) throws IOException {
            try {
                String query = (String) map.get("query");
                if (null == query) {
                    query = "";
                }
                String operationName = (String) map.get("operationName");
                if (null == operationName) {
                    operationName = "";
                }
                Map<String, Object> variables = (Map<String, Object>) map.get("variables");
                if (variables == null) {
                    variables = Collections.emptyMap();
                }
                return this.executeGraphQLQuery(query, operationName, variables);
            } catch (Exception e) {
                e.printStackTrace();
            }

            Map<String, Object> error = new HashMap<>();
            error.put("status", 500);
            error.put("msg", "查询出错"); // message text: "query failed"
            return error;
        }

        // Shared by GET and POST: wrap the three request fields in an ExecutionInput
        private Map<String, Object> executeGraphQLQuery(String query, String operationName, Map<String, Object> variables) {
            return this.graphQL.execute(
                    ExecutionInput.newExecutionInput()
                            .query(query)
                            .variables(variables)
                            .operationName(operationName)
                            .build()
            ).toSpecification();
        }
    }
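The field extraction in `postGraphql` relies on casts, which throw if a client sends, for example, a string where `variables` should be an object. As a minimal sketch under stated assumptions, the same defaults can be applied with type checks instead. `GraphQLRequest` and `fromBody` are hypothetical names that do not exist in the project, and the code uses only the JDK so it can run without Spring or graphql-java.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical value class for the three fields of a GraphQL HTTP request body.
final class GraphQLRequest {
    final String query;
    final String operationName;
    final Map<String, Object> variables;

    private GraphQLRequest(String query, String operationName, Map<String, Object> variables) {
        this.query = query;
        this.operationName = operationName;
        this.variables = variables;
    }

    // Applies the same defaults as postGraphql (empty string, empty map),
    // but checks types with instanceof instead of casting blindly.
    static GraphQLRequest fromBody(Map<String, Object> body) {
        Object query = body.get("query");
        Object operationName = body.get("operationName");
        Object rawVariables = body.get("variables");

        Map<String, Object> variables = new HashMap<>();
        if (rawVariables instanceof Map) {
            for (Map.Entry<?, ?> entry : ((Map<?, ?>) rawVariables).entrySet()) {
                variables.put(String.valueOf(entry.getKey()), entry.getValue());
            }
        }
        return new GraphQLRequest(
                query instanceof String ? (String) query : "",
                operationName instanceof String ? (String) operationName : "",
                Collections.unmodifiableMap(variables));
    }

    public static void main(String[] args) {
        Map<String, Object> variables = new HashMap<>();
        variables.put("id", "1");

        Map<String, Object> body = new HashMap<>();
        body.put("query", "query hk($id: ID) { HouseResources(id: $id) { id title } }");
        body.put("variables", variables);

        GraphQLRequest request = fromBody(body);
        System.out.println(request.query);
        System.out.println(request.variables);
    }
}
```

In the controller, the three fields of such an object would then be passed to `executeGraphQLQuery` unchanged.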

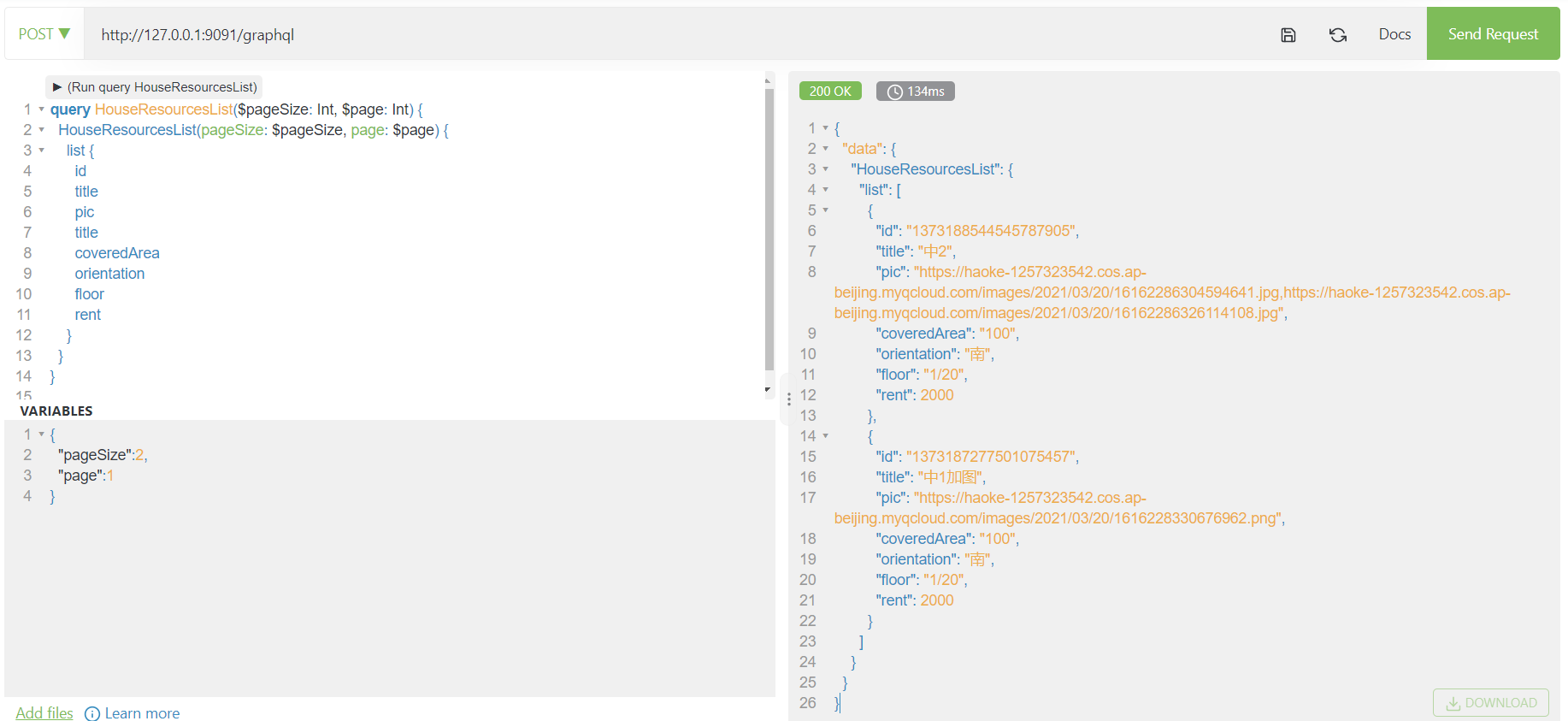

5. Query string

    query HouseResourcesList($pageSize: Int, $page: Int) {
        HouseResourcesList(pageSize: $pageSize, page: $page) {
            list {
                id
                title
                pic
                coveredArea
                orientation
                floor
                rent
            }
        }
    }

Variables:

    {
        "pageSize": 2,
        "page": 1
    }

6. Reworking the list.js page

    import React from 'react';
    import { withRouter } from 'react-router';
    import { Icon, Item } from 'semantic-ui-react';
    import config from '../../common.js';
    import { ApolloClient, gql, InMemoryCache } from '@apollo/client';

    const client = new ApolloClient({
      uri: 'http://127.0.0.1:9091/graphql',
      cache: new InMemoryCache()
    });

    // The query text is constant; pageSize and page are passed as variables
    const QUERY_LIST = gql`
      query HouseResourcesList($pageSize: Int, $page: Int) {
        HouseResourcesList(pageSize: $pageSize, page: $page) {
          list {
            id
            title
            pic
            coveredArea
            orientation
            floor
            rent
          }
        }
      }
    `;

    class HouseList extends React.Component {
      constructor(props) {
        super(props);
        this.state = {
          listData: [],
          typeName: '',
          type: null,
          loadFlag: false
        };
      }

      goBack = () => {
        console.log(this.props.history)
        this.props.history.goBack();
      }

      componentDidMount = () => {
        const { query } = this.props.location.state;
        this.setState({
          typeName: query.name,
          type: query.type
        })
        // Fetch the listing data through GraphQL
        client.query({ query: QUERY_LIST, variables: { "pageSize": 2, "page": 1 } }).then(result => {
          console.log(result)
          this.setState({
            listData: result.data.HouseResourcesList.list,
            loadFlag: true
          })
        })
      }

      render() {
        let list = null;
        if (this.state.loadFlag) {
          list = this.state.listData.map(item => {
            return (
              <Item key={item.id}>
                {/* pic is a comma-separated list of URLs; show the first one */}
                <Item.Image src={item.pic.split(',')[0]} />
                <Item.Content>
                  <Item.Header>{item.title}</Item.Header>
                  <Item.Meta>
                    <span className='cinema'>{item.coveredArea} ㎡/{item.orientation}/{item.floor}</span>
                  </Item.Meta>
                  <Item.Description>
                    上海
                  </Item.Description>
                  <Item.Description>{item.rent}</Item.Description>
                </Item.Content>
              </Item>
            )
          });
        }
        return (
          <div className='house-list'>
            <div className="house-list-title">
              <Icon onClick={this.goBack} name='angle left' size='large' />{this.state.typeName}
            </div>
            <div className="house-list-content">
              <Item.Group divided unstackable>
                {list}
              </Item.Group>
            </div>
          </div>
        );
      }
    }

    export default withRouter(HouseList);

Updating house listing data

1. Controller

haoke-manage-api-server

    @PutMapping
    @ResponseBody
    public ResponseEntity<Void> update(@RequestBody HouseResources houseResources) {
        try {
            boolean bool = this.houseResourceService.update(houseResources);
            if (bool) {
                // Updated successfully: 204 No Content
                return ResponseEntity.status(HttpStatus.NO_CONTENT).build();
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        // Update failed or threw: 500
        return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).build();
    }

2. Service

haoke-manage-api-server

    public boolean update(HouseResources houseResources) {
        return this.apiHouseResourcesService.updateHouseResources(houseResources);
    }

3. Modifying the Dubbo service

haoke-manage-dubbo-server-house-resources-interface

ApiHouseResourcesService

    boolean updateHouseResources(HouseResources houseResources);

Implementation class ApiHouseResourcesServiceImpl

    @Override
    public boolean updateHouseResources(HouseResources houseResources) {
        return this.houseResourcesService.updateHouseResources(houseResources);
    }

Modify the business Service: HouseResourcesServiceImpl

    @Override
    public boolean updateHouseResources(HouseResources houseResources) {
        // update returns the number of affected rows; exactly one means success
        return super.update(houseResources) == 1;
    }

BaseServiceImpl

    public Integer update(T record) {
        // Stamp the modification time before writing
        record.setUpdated(new Date());
        return this.mapper.updateById(record);
    }
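The update passes through several layers (Controller, API service, Dubbo service, business service, mapper), but the result handed back is just a boolean derived from the affected-row count. The following minimal plain-Java sketch illustrates that contract. It is not the project's code: `UpdateChainSketch`, `HouseRecord`, `InMemoryMapper`, `HouseService` and `statusFor` are hypothetical stand-ins, and the in-memory map only assumes that `updateById` returns the number of rows changed, as the `== 1` check in the source implies.

```java
import java.util.Date;
import java.util.HashMap;
import java.util.Map;

class UpdateChainSketch {

    // Hypothetical stand-in for the HouseResources entity.
    static class HouseRecord {
        Long id;
        String title;
        Date updated;

        HouseRecord(Long id, String title) {
            this.id = id;
            this.title = title;
        }
    }

    // Hypothetical in-memory mapper; updateById returns the number of rows changed.
    static class InMemoryMapper {
        private final Map<Long, HouseRecord> rows = new HashMap<>();

        void insert(HouseRecord record) {
            rows.put(record.id, record);
        }

        int updateById(HouseRecord record) {
            if (record.id == null || !rows.containsKey(record.id)) {
                return 0; // no row matched the id
            }
            rows.put(record.id, record);
            return 1;
        }
    }

    // Combines BaseServiceImpl.update and HouseResourcesServiceImpl.updateHouseResources.
    static class HouseService {
        private final InMemoryMapper mapper;

        HouseService(InMemoryMapper mapper) {
            this.mapper = mapper;
        }

        boolean updateHouseResources(HouseRecord record) {
            record.updated = new Date();           // stamp the modification time
            return mapper.updateById(record) == 1; // success means exactly one row changed
        }
    }

    // What the controller does with the boolean: 204 on success, 500 otherwise.
    static int statusFor(boolean updated) {
        return updated ? 204 : 500;
    }

    public static void main(String[] args) {
        InMemoryMapper mapper = new InMemoryMapper();
        mapper.insert(new HouseRecord(1L, "old title"));
        HouseService service = new HouseService(mapper);

        System.out.println(statusFor(service.updateHouseResources(new HouseRecord(1L, "new title"))));
        System.out.println(statusFor(service.updateHouseResources(new HouseRecord(99L, "unknown id"))));
    }
}
```

One consequence of this design is that an update for an id that does not exist is reported to the client as a 500 rather than a 404, because the boolean cannot distinguish "not found" from other failures.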

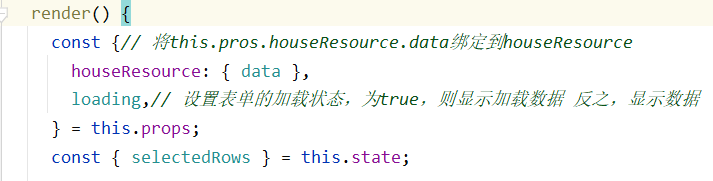

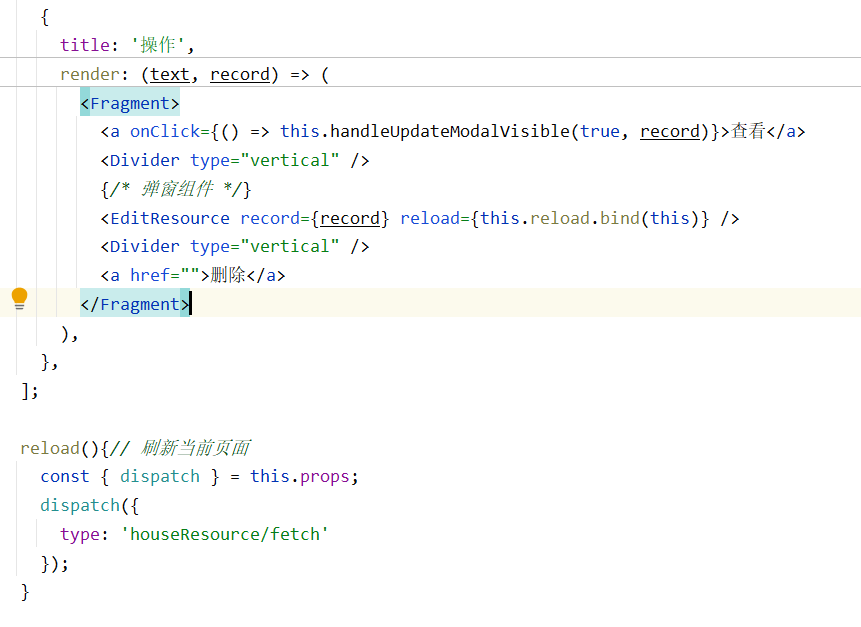

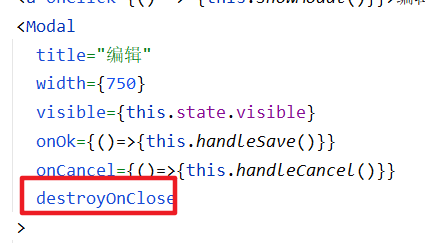

Writing the admin pages

1. Modifying the house listing list page

    // Column render function
    render: (text, record) => (
      <Fragment>
        <a onClick={() => this.handleUpdateModalVisible(true, record)}>查看</a>
        <Divider type="vertical" />
        {/* Modal component */}
        <EditResource record={record} reload={this.reload.bind(this)} />
        <Divider type="vertical" />
        <a href="">删除</a>
      </Fragment>
    ),

    // Component method: re-fetch the listing data
    reload() {
      const { dispatch } = this.props;
      dispatch({
        type: 'houseResource/fetch'
      });
    }